My Python program gets killed after about 400 loop iterations, before it finishes processing my list of files. The program regrids rainfall data from ESRI ASCII files and exports the result as netCDF.

My system is Ubuntu 18.xx with Python 3.7. I originally ran the code in JupyterLab and thought JupyterLab might be the constraint, so I ran it as a script instead, but the result was the same.

    # Libraries
    import numpy as np
    import pandas as pd
    import xarray as xr
    import xesmf as xe
    import os

    # Function to read ESRI ASCII
    def read_grd(filename):
        with open(filename) as infile:
            ncols = int(infile.readline().split()[1])
            nrows = int(infile.readline().split()[1])
            xllcorner = float(infile.readline().split()[1])
            yllcorner = float(infile.readline().split()[1])
            cellsize = float(infile.readline().split()[1])
            nodata_value = int(infile.readline().split()[1])
        lon = xllcorner + cellsize * np.arange(ncols)
        lat = yllcorner + cellsize * np.arange(nrows)
        value = np.loadtxt(filename, skiprows=6)
        return lon, lat, value
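For readers unfamiliar with the format, here is a minimal standard-library sketch of the six-line header that `read_grd` expects and of how the coordinate axes are derived from it. The sample contents and the helper names `parse_header` and `axes_from_header` are hypothetical and made up for illustration; the axis convention (corner plus cell size times index) simply mirrors the function above.

```python
import io

# Hypothetical sample file contents; the numbers are made up for illustration.
SAMPLE = """ncols 3
nrows 2
xllcorner -160.0
yllcorner 18.5
cellsize 0.5
NODATA_value -9999
1 2 3
4 5 -9999
"""


def parse_header(infile):
    """Read the six header lines into a dict keyed by lower-cased field name."""
    header = {}
    for _ in range(6):
        key, raw = infile.readline().split()[:2]
        header[key.lower()] = float(raw)
    return header


def axes_from_header(header):
    """Build lon/lat lists the same way read_grd does: corner + cellsize * index."""
    ncols = int(header["ncols"])
    nrows = int(header["nrows"])
    lon = [header["xllcorner"] + header["cellsize"] * i for i in range(ncols)]
    lat = [header["yllcorner"] + header["cellsize"] * j for j in range(nrows)]
    return lon, lat


header = parse_header(io.StringIO(SAMPLE))
lon, lat = axes_from_header(header)
print(lon)
print(lat)
```

The data rows that follow the header are what `np.loadtxt(filename, skiprows=6)` reads in the real function.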

    ### Static data setting ===============================
    # Input variables (date)
    dir_rainfall = "./HI_1994_1999/"
    dir_out = "./HI_1994_1999_regridded/"
    arr = sorted(os.listdir(dir_rainfall))  # len(arr) = 50404

    # Read the spatial data that we're going to regrid to
    ds_sm = xr.open_dataset('./Spatial_Metadata.nc', autoclose=True)
    ds_out = xr.open_dataset('./regrid_frame.nc')
    ds_out.rename({'XLONG_M': 'lon', 'XLAT_M': 'lat'}, inplace=True)
    ds_out['lat'] = ds_out['lat'].sel(Time=0, drop=True)
    ds_out['lon'] = ds_out['lon'].sel(Time=0, drop=True)

    ### LOOP ==============================================
    i = 1690  # It kept stopping every ~400 iterations, so I added this to restart from the last killed run.
    arr = arr[i:len(arr)]

    for var in arr:
        # get dateTime
        dateTime = var.replace('hawaii_', "")
        dateTime = dateTime.replace("z", "")
        print("Regridding now " + str(i) + " : " + dateTime)

        # Read rainfall ASCII and calculate rain rate
        asc = read_grd(dir_rainfall + var)
        precip_rate = np.array(asc[2] / (60.0*60.0))
        precip_rate = precip_rate.astype('float')
        precip_rate[precip_rate == -9999.] = np.nan
        x = np.repeat([asc[0]], 1, axis=0).transpose()
        y = np.repeat([asc[1]], 1, axis=0)

        # Format rain rate to netCDF
        ds = xr.Dataset({'RAINRATE': (['lat', 'lon'], precip_rate)},
                        coords={'lon': (['lon'], asc[0]),
                                'lat': (['lat'], asc[1])})

        # Regrid

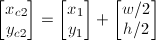

        regridder = xe.Regridder(ds, ds_out, 'bilinear', reuse_weights=True)  # create regridding frame
        dr = ds.RAINRATE
        dr_out = regridder(dr)

        # change the name of coordinates for the regridded netCDF
        dr_out.coords['west_east'] = ds_sm.x.values
        dr_out.coords['south_north'] = ds_sm.y.values
        dr_out = dr_out.rename({'west_east': 'x', 'south_north': 'y'})

        # add attributes to the regridded netCDF
        dr_out.attrs['esri_pe_string'] = ds_sm.crs.attrs['esri_pe_string']
        dr_out.attrs['units'] = 'mm/s'

        # Export the YYYYMMDDHHMMPRECIP_FORCING.nc
        dr_out.to_netcdf(dir_out + dateTime + '00.PRECIP_FORCING.nc')
        i = i + 1

The first ~400 ESRI ASCII files were converted successfully. After that, the run simply hung when I used JupyterLab; when I ran it as a script (python xxx.py), the terminal printed "Killed" after about 400 iterations.

=== [edit 7/11/2019] Add memory usage information ===

According to @zmike, it might be a memory problem, so I printed out the memory usage, and it is indeed a memory problem: the memory usage keeps accumulating from one iteration to the next.

I added the code below to my script.

    import psutil

    # Print out total memory (resident set size, in bytes)
    process = psutil.Process(os.getpid())
    print(process.memory_info().rss)