I know that reading a large number of small files in HDFS has always been an issue and has been widely discussed, but bear with me. Most Stack Overflow questions on this topic deal with reading a large number of text files; I'm trying to read a large number of small Avro files.

In addition, the text-file solutions suggest using WholeTextFileInputFormat or CombineInputFormat (https://stackoverflow.com/a/43898733/11013878), which are RDD-based. I'm using Spark 2.4 (HDFS 3.0.0), where RDD implementations are generally discouraged in favor of DataFrames. I would prefer DataFrames, but I'm open to RDD implementations as well.
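On the DataFrame side, the closest built-in counterpart to the RDD input formats mentioned above is that Spark's file sources already pack several small files into one read partition. As far as I know, this packing is controlled by `spark.sql.files.maxPartitionBytes` (default 128 MB) and `spark.sql.files.openCostInBytes` (default 4 MB). The sketch below only shows where these knobs go; the values are the defaults and the app name is hypothetical. Note that they affect how files are grouped into read tasks, not how long the file listing takes.

```scala
import org.apache.spark.sql.SparkSession

val spark = SparkSession.builder()
  .appName("avro-consolidator") // hypothetical application name
  // Upper bound on the bytes packed into a single read partition (Spark default: 128 MB)
  .config("spark.sql.files.maxPartitionBytes", (128L * 1024 * 1024).toString)
  // Estimated cost of opening one file, added to each file's size when packing
  // (Spark default: 4 MB); a larger value puts fewer small files in each partition
  .config("spark.sql.files.openCostInBytes", (4L * 1024 * 1024).toString)
  .getOrCreate()
```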

I've tried unioning DataFrames as suggested by Murtaza (https://stackoverflow.com/a/32117661/11013878), but with a large number of files I get an OOM error.

I'm using the following code:

```scala
import org.apache.spark.sql.SaveMode
import org.apache.spark.sql.functions.{col, date_format}

// `spark` is the SparkSession (SparkContext has no `read` method);
// avroConsolidator, partitionColumns and inputs are my own helpers.
val filePaths: Array[String] = avroConsolidator.getFilesInDateRangeWithExtension

// I do need to build the list of file paths explicitly, because files are
// filtered by file name; an upstream process depends on this logic.
// Example:
// Array("hdfs://server123:8020/source/Avro/weblog/2019/06/03/20190603_1530.avro",
//       "hdfs://server123:8020/source/Avro/weblog/2019/06/03/20190603_1531.avro",
//       "hdfs://server123:8020/source/Avro/weblog/2019/06/03/20190603_1532.avro")

val df_mid = spark.read.format("com.databricks.spark.avro").load(filePaths: _*)

// Derive the date column and drop rows whose timestamp could not be formatted
val df = df_mid
  .withColumn("dt", date_format(col("timeStamp"), "yyyy-MM-dd"))
  .filter(col("dt").isNotNull)

df
  .repartition(partitionColumns(inputs.logSubType).map(col): _*)
  .write
  .partitionBy(partitionColumns(inputs.logSubType): _*)
  .mode(SaveMode.Append)
  .option("compression", "snappy")
  .parquet(avroConsolidator.parquetFilePath.toString)
```
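The file-name filtering described in the code comments could be done on the driver along the lines of the pure-Scala sketch below. It assumes file names follow the `yyyyMMdd_HHmm.avro` pattern seen in the example paths; `AvroFileFilter`, `timestampOf` and `inRange` are hypothetical names, not the actual `avroConsolidator.getFilesInDateRangeWithExtension` implementation.

```scala
import java.time.LocalDateTime
import java.time.format.DateTimeFormatter
import scala.util.Try

object AvroFileFilter {
  // Assumed file-name pattern, inferred from paths like .../20190603_1530.avro
  private val NameFormat = DateTimeFormatter.ofPattern("uuuuMMdd'_'HHmm")

  /** Parses the timestamp encoded in the file name, or None if it does not match. */
  def timestampOf(path: String): Option[LocalDateTime] = {
    val name = path.substring(path.lastIndexOf('/') + 1)
    if (!name.endsWith(".avro")) None
    else Try(LocalDateTime.parse(name.stripSuffix(".avro"), NameFormat)).toOption
  }

  /** Keeps only the paths whose encoded timestamp lies in [from, until). */
  def inRange(paths: Seq[String], from: LocalDateTime, until: LocalDateTime): Seq[String] =
    paths.filter { p =>
      timestampOf(p).exists(ts => !ts.isBefore(from) && ts.isBefore(until))
    }
}
```

Because this only inspects path strings, it adds no HDFS calls beyond whatever listing produced `filePaths` in the first place.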

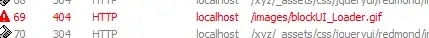

At the job level, it took 1.6 min to list 183 small files.

Oddly, the stage page in the Spark UI shows only 3 s (I don't understand why).
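One possible explanation, not verified on this cluster: in Spark 2.x, `InMemoryFileIndex` lists the input paths with a separate distributed job (the "Listing leaf files and directories" job in the UI) once the number of paths exceeds `spark.sql.sources.parallelPartitionDiscovery.threshold`, which defaults to 32. Passing 183 individual files crosses that threshold, so the listing pays for scheduling a whole job instead of making a few driver-side calls. A sketch that raises the threshold so the listing stays on the driver; the value 1000 and the app name are arbitrary assumptions:

```scala
import org.apache.spark.sql.SparkSession

val spark = SparkSession.builder()
  .appName("avro-consolidator") // hypothetical application name
  // Assumption: with up to 1000 root paths, Spark lists them on the driver
  // instead of launching a distributed listing job (default threshold: 32)
  .config("spark.sql.sources.parallelPartitionDiscovery.threshold", "1000")
  .getOrCreate()
```

Since this is a SQL runtime setting, it can presumably also be changed on an existing session with `spark.conf.set(...)` before calling `load`.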

The Avro files are stored in yyyy/MM/dd partitions, e.g. hdfs://server123:8020/source/Avro/weblog/2019/06/03.
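Since the files are already grouped into one directory per day, another option is to give Spark the distinct day directories rather than every file path. The helper below (`DayDirs.parentDirs` is a hypothetical name) derives those directories from the file list:

```scala
object DayDirs {
  /** Distinct parent directories of the given file paths, in first-seen order. */
  def parentDirs(paths: Seq[String]): Seq[String] =
    paths
      .filter(_.contains('/'))                      // skip malformed entries
      .map(p => p.substring(0, p.lastIndexOf('/'))) // strip the file name
      .distinct
}
```

The directories could then be passed to `spark.read.format("com.databricks.spark.avro").load(dirs: _*)`, with the file-name filter reapplied on `input_file_name()`. The trade-off, as far as I know, is that each day directory is listed with a single call, but every file in those directories is read, because a filter on `input_file_name()` does not prune files before the scan.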

Is there any way to speed up the listing of leaf files? As you can see from the screenshot, consolidating into Parquet files takes only 6 s, but listing the files takes 1.3 min.