I am developing a Multi-GPU accelerated Flow solver. Currently I am trying to implement communication hiding. That means, while data is exchanged the GPU computes the part of the mesh, that is not involved in communication and computes the rest of the mesh, once communication is done.

I am trying to solve this by having one stream (computeStream) for the long run time kernel (fluxKernel) and one (communicationStream) for the different phases of communication. The computeStream has a very low priority, in order to allow kernels on the communicationStream to interleave the fluxKernel, even though it uses all resources.

These are the streams I am using:

int priority_high, priority_low;

cudaDeviceGetStreamPriorityRange(&priority_low , &priority_high ) ;

cudaStreamCreateWithPriority (&communicationStream, cudaStreamNonBlocking, priority_high );

cudaStreamCreateWithPriority (&computeStream , cudaStreamNonBlocking, priority_low );

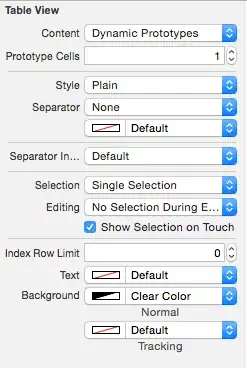

The desired cocurrency pattern looks like this:

I need synchronization of the communicationStream before I send the data via MPI, to ensure that the data is completely downloaded, before I send it on.

In the following listing I show the structure of what I am currently doing. First I start the long run time fluxKernel for the main part of the mesh on the computeStream. Then I start a sendKernel that collects the data that should be send to the second GPU and subsequently download it to the host (I cannot use cuda-aware MPI due to hardware limitations). The data is then send non-blocking per MPI_Isend and blocking receive (MPI_recv) is used subsequently. When the data is received the procedure is done backwards. First the data is uploaded to the device and then spread to the main data structure by recvKernel. Finally the fluxKernel is called for the remaining part of the mesh on the communicationStream.

Note, that before and after the shown code kernels are run on the default stream.

{ ... } // Preparations

// Start main part of computatation on first stream

fluxKernel<<< ..., ..., 0, computeStream >>>( /* main Part */ );

// Prepare send data

sendKernel<<< ..., ..., 0, communicationStream >>>( ... );

cudaMemcpyAsync ( ..., ..., ..., cudaMemcpyDeviceToHost, communicationStream );

cudaStreamSynchronize( communicationStream );

// MPI Communication

MPI_Isend( ... );

MPI_Recv ( ... );

// Use received data

cudaMemcpyAsync ( ..., ..., ..., cudaMemcpyHostToDevice, communicationStream );

recvKernel<<< ..., ..., 0, communicationStream >>>( ... );

fluxKernel<<< ..., ..., 0, communicationStream >>>( /* remaining Part */ );

{ ... } // Rest of the Computations

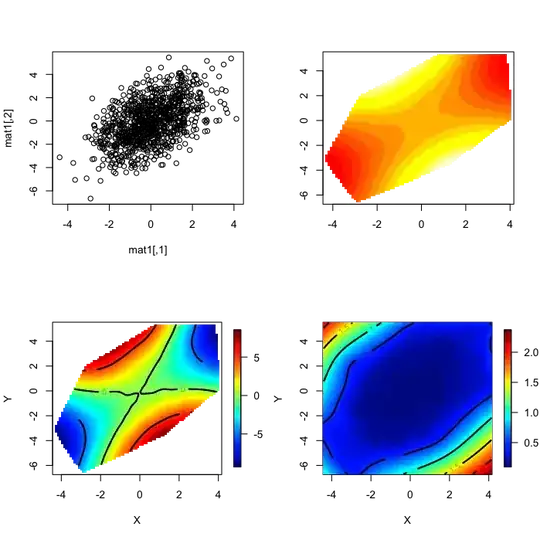

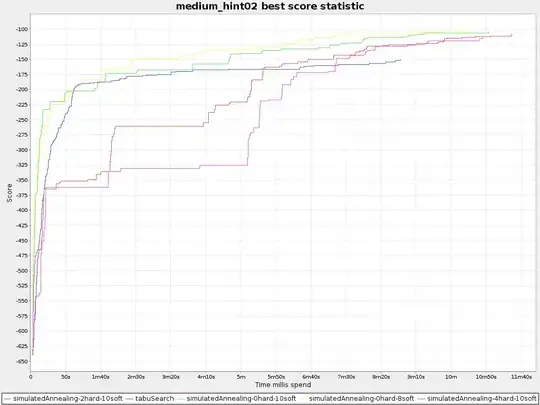

I used nvprof and Visual Profiler to see, whether the stream actually execute concurrently. This is the result:

I observe that the sendKernel (purple), upload, MPI communication and download are concurrent to the fluxKernel. The recvKernel (red) only starts ofter the other stream is finished, though. Turning of the synchronization does not solve the problem:

For my real application I have not only one communication, but multiple. I tested this with two communications as well. The procedure is:

sendKernel<<< ..., ..., 0, communicationStream >>>( ... );

cudaMemcpyAsync ( ..., ..., ..., cudaMemcpyDeviceToHost, communicationStream );

cudaStreamSynchronize( communicationStream );

MPI_Isend( ... );

sendKernel<<< ..., ..., 0, communicationStream >>>( ... );

cudaMemcpyAsync ( ..., ..., ..., cudaMemcpyDeviceToHost, communicationStream );

cudaStreamSynchronize( communicationStream );

MPI_Isend( ... );

MPI_Recv ( ... );

cudaMemcpyAsync ( ..., ..., ..., cudaMemcpyHostToDevice, communicationStream );

recvKernel<<< ..., ..., 0, communicationStream >>>( ... );

MPI_Recv ( ... );

cudaMemcpyAsync ( ..., ..., ..., cudaMemcpyHostToDevice, communicationStream );

recvKernel<<< ..., ..., 0, communicationStream >>>( ... );

The result is similar to the one with one communication (above), in the sense that the second kernel invocation (this time it is a sendKernel) is delayed till the kernel on the computeStream is finished.

Hence the overall observation is, that the second kernel invocation is delayed, independent of which kernel this is.

Can you explain, why the GPU is synchronizing in this way, or how I can get the second Kernel on communicationStream to also run concurrently to the computeStream?

Thank you very much.

Edit 1: complete rework of the question

Minimal Reproducible Example

I built a minimal reproducible Example. In the end the code plots the int data to the terminal. The correct last value would be 32778 (=(32*1024-1) + 1 + 10). At the beginning I added an option integer to test 3 different options:

- 0: Intended version with synchronisation before CPU modification of data

- 1: Same as 0, but without synchronization

- 2: dedicated stream for memcpys and no syncronization

#include <iostream>

#include <cuda.h>

#include <cuda_runtime.h>

#include <device_launch_parameters.h>

const int option = 0;

const int numberOfEntities = 2 * 1024 * 1024;

const int smallNumberOfEntities = 32 * 1024;

__global__ void longKernel(float* dataDeviceIn, float* dataDeviceOut, int numberOfEntities)

{

int index = blockIdx.x * blockDim.x + threadIdx.x;

if(index >= numberOfEntities) return;

float tmp = dataDeviceIn[index];

#pragma unroll

for( int i = 0; i < 2000; i++ ) tmp += 1.0;

dataDeviceOut[index] = tmp;

}

__global__ void smallKernel_1( int* smallDeviceData, int numberOfEntities )

{

int index = blockIdx.x * blockDim.x + threadIdx.x;

if(index >= numberOfEntities) return;

smallDeviceData[index] = index;

}

__global__ void smallKernel_2( int* smallDeviceData, int numberOfEntities )

{

int index = blockIdx.x * blockDim.x + threadIdx.x;

if(index >= numberOfEntities) return;

int value = smallDeviceData[index];

value += 10;

smallDeviceData[index] = value;

}

int main(int argc, char **argv)

{

cudaSetDevice(0);

float* dataDeviceIn;

float* dataDeviceOut;

cudaMalloc( &dataDeviceIn , sizeof(float) * numberOfEntities );

cudaMalloc( &dataDeviceOut, sizeof(float) * numberOfEntities );

int* smallDataDevice;

int* smallDataHost;

cudaMalloc ( &smallDataDevice, sizeof(int) * smallNumberOfEntities );

cudaMallocHost( &smallDataHost , sizeof(int) * smallNumberOfEntities );

cudaStream_t streamLong;

cudaStream_t streamSmall;

cudaStream_t streamCopy;

int priority_high, priority_low;

cudaDeviceGetStreamPriorityRange(&priority_low , &priority_high ) ;

cudaStreamCreateWithPriority (&streamLong , cudaStreamNonBlocking, priority_low );

cudaStreamCreateWithPriority (&streamSmall, cudaStreamNonBlocking, priority_high );

cudaStreamCreateWithPriority (&streamCopy , cudaStreamNonBlocking, priority_high );

//////////////////////////////////////////////////////////////////////////

longKernel <<< numberOfEntities / 32, 32, 0, streamLong >>> (dataDeviceIn, dataDeviceOut, numberOfEntities);

//////////////////////////////////////////////////////////////////////////

smallKernel_1 <<< smallNumberOfEntities / 32, 32, 0 , streamSmall >>> (smallDataDevice, smallNumberOfEntities);

if( option <= 1 ) cudaMemcpyAsync( smallDataHost, smallDataDevice, sizeof(int) * smallNumberOfEntities, cudaMemcpyDeviceToHost, streamSmall );

if( option == 2 ) cudaMemcpyAsync( smallDataHost, smallDataDevice, sizeof(int) * smallNumberOfEntities, cudaMemcpyDeviceToHost, streamCopy );

if( option == 0 ) cudaStreamSynchronize( streamSmall );

// some CPU modification of data

for( int i = 0; i < smallNumberOfEntities; i++ ) smallDataHost[i] += 1;

if( option <= 1 ) cudaMemcpyAsync( smallDataDevice, smallDataHost, sizeof(int) * smallNumberOfEntities, cudaMemcpyHostToDevice, streamSmall );

if( option == 2 ) cudaMemcpyAsync( smallDataDevice, smallDataHost, sizeof(int) * smallNumberOfEntities, cudaMemcpyHostToDevice, streamCopy );

smallKernel_2 <<< smallNumberOfEntities / 32, 32, 0 , streamSmall >>> (smallDataDevice, smallNumberOfEntities);

//////////////////////////////////////////////////////////////////////////

cudaDeviceSynchronize();

cudaMemcpy( smallDataHost, smallDataDevice, sizeof(int) * smallNumberOfEntities, cudaMemcpyDeviceToHost );

for( int i = 0; i < smallNumberOfEntities; i++ ) std::cout << smallDataHost[i] << "\n";

return 0;

}

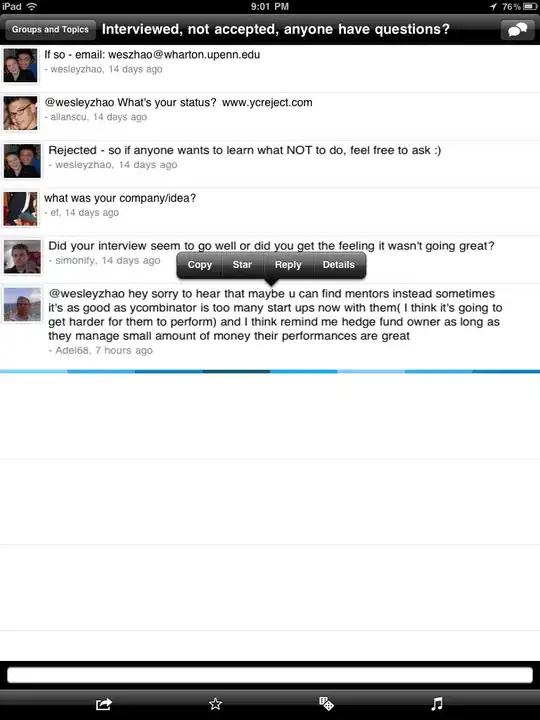

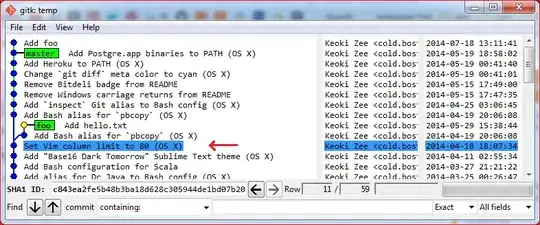

With code I see the same behavior as described above:

Option 1 (wrong reslut, +1 from CPU missing):

Option 2 (completely wrong result, all 10, dowload before smallKernel_1)

Solutions:

Running Option 0 under Linux (on the suggestion in Roberts answere), brings the expected behavior!