I'm trying to model my ETL jobs with Airflow. All jobs have roughly the same structure:

- Extract from a transactional database (N extractions, each one reading 1/N of the table)

- Then transform the data

- Finally, insert the data into an analytic database

So each job is E >> T >> L.
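To make the "N extractions, each one reading 1/N of the table" idea concrete, here is a minimal sketch in plain Python. It assumes the table has an integer primary key and that splitting its id range evenly is an acceptable way to partition; `split_id_range` is a hypothetical helper name, not part of Airflow.

```python
def split_id_range(min_id: int, max_id: int, n: int) -> list[tuple[int, int]]:
    """Split the inclusive id range [min_id, max_id] into at most n contiguous chunks.

    Assumption: rows are identified by an integer key, so each extraction
    can read one chunk with a `WHERE id BETWEEN start AND end` style filter.
    """
    if n <= 0:
        raise ValueError("n must be positive")
    total = max_id - min_id + 1
    base, extra = divmod(total, n)
    ranges = []
    start = min_id
    for i in range(n):
        # The first `extra` chunks get one additional id so sizes differ by at most 1.
        size = base + (1 if i < extra else 0)
        if size == 0:
            break  # fewer ids than requested partitions
        end = start + size - 1
        ranges.append((start, end))
        start = end + 1
    return ranges
```

Each returned `(start, end)` pair would then be handed to one of the N extraction tasks.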

My Company Routine chains three such jobs, USER >> PRODUCT >> ORDER, and has to run every 2 hours. Once it finishes, I will have all the data from users and purchases.

How can I model it?

- Should the Company Routine (USER >> PRODUCT >> ORDER) be a DAG, with each job as a separate task? In that case, how can I model each step (E, T, L) inside the task and make them behave like "sub-tasks" in Airflow?

- Or should each job be a separate DAG? In that case, how can I say that the Company Routine (USER >> PRODUCT >> ORDER) has to run every 2 hours and that the jobs have these dependencies? As far as I can tell, we can only set a cron schedule and dependencies between tasks inside a DAG.

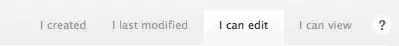

Diagram:

Right now I'm modeling each Company Routine (USER >> PRODUCT >> ORDER) as a DAG, with each job as a separate task.