I am trying to convert Windows wchar_t[] to a UTF-8 encoding char[] so that calls to WriteFile will produce UTF-8 encoded files. I have the following code:

#include <windows.h>

#include <fileapi.h>

#include <stringapiset.h>

int main() {

HANDLE file = CreateFileW(L"test.txt", GENERIC_ALL, 0, NULL, OPEN_ALWAYS, FILE_ATTRIBUTE_NORMAL, NULL);

const wchar_t source[] = L"hello";

char buffer[100];

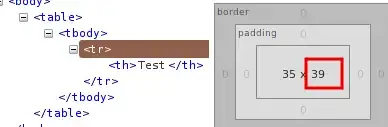

WideCharToMultiByte(CP_UTF8, 0, source, sizeof(source)/sizeof(source[0]), buffer, sizeof(buffer)/sizeof(buffer[0]), NULL, NULL);

WriteFile(file, buffer, sizeof(buffer), NULL, NULL);

return CloseHandle(file);

}

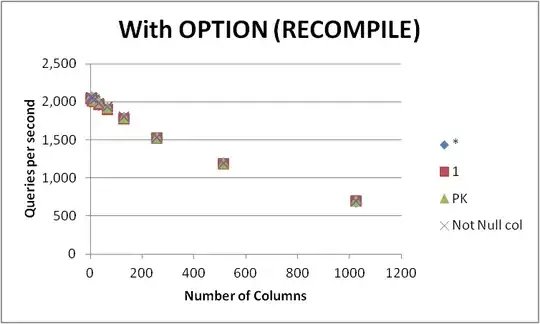

This produces a file containing: "hello" but also a large amount of garbage after it.

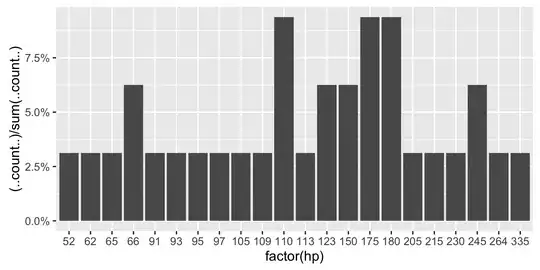

Something about this caused me to think the issue was more than just simply dumping the excess characters in buffer and that the conversion wasn't happening properly, so I changed the source text as follows:

const wchar_t source[] = L"привет";

And this time got the following garbage:

So then thinking maybe it's getting confused because it's looking for a null terminator and not finding one, even though lengths are specified? So I change the source string again:

const wchar_t source[] = L"hello\n";

And got the following garbage:

I'm fairly new to the WinAPI's, and am not primarily a C developer, so I'm sure I'm missing something, I just don't know what else to try.

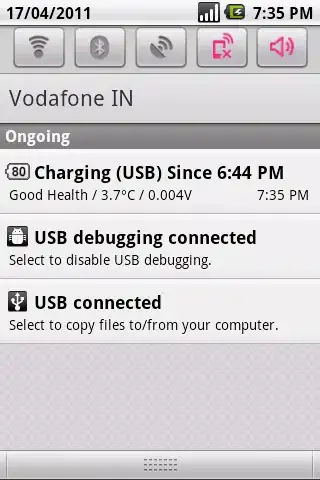

edit: Following the advice from RbMm has removed the excess garbage, so English prints correctly. However, the Russian is still garbage, just shorter garbage. Contrary to zett42's comment, I am most definately using a UTF-8 text editor.

UTF-8 doesn't need a BOM, but adding one in anyways produces:

Well that's odd. I expected the same text with a slightly larger binary size. Instead there's nothing.

edit:

Since some are keen on sticking to the idea that I'm using WordPad, here's what WordPad looks like

I'm clearly not using WordPad. I'm using VS Code, although the garbage is indentical whether opened in VS Code, Visual Studio, Notepad, or Notepad++.

edit:

Here's the hex dump of the output from Russian: