I write all my micro-services in Java. I want to use multiple consumers with Amazon SQS, but each consumer runs as multiple instances on AWS behind a load balancer.

I use SNS for the input stream.

I use an SQS Standard Queue after SNS.

I found the same question on Stack Overflow ("Using Amazon SQS with multiple consumers").

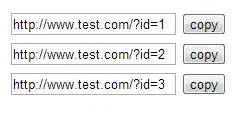

This sample is from:

https://aws.amazon.com/fr/blogs/aws/queues-and-notifications-now-best-friends/

When I read the SQS Standard Queue documentation, I see that occasionally more than one copy of a message is delivered.

Each message has a message_id. How can I make sure that multiple instances of the same micro-service do not process the same message when it has been delivered more than once? My idea is to record the message_id in a DynamoDB table, but if several instances of the same micro-service do this at the same time, how can I take a lock on the read (a bit like a SELECT FOR UPDATE)?

For example, multiple instances of the same micro-service "Scan Metadata".
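
To make the idea concrete, here is a minimal sketch of the "claim the message_id before processing" step. It is only an illustration: it uses an in-memory `ConcurrentHashMap` as a stand-in for the DynamoDB table, and the names `ProcessedMessageStore`, `ScanMetadataWorker`, `tryClaim` and `handle` are hypothetical. With a real DynamoDB table, I assume the equivalent of `putIfAbsent` would be a conditional `PutItem` using the condition expression `attribute_not_exists(message_id)`, which is rejected when the item already exists, so there would be no separate read to lock.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;

// Hypothetical in-memory stand-in for a DynamoDB table keyed by message_id.
final class ProcessedMessageStore {
    private final ConcurrentMap<String, String> claims = new ConcurrentHashMap<>();

    // Atomically records messageId for instanceId.
    // Returns true only for the first caller; later callers get false.
    // (Assumed DynamoDB analogue: PutItem with attribute_not_exists(message_id).)
    boolean tryClaim(String messageId, String instanceId) {
        return claims.putIfAbsent(messageId, instanceId) == null;
    }

    // Returns the instance that claimed the message, or null if unclaimed.
    String ownerOf(String messageId) {
        return claims.get(messageId);
    }
}

// Hypothetical consumer: one object per running instance of "Scan Metadata".
final class ScanMetadataWorker {
    private final String instanceId;
    private final ProcessedMessageStore store;
    private int processedCount = 0;

    ScanMetadataWorker(String instanceId, ProcessedMessageStore store) {
        this.instanceId = instanceId;
        this.store = store;
    }

    // Processes the message only if this instance wins the claim.
    // Returns false when the message_id was already claimed (duplicate delivery).
    boolean handle(String messageId, String body) {
        if (!store.tryClaim(messageId, instanceId)) {
            return false; // duplicate: skip the work, the message can still be deleted
        }
        processedCount++; // placeholder for the real metadata scan
        return true;
    }

    int processedCount() {
        return processedCount;
    }
}
```

In this sketch, two instances sharing the same store and receiving the same message_id would see `handle` return `true` for one of them and `false` for the other. It does not cover the case where the winning instance crashes after claiming but before finishing the work; that would need something extra, such as an expiry on the claim.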