The problem is that the two spatial dimensions of an image have locality: things that are nearby are expected to be related in some fundamental way. E.g. a pixel near a hair pixel is expected to be a hair pixel, a priori. The different channels, however, have no such relationship. When you only have 3 channels, a 3D convolution whose kernel spans all 3 of them is equivalent to being fully connected in z. When you have 27 channels (e.g. in the middle of the net), why would any 3 channels be considered "close" to each other?

This answer explains the difference nicely.

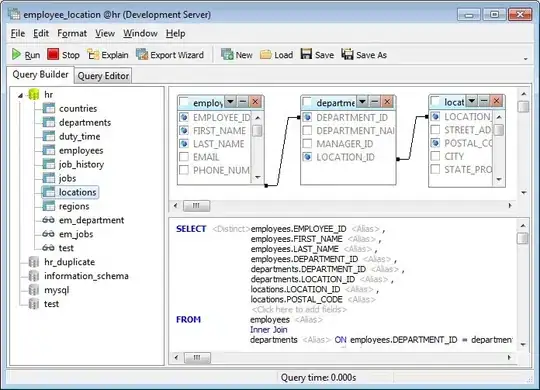

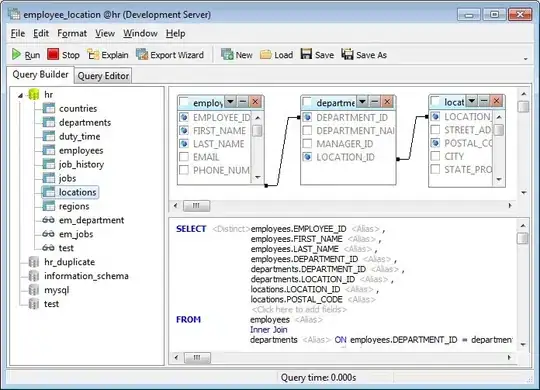

Doing a "fully-connected" relationship over the channels is what most libraries do by default. Note this line in particular: "...a filter / kernel tensor of shape [filter_height, filter_width, in_channels, out_channels]". For an input vector of size in_channels, a matrix of size [in_channels, out_channels] is a fully-connected layer. So the filter can be thought of as a fully-connected layer applied to a "patch" of the image of size [filter_height, filter_width], across all of its channels.
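As a minimal sketch of that shape convention (using NumPy rather than any particular deep learning library, with sizes chosen arbitrarily for illustration), each spatial offset of the filter holds a dense [in_channels, out_channels] matrix:

```python
import numpy as np

rng = np.random.default_rng(0)

# Illustrative sizes only; 27 input channels echoes the example in the text.
filter_height, filter_width, in_channels, out_channels = 3, 3, 27, 8
kernel = rng.standard_normal(
    (filter_height, filter_width, in_channels, out_channels)
)

# At one spatial offset, the filter is a dense in_channels -> out_channels map.
per_offset = kernel[0, 0]                  # shape (in_channels, out_channels)
pixel = rng.standard_normal(in_channels)   # all channels of a single pixel
contribution = pixel @ per_offset          # shape (out_channels,)

# Total number of weights in the layer (ignoring any bias term).
num_params = kernel.size
```

Nothing in the filter restricts which input channels feed which output channel; every pair has its own weight.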

To illustrate, on a single channel, a regular plain old image filter takes a patch of image and maps that patch to a single pixel in a new image. Like so: (image credit)

On the other hand, suppose that we have multiple channels. Instead of performing a linear mapping from a 3x3 patch to a 1x1 pixel, we perform a linear mapping from a 3x3xin_channels patch to a 1x1xout_channels set of pixels. How do we do this? Well, a linear mapping is just a matrix. Note that a 3x3xin_channels patch can be written as a vector with 3*3*in_channels entries. A 1x1xout_channels set of pixels can be written as a vector with out_channels entries. A linear mapping between the two is given by a matrix with 3*3*in_channels rows and out_channels columns. The entries of that matrix are the parameters of that layer of the network. The layer works by simply multiplying the in vector (as a row vector) by the matrix of weights to get the out vector. This is repeated over all patches of the image. (In practice, libraries don't loop over the patches one at a time; they use faster equivalent formulations, but the result is the same.)
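The patch-to-vector description can be checked with a small NumPy sketch. The function names below are made up for illustration, and the setup assumes stride 1, no padding, and no bias; it is not how any library implements the operation, only a demonstration that the flatten-and-multiply view matches the element-by-element sum:

```python
import numpy as np

def conv2d_as_matmul(image, kernel):
    """Flatten each patch and multiply by a (fh*fw*cin, cout) weight matrix."""
    fh, fw, cin, cout = kernel.shape
    height, width, _ = image.shape
    weights = kernel.reshape(fh * fw * cin, cout)
    out = np.empty((height - fh + 1, width - fw + 1, cout))
    for y in range(out.shape[0]):
        for x in range(out.shape[1]):
            patch = image[y:y + fh, x:x + fw, :]     # fh x fw x cin patch
            out[y, x] = patch.reshape(-1) @ weights  # vector times matrix
    return out

def conv2d_direct(image, kernel):
    """Same operation written as an explicit sum over offsets and channels."""
    fh, fw, cin, cout = kernel.shape
    height, width, _ = image.shape
    out = np.zeros((height - fh + 1, width - fw + 1, cout))
    for y in range(out.shape[0]):
        for x in range(out.shape[1]):
            for i in range(fh):
                for j in range(fw):
                    for c in range(cin):
                        out[y, x] += image[y + i, x + j, c] * kernel[i, j, c]
    return out

rng = np.random.default_rng(0)
image = rng.standard_normal((5, 6, 4))      # height, width, in_channels
kernel = rng.standard_normal((3, 3, 4, 2))  # fh, fw, in_channels, out_channels

out_matmul = conv2d_as_matmul(image, kernel)
out_direct = conv2d_direct(image, kernel)
print(out_matmul.shape, np.allclose(out_matmul, out_direct))
```

The two agree because the patch and the kernel are flattened in the same order, so entry k of the patch vector always meets row k of the weight matrix.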

To illustrate, the mapping takes this 3x3xin_channels column:

To this 1x1xout_channels stack of pixels:

Now, what you are proposing is to do something with the following bit:

There is no mathematical reason why you can't do something with that 3x3x3 patch containing only 3 channels of your whole set of in_channels. However, whatever 3 channels you choose is totally arbitrary, and they have no intrinsic relationship to one another that would suggest that treating them as being "nearby" would help.

To reiterate, in an image, the pixels that are near each other are expected to be "similar" or "related" in some sense. This is why a convolution works at all. If you jumbled up the pixels and then did a convolution, it would be worthless. On that note, all of the channels are just a jumble. There is no "nearby relatedness" property along the channels. E.g. the "red" channel isn't near the "green" channel OR the "blue" channel, because "nearness" doesn't make any sense between the channels. Since "nearness" isn't a property of the channel dimension, then doing a convolution in that dimension probably isn't going to be useful.
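One way to see that channel order carries no information for the usual all-channels mapping is to shuffle the channels and shuffle the weights the same way. The sketch below (NumPy, with arbitrary illustrative sizes and a hypothetical helper `apply_filter`) produces the same output either way:

```python
import numpy as np

rng = np.random.default_rng(0)
in_channels, out_channels = 27, 5
patch = rng.standard_normal((3, 3, in_channels))
kernel = rng.standard_normal((3, 3, in_channels, out_channels))

def apply_filter(patch, kernel):
    # Sum over height, width and ALL input channels for each output channel.
    return np.einsum("hwc,hwco->o", patch, kernel)

# Jumble the channel order, applying the same permutation to the weights.
perm = rng.permutation(in_channels)
original = apply_filter(patch, kernel)
shuffled = apply_filter(patch[:, :, perm], kernel[:, :, perm, :])

same = np.allclose(original, shuffled)
print(same)
```

A filter that only looked at a small window of "adjacent" channels would have no such invariance: which channels end up grouped together would depend entirely on an ordering that means nothing.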

On the other hand, we can simply take ALL of the in_channels as input to generate ALL of the out_channels simultaneously, and let them influence each other in a linear sort of way. Note that the linear transformation described involves a sort of cross-pollination between the channels. For example, for the first layer of the network, taking in a 3x3 patch of r,g,b channels labeled r_1_1-r_3_3 etc., a single pixel in a single channel of the output from that patch would look like:

A*r_1_1 + B*r_1_2 + ... + C*r_3_3 + D*b_1_1 + E*b_1_2 + ... + F*b_3_3 + G*g_1_1 + ...

Where the capital letters are entries of the weight matrix.
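In general form, the same sum can be written compactly. The notation here is assumed for illustration and is not from the original: x is the input patch, W is the filter tensor of shape [filter_height, filter_width, in_channels, out_channels], y_o is the output pixel in channel o, and any bias term is left out.

$$
y_o = \sum_{i=1}^{3} \sum_{j=1}^{3} \sum_{c \in \{r, g, b\}} W_{i,j,c,o} \, x_{i,j,c}
$$

Every input channel c appears in the sum for every output channel o, with its own weight.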

So your question, "Would a strictly 2D CNN would perform poorly?", is based on an assumption that the convolutional layer doesn't include any "mixing" between the various channels. This is not the case. The in_channels are ALL combined in a linear mapping to obtain the out_channels.