I have a web application which converts PCL files to PDF by running a scheduler task every 10 seconds. On each run it takes at most 20 PCL files from a directory and converts them to PDF.
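To make the setup concrete, here is a minimal sketch of such a polling task. It is not the actual application code: the class name `PclBatchPicker`, the `*.pcl` glob, and the `convertToPdf` placeholder are assumptions made for illustration only.

```java
import java.io.IOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

public class PclBatchPicker {

    // Assumed limit, matching the "at most 20 files per run" described above.
    static final int MAX_FILES_PER_RUN = 20;

    /** Returns at most {@code limit} files matching *.pcl from {@code dir}. */
    static List<Path> nextBatch(Path dir, int limit) throws IOException {
        List<Path> batch = new ArrayList<>();
        // try-with-resources closes the directory handle even if iteration fails.
        try (DirectoryStream<Path> files = Files.newDirectoryStream(dir, "*.pcl")) {
            for (Path file : files) {
                if (batch.size() >= limit) {
                    break;
                }
                batch.add(file);
            }
        }
        return batch;
    }

    /** Hypothetical placeholder for the real PCL-to-PDF conversion. */
    static void convertToPdf(Path pcl) {
        // conversion logic would go here
    }

    /** Starts a task that runs 10 seconds after the previous run has finished. */
    static ScheduledExecutorService start(Path dir) {
        ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor();
        scheduler.scheduleWithFixedDelay(() -> {
            try {
                for (Path pcl : nextBatch(dir, MAX_FILES_PER_RUN)) {
                    convertToPdf(pcl);
                }
            } catch (Exception e) {
                // An exception escaping the task would cancel all later runs.
                e.printStackTrace();
            }
        }, 0, 10, TimeUnit.SECONDS);
        return scheduler;
    }
}
```

With a fixed delay (rather than a fixed rate), a slow run cannot overlap with the next one, which rules out one possible source of piling-up work.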

The first few tasks run fine, but the application gradually gets slower and eventually a GC overhead limit exceeded error is raised.

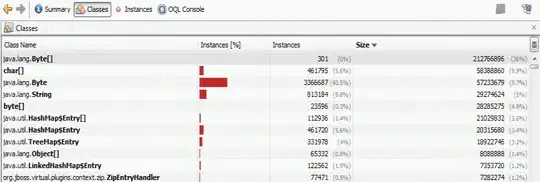

I've tried to analyze this memory leak with VisualVM, and here is some output from the heap dump:

It's strange that such a large number of byte instances (3,366,687!) is shown. The application also uses streams, and I have checked carefully that all of these streams are closed once their related operations are done.
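For reference, this is a minimal sketch of what "closed when done" can look like with try-with-resources (Java 7+). The class `StreamCopy` and its methods are hypothetical and are not taken from the conversion code; the point is only that the streams get closed even when an exception is thrown halfway through.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.nio.file.Files;
import java.nio.file.Path;

public class StreamCopy {

    /** Copies everything from {@code in} to {@code out}; returns the byte count. */
    static long copy(InputStream in, OutputStream out) throws IOException {
        byte[] chunk = new byte[1024]; // one reusable buffer per call
        long total = 0;
        int n;
        while ((n = in.read(chunk)) != -1) {
            out.write(chunk, 0, n);
            total += n;
        }
        return total;
    }

    /** Opens both files, copies, and closes both streams in all cases. */
    static long copyFile(Path source, Path target) throws IOException {
        try (InputStream in = Files.newInputStream(source);
             OutputStream out = Files.newOutputStream(target)) {
            return copy(in, out);
        }
    }
}
```

A stream that is closed only on the success path (a `close()` call at the end of a method, outside `finally`) can still leak when a conversion fails, so that is one thing worth re-checking.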

I use byte in a single class in three methods:

    byte[] buf = new byte[1024];

    public void initBuf() {
        if (buf != null) {
            for (int i = 0; i < buf.length; i++) {
                buf[i] = (byte) 0x00;
            }
            pdf_y = PageSize.A4.getHeight();
        }
    }

    public void appendBuf(char ch) {
        if (ch == '\n') {
            processChunk();
            drawChunks();
            pdf_newline();
        } else if (ch != '\r') {
            buf[buf_index++] = (byte) (0xff & ch);
        }
    }

    public void resetBuf() {
        for (int i = buf_index; i >= 0; i--) {
            buf[i] = (byte) 0x00;
        }
        buf_index = 0;
    }
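As written, `appendBuf` never checks `buf_index` against `buf.length`, so a line longer than 1024 characters without a `'\n'` would throw an `ArrayIndexOutOfBoundsException`. A bounded variant of the same buffer might look like the sketch below. The class name `LineBuffer` and the choice to drop characters when the buffer is full are assumptions for illustration, not the actual behaviour of my class.

```java
import java.util.Arrays;

public class LineBuffer {

    private final byte[] buf = new byte[1024];
    private int bufIndex = 0;

    /**
     * Appends one character as a single byte.
     * Returns false (and drops the character) when the buffer is full.
     */
    public boolean append(char ch) {
        if (bufIndex >= buf.length) {
            return false;
        }
        buf[bufIndex++] = (byte) (0xff & ch);
        return true;
    }

    /** Clears only the part of the buffer that was written, then rewinds. */
    public void reset() {
        Arrays.fill(buf, 0, bufIndex, (byte) 0);
        bufIndex = 0;
    }

    /** Number of bytes currently stored. */
    public int length() {
        return bufIndex;
    }
}
```

This single 1024-byte array is reused for every line, so on its own it would not explain millions of instances in the heap dump.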

Where would you advise me to look? How can I identify which part of my code is causing this?

I cannot post all the code because there are more classes involved in this conversion, but I really hope you can help me with some advice, because I have spent a lot of time on this and the memory issue still persists.

Thanks in advance