About 8-9 years ago I saw a tool for Visual Studio (I don't remember its name) that visualized function calls and their performance. I really liked it, so I'm wondering whether there is anything similar for Python. Let's say you have three functions:

    def first_func():
        ...

    def second_func():
        ...
        for i in range(10):
            first_func()
        ...

    def third_func():
        ...
        for i in range(5):
            second_func()
        ...

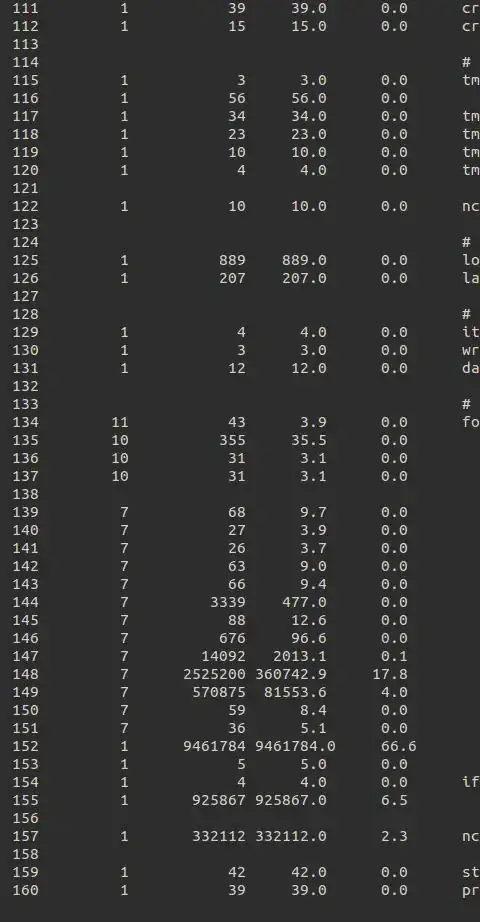

The tool's final report looked something like this (it also drew connection diagrams):

    first_func[avg 2ms] <--50 times--< second_func[avg 25ms] <--5 times--< third_func[avg 140ms]

A tool that reports this automatically would make it much easier to find bottlenecks in a system, especially a large one.
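Python's standard library already covers the measurement side: `cProfile` records per-function call counts and times, and `pstats.Stats.print_callers` lists, for each matching function, which functions called it and how often, which is the textual core of the report above. A minimal sketch, reusing the three example functions with a hypothetical stand-in workload in `first_func`:

```python
import cProfile
import io
import pstats


def first_func():
    return sum(i * i for i in range(10_000))  # stand-in for real work


def second_func():
    for _ in range(10):
        first_func()


def third_func():
    for _ in range(5):
        second_func()


profiler = cProfile.Profile()
profiler.enable()
third_func()
profiler.disable()

out = io.StringIO()
stats = pstats.Stats(profiler, stream=out)
stats.sort_stats("cumulative")
# For each function whose name matches the regex, list its callers with
# call counts and times.
stats.print_callers("first_func|second_func")
print(out.getvalue())
```

For a graphical view, the profile can be written to disk with `stats.dump_stats("out.prof")` (or produced directly with `python -m cProfile -o out.prof script.py`) and opened in a third-party viewer; tools such as gprof2dot (which renders a Graphviz call graph) or SnakeViz read this format.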