I am training Doc2Vec with Gensim on a machine with 24 virtual CPU cores and 100 GB of memory, but CPU usage always stays around 200%, no matter how I change the number of cores.

top

htop

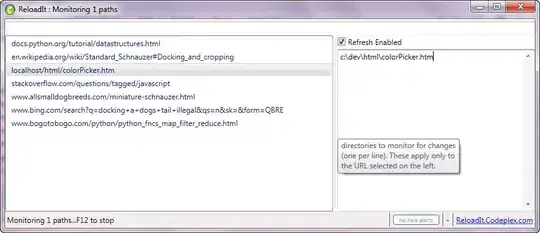

The two screenshots above show the CPU usage percentage, which indicates that the CPU is not being used efficiently.

    import multiprocessing
    from collections import OrderedDict

    import gensim.models.doc2vec
    from gensim.models.doc2vec import Doc2Vec

    cores = multiprocessing.cpu_count()
    assert gensim.models.doc2vec.FAST_VERSION > -1, "This will be painfully slow otherwise"

    simple_models = [
        # PV-DBOW plain
        Doc2Vec(dm=0, vector_size=100, negative=5, hs=0, min_count=2, sample=0,
                epochs=20, workers=cores),
        # PV-DM w/ default averaging; a higher starting alpha may improve CBOW/PV-DM modes
        Doc2Vec(dm=1, vector_size=100, window=10, negative=5, hs=0, min_count=2, sample=0,
                epochs=20, workers=cores, alpha=0.05, comment='alpha=0.05'),
        # PV-DM w/ concatenation - big, slow, experimental mode
        # window=5 (both sides) approximates paper's apparent 10-word total window size
        Doc2Vec(dm=1, dm_concat=1, vector_size=100, window=5, negative=5, hs=0, min_count=2, sample=0,
                epochs=20, workers=cores),
    ]

    # all_x_w2v is my corpus of tagged documents (defined elsewhere)
    for model in simple_models:
        model.build_vocab(all_x_w2v)
        print("%s vocabulary scanned & state initialized" % model)

    models_by_name = OrderedDict((str(model), model) for model in simple_models)

Edit:

I tried using the corpus_file parameter instead of documents, and that resolved the problem above. However, I now need to adjust my code and convert all_x_w2v to a file, and there is no direct way to do that for all_x_w2v.
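
For reference, here is a minimal sketch of how the conversion might look. It assumes all_x_w2v is an iterable of TaggedDocument-like objects with `words` and `tags` attributes (my code above does not show this), and that corpus_file expects one document per line with tokens separated by spaces. `TaggedDoc` and `write_corpus_file` are hypothetical names used only for illustration; `TaggedDoc` is a stand-in so the snippet runs without Gensim. Since in corpus_file mode the document tags become line numbers, the helper returns the original tags in line order so they can be mapped back later.

```python
from collections import namedtuple

# Hypothetical stand-in for gensim's TaggedDocument (same field names),
# used only so this sketch runs without Gensim installed.
TaggedDoc = namedtuple("TaggedDoc", ["words", "tags"])


def write_corpus_file(docs, path):
    """Write one document per line, tokens joined by single spaces.

    Returns the original tags in line order, so that line number i
    can be mapped back to the tags of the i-th document.
    Assumption: no token contains whitespace or a newline.
    """
    tags_by_line = []
    with open(path, "w", encoding="utf-8") as fout:
        for doc in docs:
            fout.write(" ".join(doc.words) + "\n")
            tags_by_line.append(list(doc.tags))
    return tags_by_line


if __name__ == "__main__":
    # Tiny made-up corpus, for illustration only.
    sample_docs = [
        TaggedDoc(words=["hello", "world"], tags=["doc_a"]),
        TaggedDoc(words=["gensim", "doc2vec", "training"], tags=["doc_b"]),
    ]
    tags = write_corpus_file(sample_docs, "corpus.txt")
    print(tags)
```

The resulting path would then be passed as corpus_file to build_vocab and train in place of the documents argument. As far as I can tell, Gensim also ships a helper for writing this format (gensim.utils.save_as_line_sentence), which may be preferable if it is available in the installed version.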