I'm porting a Processing script to Python so I can use it in a GIMP-Python script. I'm having trouble with `strokeWeight(0.3)`, which means a stroke thickness of less than 1 pixel.

I have followed suggestions to blend the RGB colors with alpha, but that didn't work; Processing seems to be doing something else. I tried to look up the `strokeWeight` function in the Processing source code, but I couldn't really make sense of it. I also tried comparing the color values produced with a `strokeWeight` of 1 against those produced with a `strokeWeight` of 0.3, but I couldn't make sense of that either.
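The alpha-blend suggestion amounts to treating the fractional stroke weight as a constant opacity and compositing each point over the existing pixel. Below is a minimal Python sketch of that idea. `blend_with_alpha` is a hypothetical helper, and equating a weight of 0.3 with an opacity of 0.3 is exactly the assumption in question, not a description of what Processing does:

```python
def blend_with_alpha(dst, src, alpha):
    """Composite colour src over colour dst with a constant opacity.

    dst, src: (r, g, b) tuples with channels in 0..255.
    alpha: opacity in [0, 1]; here (by assumption) the stroke weight itself.
    Uses plain "source over" blending: out = src * alpha + dst * (1 - alpha).
    """
    return tuple(round(s * alpha + d * (1.0 - alpha)) for s, d in zip(src, dst))


# Assumed mapping: strokeWeight(0.3) treated as 30% opacity on a black buffer.
print(blend_with_alpha((0, 0, 0), (200, 100, 50), 0.3))  # (60, 30, 15)
```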

Does anybody know what Processing does when `strokeWeight` is set to 0.3, and how one would simulate it?
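The following sketch is one plausible model to test against Processing's output. It rests on an unverified assumption: that an antialiased renderer draws `point()` as a filled circle whose diameter equals the stroke weight, and shades each pixel by the fraction of the pixel's area the circle covers. The helper names are hypothetical, and coverage is estimated by sampling a grid inside each pixel. Under this model a dot of diameter 0.3 covers only about π·0.15² ≈ 7% of a pixel, far less than a flat 30% opacity:

```python
import math


def dot_coverage(x, y, weight, px, py, samples=16):
    """Estimate the fraction of pixel (px, py) covered by a round dot.

    Model (assumption): point(x, y) is a filled circle of diameter `weight`,
    and pixel (px, py) spans the square [px, px + 1) x [py, py + 1).
    Coverage is estimated on a samples x samples grid of sub-pixel centres.
    """
    r2 = (weight / 2.0) ** 2
    hits = 0
    for i in range(samples):
        for j in range(samples):
            sx = px + (i + 0.5) / samples
            sy = py + (j + 0.5) / samples
            if (sx - x) ** 2 + (sy - y) ** 2 <= r2:
                hits += 1
    return hits / float(samples * samples)


def draw_thin_point(pixels, x, y, color, weight=0.3, samples=16):
    """Blend `color` into `pixels` (rows of [r, g, b] lists) around (x, y),
    using each touched pixel's estimated coverage as its alpha."""
    h, w = len(pixels), len(pixels[0])
    r = weight / 2.0
    # Visit every pixel that the dot's bounding box overlaps.
    for py in range(int(math.floor(y - r)), int(math.floor(y + r)) + 1):
        for px in range(int(math.floor(x - r)), int(math.floor(x + r)) + 1):
            if 0 <= px < w and 0 <= py < h:
                a = dot_coverage(x, y, weight, px, py, samples)
                dst = pixels[py][px]
                for k in range(3):
                    dst[k] = color[k] * a + dst[k] * (1.0 - a)


# Usage: a 3x3 black buffer with one white point in the middle pixel.
pixels = [[[0.0, 0.0, 0.0] for _ in range(3)] for _ in range(3)]
draw_thin_point(pixels, 1.5, 1.5, (255, 255, 255))
print(pixels[1][1])  # [15.9375, 15.9375, 15.9375], not 0.3 * 255 = 76.5
```

Where the dot lands relative to pixel centers also matters. Under this model, a point at integer coordinates such as `(1, 1)` sits on a pixel corner and spreads over four pixels, whereas `(1.5, 1.5)` falls inside a single pixel. Which convention Processing's renderer actually uses is another assumption to check against the test images.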

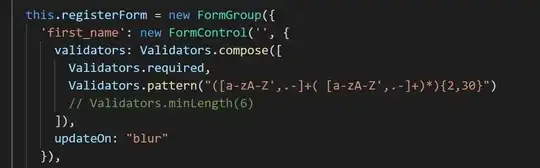

The script I am using is:

```java
String filename = "image2";
String fileext = ".jpg";
String foldername = "./";

// run, after 30 iterations result will be saved automatically
// or press SPACE

int max_display_size = 800; // viewing window size (regardless of image size)

/////////////////////////////////////

int n=2000;
float [] cx=new float[n];
float [] cy=new float[n];

PImage img;
int len;

// working buffer
PGraphics buffer;

String sessionid;

void setup() {
  sessionid = hex((int)random(0xffff),4);
  img = loadImage(foldername+filename+fileext);

  buffer = createGraphics(img.width, img.height);
  buffer.beginDraw();
  //buffer.noFill();
  //buffer.smooth(8);
  //buffer.strokeWeight(1);
  buffer.strokeWeight(0.3);
  buffer.background(0);
  buffer.endDraw();

  size(300,300);

  len = (img.width<img.height?img.width:img.height)/6;

  background(0);

  for (int i=0; i<n; i++) {
    cx[i] = i; // changed to a non-random number for testing
    cy[i] = i;
    //for (int i=0;i<n;i++) {
    //  cx[i]=random(img.width);
    //  cy[i]=random(img.height);
  }
}

int tick = 0;

void draw() {
  buffer.beginDraw();
  for (int i=1;i<n;i++) {
    color c = img.get((int)cx[i], (int)cy[i]);
    buffer.stroke(c);
    buffer.point(cx[i], cy[i]);

    // you can choose channels: red(c), blue(c), green(c), hue(c), saturation(c) or brightness(c)
    cy[i]+=sin(map(hue(c),0,255,0,TWO_PI));
    cx[i]+=cos(map(hue(c),0,255,0,TWO_PI));
  }

  if (frameCount>len) {
    frameCount=0;
    println("iteration: " + tick++);

    //for (int i=0;i<n;i++) {
    //  cx[i]=random(img.width);
    //  cy[i]=random(img.height);
    for (int i=0; i<n; i++) {
      cx[i] = i; // changed to a non-random number for testing
      cy[i] = i;
    }
  }
  buffer.endDraw();

  if(tick == 30) keyPressed();

  image(buffer,0,0,width,height);
}

void keyPressed() {
  buffer.save(foldername + filename + "/res_" + sessionid + hex((int)random(0xffff),4)+"_"+filename+fileext);
  println("image saved");
}
```
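On the Python side, the particle update in `draw()` might be ported roughly as follows. This is a sketch under stated assumptions. `processing_hue` approximates Processing's `hue()` under the default `colorMode(RGB, 255)` using the standard `colorsys` module. `get_color` is a hypothetical stand-in for `img.get()`, for example a lookup into GIMP pixel data, and it must return black for out-of-range coordinates as `img.get()` does. Drawing the returned points is left to whichever thin-point model is being tested:

```python
import colorsys
import math


def processing_hue(rgb):
    """Approximate Processing's hue(c) in the default colorMode(RGB, 255).

    Returns a value in [0, 255). colorsys gives hue in [0, 1), which is
    scaled by 255 here (assumed to match Processing's scaling).
    """
    r, g, b = (v / 255.0 for v in rgb)
    h, _s, _v = colorsys.rgb_to_hsv(r, g, b)
    return h * 255.0


def step(cx, cy, get_color):
    """One pass of the loop in draw(): record each point, then move it.

    cx, cy: lists of float coordinates, updated in place.
    get_color: hypothetical callable (x, y) -> (r, g, b), replacing img.get().
    Returns the (x, y, color) triples that draw() would pass to point().
    """
    plotted = []
    for i in range(1, len(cx)):  # the sketch's loop starts at i = 1
        c = get_color(int(cx[i]), int(cy[i]))  # (int) truncation, as in Java
        plotted.append((cx[i], cy[i], c))
        # map(hue(c), 0, 255, 0, TWO_PI)
        angle = processing_hue(c) / 255.0 * 2.0 * math.pi
        cy[i] += math.sin(angle)
        cx[i] += math.cos(angle)
    return plotted


# Usage with a hypothetical all-red "image": hue is 0, so each particle moves +1 in x.
cx = [0.0, 5.0]
cy = [0.0, 5.0]
points = step(cx, cy, lambda x, y: (255, 0, 0))
print(cx, cy)  # [0.0, 6.0] [0.0, 5.0]
```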

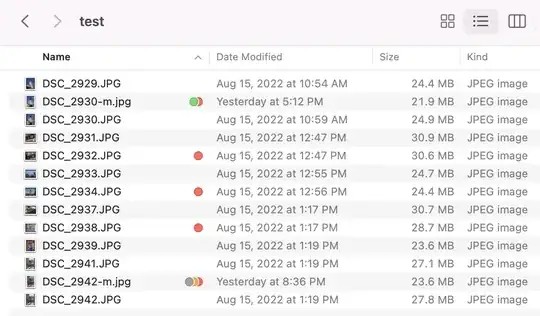

Here are some test results, (scaled 200%):

(it should be noted that this is a fairly straight line for comparison purpose. The actual code uses random coordinates.)