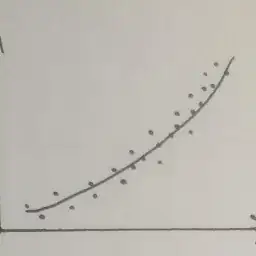

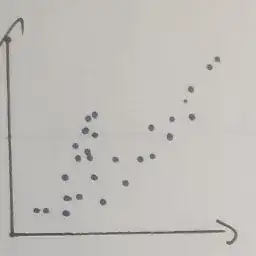

I am currently trying to classify a number of rivers by their behavior. Many of the rivers behave very much like a second-degree polynomial.

However, some rivers have regions where they diverge from this pattern.

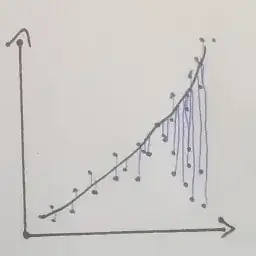

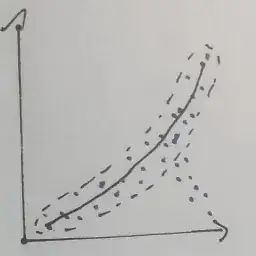

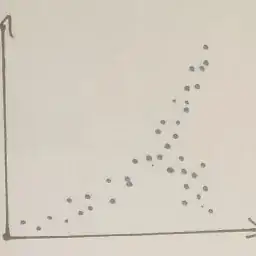

I want to classify them by calculating how far each point lies from the fitted polynomial, so it would look roughly like this:

To do this, though, I have to fit the polynomial only to the points that show "normal" behavior. Otherwise the polynomial is pulled toward the diverging points and the distances come out wrong.

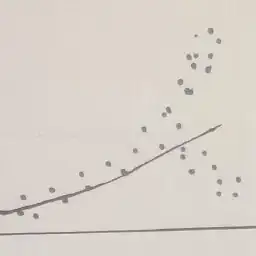
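A minimal sketch of the classification idea described above, assuming that "distance" means the vertical residual `y - p(x)` and that a boolean mask of "normal" points is already known. The helper name `distances_from_fit` and the toy data are hypothetical, chosen only to show the effect: fit on the masked points, then measure every point against that fit.

```python
import numpy as np

def distances_from_fit(x, y, normal_mask, deg=2):
    """Hypothetical helper: fit a polynomial to the points flagged as normal
    and return the vertical distance (residual) of *every* point from it."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    mask = np.asarray(normal_mask, dtype=bool)
    coeffs = np.polyfit(x[mask], y[mask], deg)   # fit only the "normal" points
    return y - np.polyval(coeffs, x)             # residuals for all points

# Toy data (not river data): an exact parabola y = 0.5 x^2 with the last point moved off the trend
x = np.arange(-5.0, 6.0)
y = 0.5 * x**2
y[-1] -= 10.0

all_points = np.ones_like(x, dtype=bool)
without_last = all_points.copy()
without_last[-1] = False                         # exclude the diverging point from the fit

print(np.abs(distances_from_fit(x, y, all_points)).round(2))
print(np.abs(distances_from_fit(x, y, without_last)).round(2))
```

With the diverging point excluded, the fit recovers the parabola, so every residual is numerically zero except the moved point's, which is about -10. With all points included, the outlier drags the curve and leaks nonzero residuals onto the normal points, which is exactly the shift described in the question.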

Here is some example data:

```python
x_test = [-150,-140,-130,-120,-110,-100,-90,-80,-70,-60,-50,-40,-30,-20,-10,0,10,20,30,40,50,60,70,70,80,80,90,90,100,100]
y_test = [0.1,0.11,0.2,0.25,0.25,0.4,0.5,0.4,0.45,0.6,0.5,0.5,0.6,0.6,0.7, 0.7,0.65,0.8,0.85,0.8,1,1,1.2,0.8,1.4,0.75,1.4,0.7,2,0.5]
```

I can fit a polynomial to it with NumPy:

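```python
import numpy as np

fit = np.polyfit(x_test, y_test, deg=2, full=True)
polynom = np.poly1d(fit[0])  # fit[0] holds the coefficients
x = np.linspace(min(x_test), max(x_test), 200)  # assumed grid; x was undefined
simulated_data = polynom(x)
```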
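Because the fitting snippet passes `full=True`, `np.polyfit` returns a tuple rather than just the coefficients, which is why it indexes `fit[0]`. A short sketch of unpacking that tuple on the example data; the variable names are illustrative. The second element, the sum of squared residuals, is a quick single-number measure of how well the parabola fits.

```python
import numpy as np

x_test = [-150,-140,-130,-120,-110,-100,-90,-80,-70,-60,-50,-40,-30,-20,-10,0,10,20,30,40,50,60,70,70,80,80,90,90,100,100]
y_test = [0.1,0.11,0.2,0.25,0.25,0.4,0.5,0.4,0.45,0.6,0.5,0.5,0.6,0.6,0.7, 0.7,0.65,0.8,0.85,0.8,1,1,1.2,0.8,1.4,0.75,1.4,0.7,2,0.5]

# With full=True, polyfit returns (coefficients, residuals, rank, singular_values, rcond)
coeffs, ssr, rank, sing_vals, rcond = np.polyfit(x_test, y_test, deg=2, full=True)

polynom = np.poly1d(coeffs)   # equivalent to np.poly1d(fit[0])
print(coeffs)                 # highest power first: [a, b, c] for a*x**2 + b*x + c
print(ssr)                    # sum of squared residuals of the least-squares fit
print(rank)                   # rank of the scaled Vandermonde matrix
```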

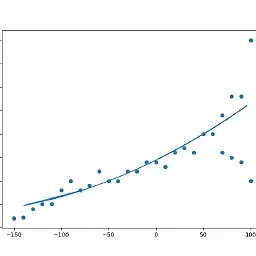

When I plot it, I get the following:

```python
import matplotlib.pyplot as plt

ax = plt.gca()
ax.scatter(x_test, y_test)
ax.plot(x, simulated_data)
plt.show()
```

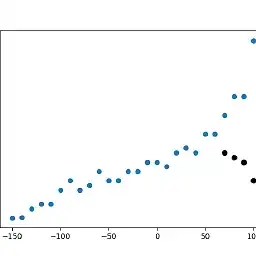

As you can see, the polynomial is pulled slightly downward by the points marked in black here:

Is there a straightforward way to find the points that do not follow the main trend and exclude them when fitting the polynomial?
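One common technique for this, shown here only as a sketch and not as a definitive answer, is iterative outlier rejection (often called sigma clipping): fit, flag points whose residual exceeds `k` robust standard deviations, refit on the remaining points, and repeat until the inlier set stops changing. The helper name `fit_with_clipping`, the threshold `k=2.5`, and the use of `1.4826 * median(|residual|)` as the spread estimate are all assumptions to tune for the river data. Ready-made tools built on related ideas include `astropy.stats.sigma_clip` and scikit-learn's `RANSACRegressor`.

```python
import numpy as np

def fit_with_clipping(x, y, deg=2, k=2.5, max_iter=10):
    """Hypothetical helper: iteratively fit a polynomial and drop points whose
    residual exceeds k robust standard deviations. Returns (coeffs, inlier_mask)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    mask = np.ones(x.shape, dtype=bool)              # start with every point as an inlier
    tol = 1e-9 * max(1.0, np.abs(y).max())          # spread below this: fit treated as exact
    for _ in range(max_iter):
        coeffs = np.polyfit(x[mask], y[mask], deg)
        resid = y - np.polyval(coeffs, x)            # residuals of *all* points, so excluded ones can return
        # Robust spread: 1.4826 * median(|residual|) approximates the std of Gaussian noise
        sigma = 1.4826 * np.median(np.abs(resid[mask]))
        if sigma < tol:
            break                                    # inliers already lie on the curve
        new_mask = np.abs(resid) <= k * sigma
        if new_mask.sum() <= deg or np.array_equal(new_mask, mask):
            break                                    # too few points left, or inlier set is stable
        mask = new_mask
    coeffs = np.polyfit(x[mask], y[mask], deg)       # final fit on the final inlier set
    return coeffs, mask

x_test = [-150,-140,-130,-120,-110,-100,-90,-80,-70,-60,-50,-40,-30,-20,-10,0,10,20,30,40,50,60,70,70,80,80,90,90,100,100]
y_test = [0.1,0.11,0.2,0.25,0.25,0.4,0.5,0.4,0.45,0.6,0.5,0.5,0.6,0.6,0.7, 0.7,0.65,0.8,0.85,0.8,1,1,1.2,0.8,1.4,0.75,1.4,0.7,2,0.5]

coeffs, inliers = fit_with_clipping(x_test, y_test)
print("excluded x:", np.asarray(x_test)[~inliers])
print("excluded y:", np.asarray(y_test)[~inliers])
```

Whether this flags the lower branch at x ≥ 70 (the points marked black) depends on `k` and on the initial all-points fit, so compare the excluded points against the plot before relying on the result. If the diverging points are always on one side of the trend, a one-sided criterion (for example, only rejecting large negative residuals) may be more reliable.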