I have two dataframes mapp and data like as shown below

mapp = pd.DataFrame({'variable': ['d22','Studyid','noofsons','Level','d21'],'concept_id':[1,2,3,4,5]})

data = pd.DataFrame({'sourcevalue': ['d22heartabcd','Studyid','noofsons','Level','d21abcdef']})

I would like fetch a value from data and check whether it is present in mapp, if yes, then get the corresponding concept_id value. The priority is to first look for an exact match. If no match is found, then go for substring match. As I am dealing with more than million records, any scalabale solution is helpful

s = mapp.set_index('variable')['concept_id']

data['concept_id'] = data['sourcevalue'].map(s)

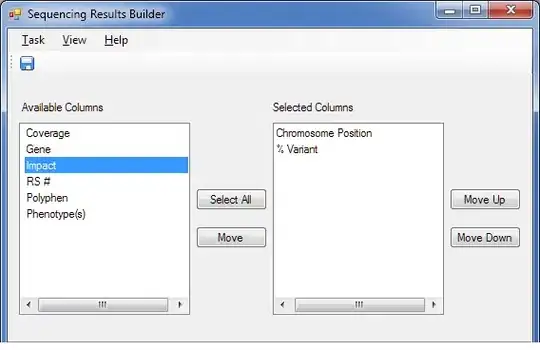

produces an output like below

When I do substring match, valid records also become NA as shown below

data['concept_id'] = data['sourcevalue'].str[:3].map(s)

I don't know why it's giving NA for valid records now

How can I do this two checks at once in an elegant and efficient manner?

I expect my output to be like as shown below