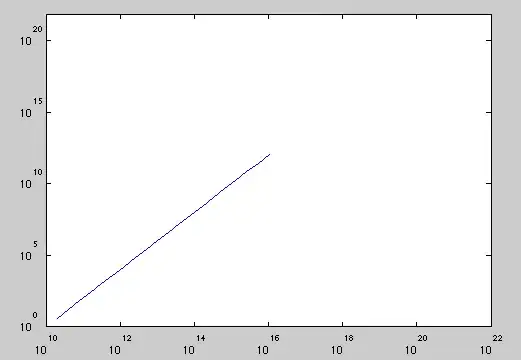

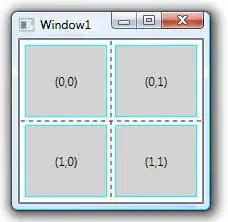

I have configured a flow as follows:

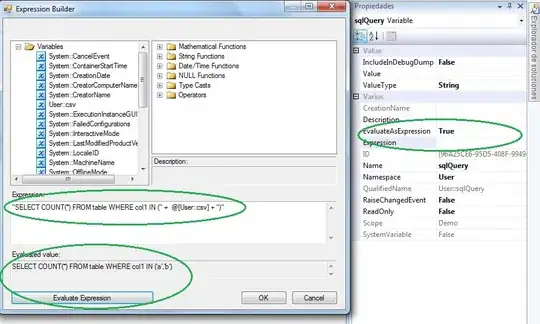

- GetFile
- SplitText -> splitting into flowfiles
- ExtractText -> adding attributes with two keys
- PutDistributedMapCache -> Cache Entry Identifier is ${Key1}_${Key2}

I then configured a sample GenerateFlowFile that generates a single record and sends it to LookupRecord, which uses the record path concat(/Key1,'_',/Key2) to look up the same key in the cache.
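To make the expectation concrete, here is a minimal plain-Python sketch (not NiFi code) of how I understand the two sides to build their keys. A dict stands in for the distributed map cache, and the function names and sample values are hypothetical. The second half shows one possible cause I have not ruled out: if ExtractText captures any extra character, such as a trailing carriage return from the CSV, the cached key no longer equals the lookup key.

```python
# Plain-Python model of the two flows; a dict stands in for the distributed map cache.
# All names and sample values below are hypothetical, for illustration only.

def cache_key(attributes):
    """Mimics the Cache Entry Identifier ${Key1}_${Key2} built from flowfile attributes."""
    return f"{attributes['Key1']}_{attributes['Key2']}"

def lookup_key(record):
    """Mimics the record path concat(/Key1,'_',/Key2) evaluated against a record."""
    return f"{record['Key1']}_{record['Key2']}"

cache = {}

# Caching side: clean attribute values produce the key "9_9".
cache[cache_key({"Key1": "9", "Key2": "9"})] = "some feedback"

# Lookup side: the same values produce the same key, so the lookup succeeds.
matched = lookup_key({"Key1": "9", "Key2": "9"}) in cache

# Assumed failure mode: the extracted attribute carries a stray "\r".
cache[cache_key({"Key1": "7", "Key2": "7\r"})] = "other feedback"

# The lookup key "7_7" is not equal to the cached key "7_7\r", so this misses.
unmatched = lookup_key({"Key1": "7", "Key2": "7"}) in cache
```

The point of the sketch is only that the two key strings must be byte-for-byte identical; whether stray whitespace is what actually happens in my flow is an assumption.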

I suspect the problem is in my caching flow, because when I use a GenerateFlowFile to cache the same records, the lookup works.

With the flow shown above, the lookup does not work. Please help.

PutDistributedMapCache

ExtractText

Lookup flow

LookupRecord Config

I have added four keys in total because that is my business use case.

I have a CSV file with 53 records. I use SplitText to split it into one flowfile per record, then add attributes that act as my key, which I store with PutDistributedMapCache. I have a separate flow that starts with a GenerateFlowFile, which generates a record like this:

So I expect my LookupRecord, which has a JSON reader and a JSON writer, to read this record, look up the key in the distributed cache, and populate the /Feedback field in my record.
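In other words, the behaviour I am expecting is roughly the following. This is a plain-Python sketch rather than NiFi code; the field names Key1 to Key4, the function name, and the sample values are hypothetical stand-ins for my four keys.

```python
# Sketch of the expected lookup outcome; a dict stands in for the distributed cache.
# Field names Key1..Key4 and all sample values are hypothetical.

def lookup_record(record, cache, key_fields=("Key1", "Key2", "Key3", "Key4")):
    """Build the underscore-joined key and return (relationship, record)."""
    key = "_".join(str(record[field]) for field in key_fields)
    value = cache.get(key)
    if value is None:
        # No cache entry for this key: the record is left unchanged.
        return "unmatched", record
    # Cache hit: copy the record and fill in the Feedback field.
    enriched = dict(record)
    enriched["Feedback"] = value
    return "matched", enriched

cache = {"9_9_9_9": "example feedback"}

hit = lookup_record(
    {"Key1": 9, "Key2": 9, "Key3": 9, "Key4": 9, "Feedback": None}, cache
)
miss = lookup_record(
    {"Key1": 1, "Key2": 2, "Key3": 3, "Key4": 4, "Feedback": None}, cache
)
```

Here `hit` is the outcome I expect for a record whose key was cached, and `miss` is what I actually see for every record.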

Instead, the lookup fails and the records are routed to unmatched.

The catch is this: if I remove GetFile and instead use a GenerateFlowFile with this config to populate the cache:

then my lookup works with the key 9_9_9_9. But the moment I add another set of records with different keys, my lookup fails.