I have very high-resolution engineering drawings and circuit diagrams that contain text in a number of different regions. The aim is to extract the text from these images.

I am using pytesseract for this task. Running pytesseract on the whole image does not work, because text from different regions gets jumbled together in the output. Instead, I identify the bounding boxes that contain text and pass each cropped region to pytesseract in turn. The bounding-box logic works fine, but for some cropped images I get no text at all, or only partial text. I would understand this if the cropped images were low-resolution or blurred, but that is not the case. Please have a look at the two attached examples.

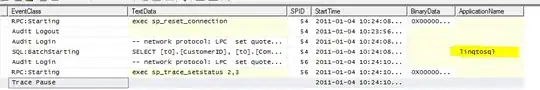

Image 1

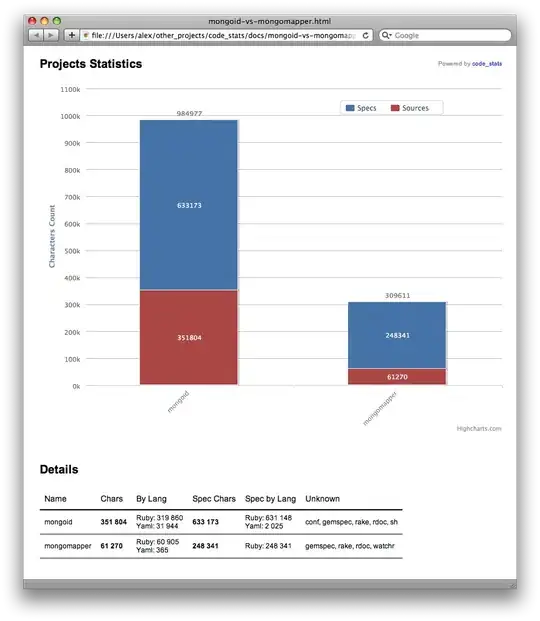

Image 2

Here is my code to get the text:

    import cv2
    import pytesseract

    # Load the image and convert it to grayscale
    source_img_simple = cv2.imread('image_name.tif')
    source_img_simple_gray = cv2.cvtColor(source_img_simple, cv2.COLOR_BGR2GRAY)

    # Run OCR on the grayscale image
    img_text = pytesseract.image_to_string(source_img_simple_gray)

    # Export the text file
    with open('Output_OCR.txt', 'w') as text:
        text.write(img_text)
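
The per-region step described earlier (cropping each bounding box and passing it to pytesseract) can be sketched roughly as below. This is an illustrative sketch rather than the exact code in use: the helper names `crop_regions` and `ocr_regions`, the `(x, y, w, h)` box format, the 10-pixel margin, and the `--psm 6` page segmentation setting are all assumptions made for the example.

```python
import numpy as np


def crop_regions(image, boxes, pad=10):
    """Crop each (x, y, w, h) box from `image`, adding a margin of `pad`
    pixels that is clamped to the image bounds. (Hypothetical helper.)"""
    height, width = image.shape[:2]
    crops = []
    for x, y, w, h in boxes:
        x0, y0 = max(x - pad, 0), max(y - pad, 0)
        x1, y1 = min(x + w + pad, width), min(y + h + pad, height)
        crops.append(image[y0:y1, x0:x1])
    return crops


def ocr_regions(image, boxes, ocr=None, pad=10):
    """Run OCR on every cropped region and return one string per box.

    `ocr` is any callable that maps an image array to text. When it is
    omitted, pytesseract is used with `--psm 6` (treat the crop as a single
    uniform block of text), which is an assumption, not a known fix.
    """
    if ocr is None:
        import pytesseract  # imported lazily so crop_regions works without it

        def ocr(crop):
            return pytesseract.image_to_string(crop, config="--psm 6")

    return [ocr(crop).strip() for crop in crop_regions(image, boxes, pad)]
```

With a grayscale image loaded as in the snippet above, a call would look like `ocr_regions(source_img_simple_gray, boxes)`, where `boxes` is the list produced by the bounding-box step.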

Actual result: for the first image I get no output (a blank text file). For the second image I get only partial text (ALL MISCELLANEOUS PIPING AND CONNECTION SIZES).

How can I improve the quality of the OCR? I'm open to using tools other than pytesseract if required, but I can't use hosted APIs (Google, AWS, etc.) because of a restriction.

Note: I have gone through the post below, and it is not a duplicate of my case, as I have black text on a white background: