In MATLAB, I am trying to vectorise my code to reduce simulation time. However, the vectorised version turned out to be slower overall.

To understand why, I wrote three functions that produce the same result using different approaches:

The main file:

    clc,
    clear,

    n = 10000;
    Value = cumsum(ones(1,n));

    NbLoop = 10000;
    time01 = zeros(1,NbLoop);
    time02 = zeros(1,NbLoop);
    time03 = zeros(1,NbLoop);

    for test = 1 : NbLoop
        tic
        vector1 = function01(n,Value);
        time01(test) = toc ;

        tic
        vector2 = function02(n,Value);
        time02(test) = toc ;

        tic
        vector3 = function03(n,Value);
        time03(test) = toc ;
    end

    figure(1)
    hold on
    plot( time01, 'b')
    plot( time02, 'g')
    plot( time03, 'r')

The function 01:

    function vector = function01(n,Value)
        vector = zeros( 2*n,1);
        for k = 1:n
            vector(2*k -1) = Value(k);
            vector(2*k) = Value(k);
        end
    end

The function 02:

    function vector = function02(n,Value)
        vector = zeros( 2*n,1);
        vector(1:2:2*n) = Value;
        vector(2:2:2*n) = Value;
    end

The function 03:

    function vector = function03(n,Value)
        MatrixTmp = transpose([Value(:), Value(:)]);
        vector = MatrixTmp(:);
    end

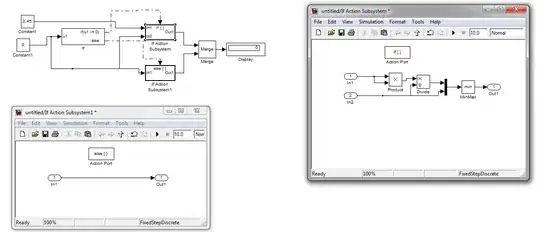

The blue plot corresponds to the for loop (function 01); green is function 02 and red is function 03.

n = 100:

n = 10000:

When I run the code with n = 100, the most efficient solution is the first function, with the for loop.

When n = 10000, the first function becomes the least efficient.
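As a cross-check on the single tic/toc measurements, the same comparison could be sketched with `timeit`, which calls each function several times and returns a typical run time. This is only a minimal sketch, not a definitive benchmark: the names `interleaveLoop`, `interleaveStride` and `interleaveMatrix` are hypothetical local copies of function01, function02 and function03, and I am assuming R2016b or later so that local functions are allowed in a script.

```matlab
% Sketch: compare the three variants with timeit instead of single tic/toc calls.
% Assumes R2016b or later (local functions at the end of a script).
n = 10000;
Value = 1:n;                                   % same contents as cumsum(ones(1,n))

% timeit takes a function handle and returns a typical run time in seconds.
tLoop   = timeit(@() interleaveLoop(n, Value));
tStride = timeit(@() interleaveStride(n, Value));
tMatrix = timeit(@() interleaveMatrix(Value));

fprintf('loop:   %.3g s\nstride: %.3g s\nmatrix: %.3g s\n', tLoop, tStride, tMatrix);

function vector = interleaveLoop(n, Value)     % hypothetical copy of function01
    vector = zeros(2*n, 1);
    for k = 1:n
        vector(2*k-1) = Value(k);
        vector(2*k)   = Value(k);
    end
end

function vector = interleaveStride(n, Value)   % hypothetical copy of function02
    vector = zeros(2*n, 1);
    vector(1:2:2*n) = Value;
    vector(2:2:2*n) = Value;
end

function vector = interleaveMatrix(Value)      % hypothetical copy of function03
    MatrixTmp = transpose([Value(:), Value(:)]);
    vector = MatrixTmp(:);
end
```

Changing `n` to 100 in the first line would repeat the small-array case with the same script.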

- Is there a way to know how and when to replace a for loop with a vectorised counterpart?

- What is the cost of indexing into very large arrays?

- Does MATLAB handle arrays with three or more dimensions differently from arrays with one or two dimensions?

- Is there a clever way to replace a while loop that uses the result of one iteration in the next iteration?