I am trying to plot the decision boundaries of the Iris data set using LDA in scikit-learn (Python), based on this documentation.

For two-dimensional data, we can easily plot the boundary lines using `LDA.coef_` and `LDA.intercept_`.
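Here is a minimal sketch of that two-dimensional case. For illustration only, it keeps the two petal features and only the versicolor and virginica classes, so the model is binary. The boundary is the set of points where `coef_ · x + intercept_ = 0`, solved for the second coordinate:

```python
import numpy as np
from sklearn import datasets
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis

iris = datasets.load_iris()
# Assumption for illustration: two features (petal length, petal width) and
# two classes (versicolor=1, virginica=2), so this is a binary 2-D problem.
mask = iris.target != 0
X2 = iris.data[mask][:, 2:4]
y2 = iris.target[mask]

clf = LinearDiscriminantAnalysis().fit(X2, y2)
w = np.ravel(clf.coef_)          # one weight per feature, shape (2,)
b = np.ravel(clf.intercept_)[0]  # scalar offset

# Boundary: w[0]*x0 + w[1]*x1 + b = 0, solved for x1
xs = np.linspace(X2[:, 0].min(), X2[:, 0].max(), 50)
ys = -(b + w[0] * xs) / w[1]
```

Passing `xs, ys` to `plt.plot` draws the line over a scatter of `X2`. This works because the weights and the plotted axes live in the same two-feature space.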

But for multidimensional data that has been reduced to two components, `LDA.coef_` and `LDA.intercept_` have more dimensions than the plot. For Iris, `coef_` has shape (3, 4): one row per class and one column per original feature. I don't know how to use them to plot the boundary lines in the 2D reduced-dimension plot.
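A quick check of the shapes, using the same setup as the code in this question, shows why the coefficients cannot be drawn directly on the projected axes. Each row of `coef_` multiplies the four original features, while `X_r2` has only two columns:

```python
import numpy as np
from sklearn import datasets
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis

iris = datasets.load_iris()
X, y = iris.data, iris.target

lda = LinearDiscriminantAnalysis(n_components=2)
X_r2 = lda.fit(X, y).transform(X)

# One row of weights per class, each acting on the 4 ORIGINAL features
print(lda.coef_.shape)       # (3, 4)
print(lda.intercept_.shape)  # (3,)
print(X_r2.shape)            # (150, 2)

# The class scores are computed from 4-D inputs, not from the 2-D projection
print(np.allclose(X @ lda.coef_.T + lda.intercept_, lda.decision_function(X)))  # True
```

The last line confirms that `coef_` and `intercept_` score points in the original 4-D feature space. Plugging projected coordinates into them mixes two different spaces.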

I've tried plotting with only the first two elements of the first row of `LDA.coef_` and the first element of `LDA.intercept_`, but it didn't work.
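For a multiclass linear model, each row of `coef_` gives one class score, and a boundary lies where two class scores tie. In the sketch below, $\mathbf{w}_k$ is row $k$ of `coef_` and $b_k$ is `intercept_[k]`. In the last line, $x_1, x_2$ are the two plotted coordinates and $w_{k,1}, w_{k,2}$ are the matching weights:

```latex
\delta_k(\mathbf{x}) = \mathbf{w}_k^\top \mathbf{x} + b_k, \qquad k \in \{0, 1, 2\}

\text{boundary between } i \text{ and } j:\quad (\mathbf{w}_i - \mathbf{w}_j)^\top \mathbf{x} + (b_i - b_j) = 0

\text{in 2-D:}\quad x_2 = -\frac{(b_i - b_j) + (w_{i,1} - w_{j,1})\, x_1}{w_{i,2} - w_{j,2}}
```

Setting only $\delta_0(\mathbf{x}) = 0$, as happens when row 0 is used on its own, does not describe a boundary between any pair of classes. The pairwise line also only forms the real boundary on the segment where classes $i$ and $j$ both outscore the third class. For two classes, scikit-learn stores the difference $\mathbf{w}_1 - \mathbf{w}_0$ as a single row, which is why the binary case plots directly. In every case, $\mathbf{x}$ must live in the same space the weights were fitted on.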

```python
import matplotlib.pyplot as plt
import numpy as np
from sklearn import datasets
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis

iris = datasets.load_iris()
X = iris.data            # 150 samples x 4 features
y = iris.target
target_names = iris.target_names

# Fit LDA on the 4-D data and project it onto 2 discriminant components
lda = LinearDiscriminantAnalysis(n_components=2)
X_r2 = lda.fit(X, y).transform(X)

# Attempted boundary: row 0 of coef_ (class 0), first two of its 4 weights
x = np.array([-10, 10])
y_hyperplane = -1 * (lda.intercept_[0] + x * lda.coef_[0][0]) / lda.coef_[0][1]

plt.figure()
colors = ['navy', 'turquoise', 'darkorange']
lw = 2

plt.plot(x, y_hyperplane, 'k')

# Scatter the projected points, one color per class
for color, i, target_name in zip(colors, [0, 1, 2], target_names):
    plt.scatter(X_r2[y == i, 0], X_r2[y == i, 1], alpha=.8, color=color,
                lw=lw, label=target_name)

plt.legend(loc='best', shadow=False, scatterpoints=1)
plt.title('LDA of IRIS dataset')
plt.show()
```

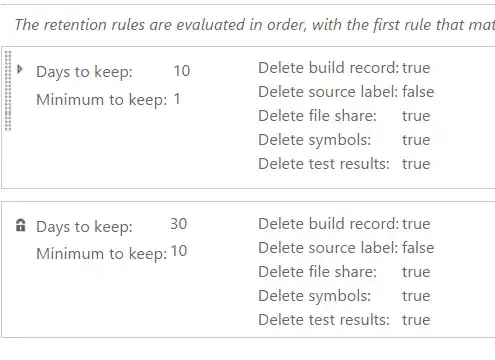

The boundary line produced by `lda.coef_[0]` and `lda.intercept_[0]` does not appear to separate any two of the classes.
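One way to get lines that live in the plotted coordinates is sketched below. It fits a second LDA directly on the projected points `X_r2` and draws its pairwise tie lines. This refit is an assumption, a stand-in used for visualization, and its regions may not coincide exactly with the 4-D model's. The names `lda_2d` and `boundaries` are hypothetical:

```python
import numpy as np
from itertools import combinations
from sklearn import datasets
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis

iris = datasets.load_iris()
X, y = iris.data, iris.target
X_r2 = LinearDiscriminantAnalysis(n_components=2).fit(X, y).transform(X)

# Assumption: refit LDA on the 2-D projected points so coef_ has shape (3, 2)
# and lives in the same coordinates as the scatter plot.
lda_2d = LinearDiscriminantAnalysis().fit(X_r2, y)
W, B = lda_2d.coef_, lda_2d.intercept_

x1 = np.linspace(X_r2[:, 0].min(), X_r2[:, 0].max(), 200)
boundaries = {}
for i, j in combinations(range(3), 2):
    k = 3 - i - j  # the remaining class
    dw, db = W[i] - W[j], B[i] - B[j]
    # Tie line (w_i - w_j) . x + (b_i - b_j) = 0, solved for the 2nd coordinate
    x2 = -(db + dw[0] * x1) / dw[1]
    scores = lda_2d.decision_function(np.column_stack([x1, x2]))
    # Keep only the segment where classes i and j beat the third class;
    # NaN entries make matplotlib leave a gap in the line.
    boundaries[(i, j)] = np.where(scores[:, i] >= scores[:, k], x2, np.nan)
```

Each entry can be drawn with `plt.plot(x1, boundaries[(i, j)], 'k--')` on top of the original scatter plot. Masking the segments avoids drawing tie lines through a region that a third class actually wins.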

I've also tried using `np.meshgrid` to draw the class regions, but I get this error:

```
ValueError: X has 2 features per sample; expecting 4
```

The model expects 4-dimensional points in the original feature space, not the 2D points from the meshgrid.
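The 4-feature model cannot score 2-D grid points. The projection from 4 to 2 dimensions discards information, so a grid point does not correspond to a unique 4-D sample. Below is a minimal sketch of the meshgrid approach that sidesteps this. It assumes, as a visualization stand-in, a second LDA fitted on `X_r2` (the name `lda_2d` is hypothetical). Predictions then come from a model that accepts 2 features:

```python
import numpy as np
import matplotlib.pyplot as plt
from sklearn import datasets
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis

iris = datasets.load_iris()
X, y = iris.data, iris.target
X_r2 = LinearDiscriminantAnalysis(n_components=2).fit(X, y).transform(X)

# Assumption: fit a second LDA on the projected coordinates and predict with it
lda_2d = LinearDiscriminantAnalysis().fit(X_r2, y)

# Grid covering the projected data, with a margin of 1 unit on each side
x_min, x_max = X_r2[:, 0].min() - 1, X_r2[:, 0].max() + 1
y_min, y_max = X_r2[:, 1].min() - 1, X_r2[:, 1].max() + 1
xx, yy = np.meshgrid(np.linspace(x_min, x_max, 300),
                     np.linspace(y_min, y_max, 300))

# Each grid point has 2 features, matching what lda_2d was fitted on
Z = lda_2d.predict(np.c_[xx.ravel(), yy.ravel()]).reshape(xx.shape)

plt.figure()
plt.contourf(xx, yy, Z, alpha=0.3, cmap="viridis")  # shaded class regions
for color, i, name in zip(["navy", "turquoise", "darkorange"], [0, 1, 2],
                          iris.target_names):
    plt.scatter(X_r2[y == i, 0], X_r2[y == i, 1], alpha=.8, color=color,
                label=name)
plt.legend(loc="best")
plt.title("LDA of IRIS dataset")
plt.show()
```

The shaded regions and the scatter share the same projected axes, so the region edges are the 2-D decision boundaries of the refitted model.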