I need to read data from a Postgres server and put it into an array / DataFrame. Each row has a source and a destination field. I need to add these to an `accounts` column cumulatively: as I iterate through the DataFrame, if the source or destination of a row is not already in the accounts column, I need to add it.
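To make the requirement concrete, here is a minimal sketch of the behaviour described above on a toy frame. The data values are made up, and the names `seen`, `ordered`, and `cumulative` are only illustrative; the column names `source`, `destination`, and `accounts` match the ones used in the question.

```python
import pandas as pd

# Toy data standing in for the real table (values are made up).
df = pd.DataFrame({
    "source": ["a", "b", "a", "d"],
    "destination": ["b", "c", "c", "a"],
})

seen = set()      # accounts encountered so far, for fast membership tests
ordered = []      # the same accounts, kept in first-seen order
cumulative = []   # one snapshot of `ordered` per row

for src, dst in zip(df["source"], df["destination"]):
    for account in (src, dst):
        if account not in seen:
            seen.add(account)
            ordered.append(account)
    # Copy the list so that later rows do not mutate earlier snapshots.
    cumulative.append(list(ordered))

df["accounts"] = cumulative
print(df)
```

Each row ends up holding every account seen up to and including that row, which is why the lists keep growing with the number of distinct accounts.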

Here is what my code currently looks like (excluding the Postgres parts for brevity):

    import numpy as np
    import pandas as pd

    # Load the data
    data = pd.read_sql(sql_command, conn)

    # Take a subset of the data until the algorithm is perfected
    np.random.seed(42)
    n = data.shape[0]
    ix = np.random.choice(n, 10000)
    df_tmp = data.iloc[ix].copy()

    # Combine the source and destination into a list in another column
    df_tmp['accounts'] = df_tmp.apply(lambda x: [x['source'], x['destination']], axis=1)

    # Attempt at cumulatively adding accounts to the column
    for index, row in df_tmp.iterrows():
        if 'accounts' not in df_tmp:
            df_tmp['accounts'] = df_tmp.apply(lambda x: [x['accounts'], x['source'], x['destination']], axis=1)
        else:
            df_tmp['accounts'] = df_tmp['accounts']

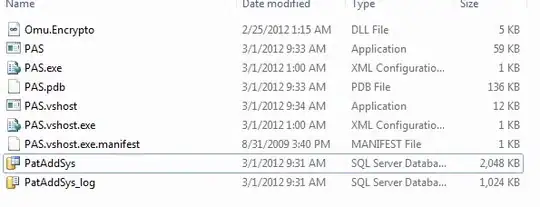

Here is what my data should look like:

Questions:

- Is this the right way to do this?

- The final row will have about 1 million accounts, which would make this very expensive in both time and memory. Is there a more efficient way to represent this?
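One possible compact representation, as a rough sketch only: store each account once, together with the row position where it first appears, plus a per-row count of distinct accounts seen so far. The cumulative list for any row is then just a prefix of the first-seen list. This assumes that only the order of first appearance matters. The names `first_seen`, `n_accounts`, and `accounts_up_to` are hypothetical, and the toy data is made up.

```python
import numpy as np
import pandas as pd

# Toy data standing in for the real table (values are made up).
df = pd.DataFrame({
    "source": ["a", "b", "a", "d"],
    "destination": ["b", "c", "c", "a"],
})

# Interleave the two columns row by row: s0, d0, s1, d1, ...
flat = df[["source", "destination"]].to_numpy().ravel()
# Row position (not index label) that each flattened entry came from.
row_of = np.repeat(np.arange(len(df)), 2)

long = pd.DataFrame({"row": row_of, "account": flat})

# Keep only the first occurrence of each account; rows stay in ascending order.
first_seen = long.drop_duplicates("account", keep="first").reset_index(drop=True)

# For each row i, count how many accounts were first seen at a row <= i.
n_accounts = np.searchsorted(
    first_seen["row"].to_numpy(), np.arange(len(df)), side="right"
)
df["n_accounts"] = n_accounts


def accounts_up_to(i):
    """Rebuild the cumulative account list for row position i on demand."""
    return first_seen["account"].iloc[: n_accounts[i]].tolist()


print(first_seen)
print(accounts_up_to(2))
```

With this layout the storage grows with the number of distinct accounts rather than with rows times accounts, and a full list is only materialised for the rows that are actually inspected.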