I'm developing a Flutter plugin for Android and iOS that uses the Flutter camera plugin. First, I stream frames from the plugin using this method:

    controller.startImageStream((CameraImage img) {
      runlOnFrame(
        // One byte array per image plane.
        bytesList: img.planes.map((plane) {
          return plane.bytes;
        }).toList(),
        imageHeight: img.height,
        imageWidth: img.width,
      );
    });

The runlOnFrame method is implemented in Java and converts the YUV bytes to a bitmap in RGBA format like this:

Function that reads the arguments:

    void getArgs(HashMap args) {
        // Each entry holds the raw bytes of one image plane.
        List<byte[]> bytesList = (List<byte[]>) args.get("bytesList");
        int imageHeight = (int) (args.get("imageHeight"));
        int imageWidth = (int) (args.get("imageWidth"));
    }

    void runOnFrame(List<byte[]> bytesList, int imageHeight, int imageWidth) throws IOException {
        int rotation = 90;

        ByteBuffer Y = ByteBuffer.wrap(bytesList.get(0));
        ByteBuffer U = ByteBuffer.wrap(bytesList.get(1));
        ByteBuffer V = ByteBuffer.wrap(bytesList.get(2));

        int Yb = Y.remaining();
        int Ub = U.remaining();
        int Vb = V.remaining();

        // Concatenate the planes in Y, V, U order into the buffer passed on as NV21.
        byte[] data = new byte[Yb + Ub + Vb];
        Y.get(data, 0, Yb);
        V.get(data, Yb, Vb);
        U.get(data, Yb + Vb, Ub);

        Bitmap bitmapRaw = Bitmap.createBitmap(imageWidth, imageHeight, Bitmap.Config.ARGB_8888);
        Allocation bmData = renderScriptNV21ToRGBA888(
                mRegistrar.context(),
                imageWidth,
                imageHeight,
                data);
        bmData.copyTo(bitmapRaw);

        // Rotate the converted bitmap by 90 degrees.
        Matrix matrix = new Matrix();
        matrix.postRotate(rotation);
        Bitmap finalbitmapRaw = Bitmap.createBitmap(bitmapRaw, 0, 0, bitmapRaw.getWidth(), bitmapRaw.getHeight(), matrix, true);

        saveBitm(finalbitmapRaw); // Function to save converted bitmap
    }

    public Allocation renderScriptNV21ToRGBA888(Context context, int width, int height, byte[] nv21) {
        // https://stackoverflow.com/a/36409748
        RenderScript rs = RenderScript.create(context);
        ScriptIntrinsicYuvToRGB yuvToRgbIntrinsic = ScriptIntrinsicYuvToRGB.create(rs, Element.U8_4(rs));

        Type.Builder yuvType = new Type.Builder(rs, Element.U8(rs)).setX(nv21.length);
        Allocation in = Allocation.createTyped(rs, yuvType.create(), Allocation.USAGE_SCRIPT);

        Type.Builder rgbaType = new Type.Builder(rs, Element.RGBA_8888(rs)).setX(width).setY(height);
        Allocation out = Allocation.createTyped(rs, rgbaType.create(), Allocation.USAGE_SCRIPT);

        in.copyFrom(nv21);

        yuvToRgbIntrinsic.setInput(in);
        yuvToRgbIntrinsic.forEach(out);
        return out;
    }

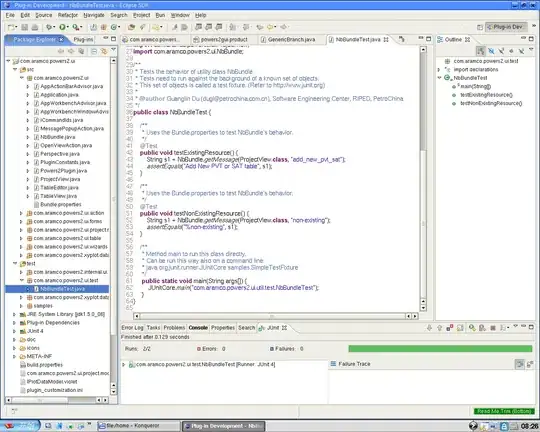

The problem is i'm getting green bitmap images like this:
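
For reference, a tightly packed NV21 frame consists of a full-resolution Y plane followed by interleaved V and U samples, one pair per 2x2 pixel block, which comes to `width * height * 3 / 2` bytes for even dimensions. A minimal sketch of a size check against that layout, assuming no row padding; the class `Nv21Layout` and its methods are hypothetical names for illustration and are not part of the camera plugin or RenderScript:

```java
// Hypothetical helper for illustration only; assumes a tightly packed NV21 buffer (no row padding).
final class Nv21Layout {
    private Nv21Layout() {}

    /** Bytes in the full-resolution Y plane. */
    static int ySize(int width, int height) {
        return width * height;
    }

    /** Bytes in the interleaved V/U section: one V byte and one U byte per 2x2 pixel block. */
    static int vuSize(int width, int height) {
        return 2 * ((width + 1) / 2) * ((height + 1) / 2);
    }

    /** Total bytes a tightly packed NV21 frame of this size occupies. */
    static int expectedSize(int width, int height) {
        return ySize(width, height) + vuSize(width, height);
    }

    /** True when a buffer of actualLength bytes matches the tightly packed NV21 size. */
    static boolean matches(int width, int height, int actualLength) {
        return actualLength == expectedSize(width, height);
    }
}
```

Calling `Nv21Layout.matches(imageWidth, imageHeight, data.length)` inside `runOnFrame` would show whether the concatenated planes have the size this layout implies. A mismatch would suggest that the planes carry padding or interleaving that plain concatenation does not account for.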