I'm developing a Django app. Use-case scenario is this:

50 users, each one can store up to 300 time series and each time serie has around 7000 rows.

Each user can ask at any time to retrieve all of their 300 time series and ask, for each of them, to perform some advanced data analysis on the last N rows. The data analysis cannot be done in SQL but in Pandas, where it doesn't take much time... but retrieving 300,000 rows in separate dataframes does!

Users can also ask results of some analysis that can be performed in SQL (like aggregation+sum by date) and that is considerably faster (to the point where I wouldn't be writing this post if that was all of it).

Browsing and thinking around, I've figured storing time series in SQL is not a good solution (read here).

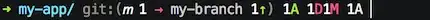

Ideal deploy architecture looks like this (each bucket is a separate server!):

Problem: time series in SQL are too slow to retrieve in a multi-user app.

Researched solutions (from this article):

Arctic: https://github.com/manahl/arctic

Here are some problems:

1) Although these solutions are massively faster for pulling millions of rows time series into a single dataframe, I might need to pull around 500.000 rows into 300 different dataframes. Would that still be as fast?

This is the current db structure I'm using:

class TimeSerie(models.Model):

...

class TimeSerieRow(models.Model):

date = models.DateField()

timeserie = models.ForeignKey(timeserie)

number = ...

another_number = ...

And this is the bottleneck in my application:

for t in TimeSerie.objects.filter(user=user):

q = TimeSerieRow.objects.filter(timeserie=t).orderby("date")

q = q.filter( ... time filters ...)

df = pd.DataFrame(q.values())

# ... analysis on df

2) Even if PyStore or Arctic can do that faster, that'd mean I'd loose the ability to decouple my db from the Django instances, effictively using resources of one machine way better, but being stuck to use only one and not being scalable (or use as many separate databases as machines). Can PyStore/Arctic avoid this and provide an adapter for remote storage?

Is there a Python/Linux solution that can solve this problem? Which architecture I can use to overcome it? Should I drop the scalability of my app and/or accept that every N new users I'll have to spawn a separate database?