I am installing Spark on my machine, which runs Windows Server 2008 R2. I followed the steps below.

- Installed the JDK

- Added the JDK to the environment variables

- Added winutils as HADOOP_HOME in the environment variables

- Downloaded Spark and configured the environment variable SPARK_HOME
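The setup steps above can be sanity-checked with a small script. This is only a sketch under assumptions: Python 3 is available on the server, the JDK variable is named `JAVA_HOME` (the steps above do not name it), and each directory is expected to contain `bin\java.exe`, `bin\winutils.exe`, or `bin\spark-shell.cmd` respectively. `check_spark_env` is a hypothetical helper name, not part of Spark.

```python
import os

# Variable name -> file expected under that directory.
# The names and layout here are assumptions about a typical Windows setup.
EXPECTED = {
    "JAVA_HOME": os.path.join("bin", "java.exe"),
    "HADOOP_HOME": os.path.join("bin", "winutils.exe"),
    "SPARK_HOME": os.path.join("bin", "spark-shell.cmd"),
}


def check_spark_env(env=None):
    """Return a list of problems found; an empty list means every check passed."""
    env = os.environ if env is None else env
    problems = []
    for name, rel_path in EXPECTED.items():
        home = env.get(name)
        if not home:
            problems.append(f"{name} is not set")
            continue
        target = os.path.join(home, rel_path)
        if not os.path.isfile(target):
            problems.append(f"{name}: expected file not found: {target}")
    return problems


if __name__ == "__main__":
    # Print each problem, or a single confirmation line if there are none.
    for line in check_spark_env() or ["all checks passed"]:
        print(line)
```

Run from a new command prompt, the script reports whether each variable is visible to that session and points at the expected file. Variables changed in the system settings are only picked up by command prompts opened afterwards.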

I have not installed Scala.

When I try to launch Spark from the command line, I get the error below.

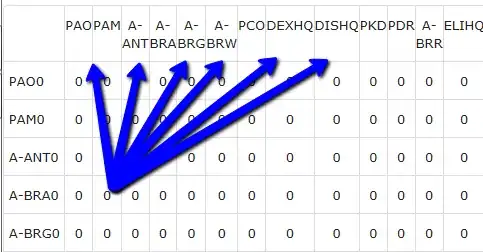

How can I fix this? I have an admin account on this server, and I set the paths in the system variables.