I'm new to data science and especially to modeling neural networks in RStudio.

I'm trying to do multiclass classification with a simple Keras dense network that predicts 5 classes. My dataset has 12 input indicators for almost 35k instances (12 x 34961). When I use the `fit` function to train the model on 80% of the data over 100 epochs, the loss barely declines (1.57 to 1.55) and the accuracy stays flat at 0.26.

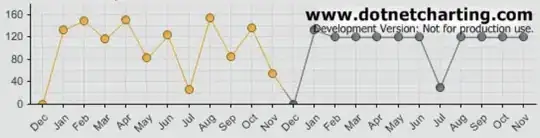
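For reference, the loss and accuracy of a model that ignores its inputs can be worked out by hand. The sketch below is base R only and rests on one assumption that I have to flag: it treats the values in the commented-out `class_weight` line of my code as `max(count) / count`, so the class proportions are recovered as the normalized inverse weights.

```r
# Uniform guess over k classes: the cross-entropy is -log(1/k)
k <- 5
uniform_loss <- -log(1 / k)

# ASSUMPTION: these weights are max(count) / count per class (classes 0..4)
w <- c(2.507864, 1.483357, 1.016865, 1, 1.180681)
priors <- (1 / w) / sum(1 / w)   # implied class proportions

# Always predicting the class proportions gives the entropy of the labels
prior_loss <- -sum(priors * log(priors))
# Always predicting the largest class gives its proportion as accuracy
majority_acc <- max(priors)

round(c(uniform = uniform_loss, prior = prior_loss, majority_acc = majority_acc), 3)
```

Under that assumption the prior-only loss comes out at roughly 1.56 and the majority-class accuracy at roughly 0.26, which is where my training curve sits. So the network appears to be predicting the class frequencies and little else.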

So the model is not learning, similar to this, but in my case I don't have a deep LSTM network, and using sigmoid as the activation function in the last layer did not improve the results either.

So far I have tried to play around with:

- using only a (small) subset of the data
- changing "loss" and "optimizer"
- using a much more aggressive learning rate (SGD with lr = 0.3)
- using deeper networks, i.e. 6-7 layers with thousands of neurons
- using the "class_weight" argument to address the slight class imbalance
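For the class weights, one common choice is the inverse class frequency, scaled so that the largest class gets weight 1. A minimal sketch in base R; the label vector `y` is made up for illustration and only stands in for my real training targets:

```r
# Hypothetical integer labels 0..4 (stand-in for the real training targets)
y <- c(0, 1, 1, 2, 2, 2, 2, 3, 3, 3, 3, 4, 4)

counts <- table(y)                            # observations per class
weights <- as.numeric(max(counts) / counts)   # largest class gets weight 1

# Named list keyed by the class index as a string, e.g. list("0" = 4, ...)
class_weight <- setNames(as.list(weights), names(counts))
str(class_weight)
```

The resulting list has the same shape as the one I pass to `class_weight` in `fit()`.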

Here is the core code:

    library(dplyr)  # %>% and mutate()
    library(keras)  # normalize(), to_categorical(), model building

    # Load data with 12 inputs and one output
    summary(data)

    #############
    # Categorise the output into five classes:
    #   L5 = 0-1
    #   L4 = 2-3
    #   L3 = 4-8
    #   L2 = 9-22
    #   L1 = 23+
    #
    # To be safe, the manipulation is done in a separate data frame
    datatest <- data
    names(datatest)[ncol(datatest)] <- "FC_3"

    # Replace the raw counts with class labels
    datatest <- datatest %>%
      mutate(FC_3 = replace(FC_3, FC_3 >= 23, "L1"))
    datatest <- datatest %>%
      mutate(FC_3 = replace(FC_3, (FC_3 >= 4 & FC_3 <= 8), "L3"))
    datatest <- datatest %>%
      mutate(FC_3 = replace(FC_3, FC_3 == 2 | FC_3 == 3, "L4"))
    datatest <- datatest %>%
      mutate(FC_3 = replace(FC_3, FC_3 == 0 | FC_3 == 1, "L5"))
    datatest <- datatest %>%
      mutate(FC_3 = replace(FC_3, FC_3 == 9, "L2"))
    datatest <- datatest %>%
      mutate(FC_3 = replace(FC_3,
                            (FC_3 == 11 | FC_3 == 10 | FC_3 == 12 | FC_3 == 13 |
                             FC_3 == 14 | FC_3 == 15 | FC_3 == 16 | FC_3 == 17 |
                             FC_3 == 18 | FC_3 == 19 | FC_3 == 20 | FC_3 == 21 |
                             FC_3 == 22),
                            "L2"))

    # Replace the class labels with the numbers 1-5
    datatest <- datatest %>%
      mutate(FC_3 = replace(FC_3, FC_3 == "L1", 1))
    datatest <- datatest %>%
      mutate(FC_3 = replace(FC_3, FC_3 == "L2", 2))
    datatest <- datatest %>%
      mutate(FC_3 = replace(FC_3, FC_3 == "L3", 3))
    datatest <- datatest %>%
      mutate(FC_3 = replace(FC_3, FC_3 == "L4", 4))
    datatest <- datatest %>%
      mutate(FC_3 = replace(FC_3, FC_3 == "L5", 5))

    data$ForwardCitations_3 <- as.numeric(datatest$FC_3) - 1
    # Now the last column should be numeric between 0 and 4, representing the actual classes

    ##
    # Begin with multiclass classification
    # Transform data into a matrix without names
    data <- as.matrix(data)
    dimnames(data) <- NULL

    # Normalize the input
    data[, 1:12] <- normalize(data[, 1:12])
    # summary(data)

    # Data partition
    set.seed(1235)
    ind <- sample(2, nrow(data), replace = TRUE, prob = c(.8, .2))
    training <- data[ind == 1, 1:12]
    test <- data[ind == 2, 1:12]
    trainingtarget <- data[ind == 1, 13]
    testtarget <- data[ind == 2, 13]

    # One-hot encoding
    trainLabels <- to_categorical(trainingtarget)
    testlabels <- to_categorical(testtarget)

    # Create the sequential model
    model <- keras_model_sequential()
    model %>%
      layer_dense(units = 48, activation = 'relu', input_shape = c(12)) %>%
      # layer_dropout(rate = 0.25) %>%
      # layer_dense(units = 128, activation = 'relu') %>%
      # layer_dropout(rate = 0.16) %>%
      # layer_dense(units = 32, activation = 'relu') %>%
      # layer_dropout(rate = 0.8) %>%
      # layer_dense(units = 16, activation = 'relu') %>%
      # layer_dropout(rate = 0.8) %>%
      # layer_dense(units = 1024, activation = 'relu') %>%
      layer_dense(units = 5, activation = 'softmax')

    # Compile
    model %>%
      compile(loss = 'categorical_crossentropy',
              optimizer = 'adam',
              metrics = 'accuracy')

    # Fit model
    history <- model %>%
      fit(training,
          trainLabels,
          epochs = 100,
          batch_size = 32,
          validation_split = .2
          # class_weight = list("0" = 2.507864, "1" = 1.483357, "2" = 1.016865, "3" = 1, "4" = 1.180681)
      )

    # plot(history)
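The binning step in the code above can probably be written more compactly with base R's `cut()`. This is only a sketch, under the assumption that the output values are non-negative integers; `bin_fc` is a hypothetical helper name and not part of my script.

```r
# Map raw counts to class indices 0..4 (L1 = 0, L2 = 1, ..., L5 = 4)
bin_fc <- function(x) {
  # Right-closed intervals: (-Inf,1], (1,3], (3,8], (8,22], (22,Inf]
  code <- cut(x, breaks = c(-Inf, 1, 3, 8, 22, Inf), labels = FALSE)
  # code 1 (0-1) is L5 and becomes class 4; code 5 (23+) is L1 and becomes class 0
  5 - code
}

bin_fc(c(0, 1, 2, 3, 4, 8, 9, 22, 23, 100))
```

Unlike the chain of `replace()` calls, this keeps the column numeric throughout instead of mixing numbers and strings in one column.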

I don't know what the problem is, whether my code is actually doing what I'm trying to do, or what I should try next.
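One step I am unsure about is the normalization. As far as I understand, keras `normalize()` by default rescales along the last axis (each row to unit L2 norm) rather than standardizing each column. A column-wise alternative with base R's `scale()`, using only training-set statistics, might look like the sketch below; `train_x` and `test_x` are made-up matrices standing in for my real inputs.

```r
set.seed(1)
# Hypothetical stand-ins for the real training/test feature matrices
train_x <- matrix(rnorm(40, mean = 50, sd = 10), nrow = 10, ncol = 4)
test_x  <- matrix(rnorm(20, mean = 50, sd = 10), nrow = 5,  ncol = 4)

# Column means and standard deviations from the training data only
mu  <- colMeans(train_x)
sdv <- apply(train_x, 2, sd)

# Apply the same shift and scale to both sets
train_s <- scale(train_x, center = mu, scale = sdv)
test_s  <- scale(test_x,  center = mu, scale = sdv)

round(colMeans(train_s), 10)       # training columns are centered at 0
round(apply(train_s, 2, sd), 10)   # and have unit standard deviation
```

Computing the statistics on the training rows only keeps information from the test set out of the preprocessing.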

PS: If you need further information I will be happy to provide it.