I am using Quip (quip.com) as our office documentation system.

I want to use Selenium to export all of the documents, but I have run into several problems.

1) Waiting: I only use `time.sleep`, which often causes problems.

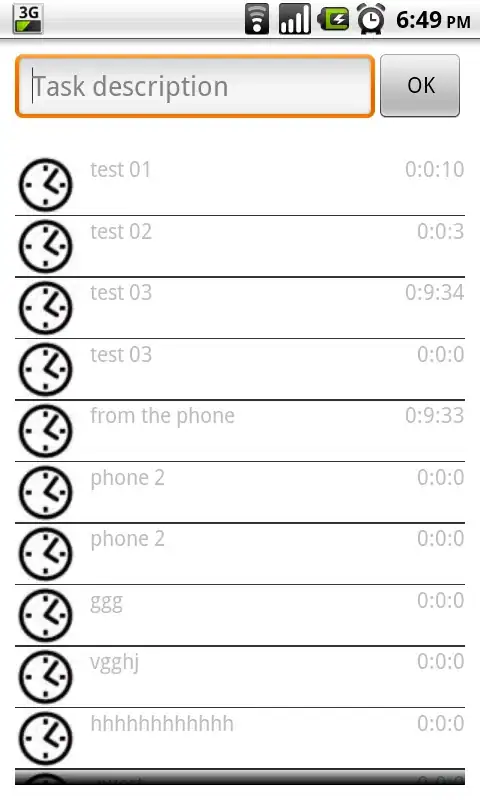

2) Loading: many folders only show all of their documents after you scroll down.

For example, this folder contains a lot of documents, and you have to scroll down before you can get all of their `href` links.

3) Nesting: because the folders are created by people, a folder may contain subfolders, and those subfolders may contain further subfolders.

I am a newbie, so please explain in as much detail as possible.

A test account and password are included in the code.

```python
# -*- coding: utf-8 -*-
import time

from selenium import webdriver
from selenium.webdriver.common.action_chains import ActionChains
from selenium.webdriver.common.by import By

# Configuration information
email = "187069474@qq.com"
password = "Huangbo1019@"


def work_on():
    # Selenium 3 style; Selenium 4.10+ needs webdriver.Chrome(service=Service('drivers/chromedriver72.exe'))
    driver = webdriver.Chrome('drivers/chromedriver72.exe')
    index_url = "https://quip.com/"
    driver.get(url=index_url)

    def get_docs(docs):
        """Open each document and export it as HTML through the document menu."""
        for doc in docs:
            driver.get(doc)
            time.sleep(2)
            driver.find_element(By.XPATH, '//*[@id="app"]/div/div/div/div[3]/div[1]/div[1]/div[1]/div[2]/button[1]').click()  # open the document menu
            time.sleep(2)
            ele = driver.find_element(By.XPATH, '//div[@class="parts-menu-label" and text()="Export"]')  # locate the "Export" item
            ActionChains(driver).move_to_element(ele).perform()  # hover so the Export submenu opens
            time.sleep(2)
            html = driver.find_element(By.XPATH, '//div[@class="parts-menu-label" and text()="HTML"]')
            ActionChains(driver).move_to_element(html).click(html).perform()  # click Export > HTML
            time.sleep(5)  # give the download time to start

    time.sleep(1)
    driver.find_element(By.XPATH, '//*[@id="header-nav-collapse"]/ul/li[9]/a').click()  # click "Log in"
    time.sleep(1)
    driver.find_element(By.XPATH, '/html/body/div[2]/div[1]/div[1]/form/div/input').send_keys(email)  # enter email
    driver.find_element(By.XPATH, '//*[@id="email-submit"]').click()
    time.sleep(1)
    driver.find_element(By.XPATH, '/html/body/div/div/form/div/input[2]').send_keys(password)  # enter password
    driver.find_element(By.XPATH, '/html/body/div/div/form/button').click()
    time.sleep(2)
    driver.find_element(By.XPATH, '//*[@id="app"]/div/div/div/div[1]/div/div/div[3]/div[1]/a[2]/div/div').click()  # open "Files"
    time.sleep(5)
    driver.find_element(By.XPATH, '//*[@id="app"]/div/div/div/div[3]/div[2]/div/div/div/div[1]/div[2]/div[1]/a').click()  # open the "test" folder
    time.sleep(2)

    # Links to the documents and subfolders directly inside this folder
    docs = driver.find_elements(By.CLASS_NAME, 'folder-document-thumbnail')
    docs = [x.get_attribute('href') for x in docs]
    folders = driver.find_elements(By.CLASS_NAME, 'folder-thumbnail')
    folders = [x.get_attribute('href') for x in folders]
    get_docs(docs)

    # Only one level of subfolders is visited here
    for folder in folders:
        driver.get(folder)
        time.sleep(2)
        docs = driver.find_elements(By.CLASS_NAME, 'folder-document-thumbnail')
        docs = [x.get_attribute('href') for x in docs]
        get_docs(docs)

    time.sleep(5)
    driver.quit()  # end the whole browser session, not just the current window


if __name__ == '__main__':
    work_on()
```

The current code only reaches the second level of folders (the starting folder and its direct subfolders).
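One way to reach every level is to walk the folder tree with a queue instead of writing one loop per level. Below is a minimal breadth-first sketch, assuming a hypothetical callback `list_folder(url)` that you would write with Selenium and that returns `(document_links, subfolder_links)` for one folder page. The `visited` set is a precaution in case the same folder is reachable by more than one path; being iterative, it also avoids Python's recursion limit on deep trees.

```python
from collections import deque


def crawl(root_url, list_folder):
    """Breadth-first walk of a folder tree; returns every document link once.

    list_folder(url) is a hypothetical callback (not part of Selenium or Quip)
    that opens a folder page and returns (document_urls, subfolder_urls).
    """
    docs = []
    seen_docs = set()
    visited = {root_url}
    queue = deque([root_url])
    while queue:
        folder = queue.popleft()
        doc_urls, subfolder_urls = list_folder(folder)
        for url in doc_urls:
            if url not in seen_docs:  # skip documents already collected
                seen_docs.add(url)
                docs.append(url)
        for sub in subfolder_urls:
            if sub not in visited:  # queue each folder only once
                visited.add(sub)
                queue.append(sub)
    return docs
```

`list_folder` could wrap the existing link-collecting lines from `work_on` (`driver.get(folder)` followed by the two class-name lookups for `folder-document-thumbnail` and `folder-thumbnail`), and the returned list can be passed straight to `get_docs`.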

I cannot capture all of the document links, because the list only loads more items when you scroll down, and my script does not scroll.
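Lazily loaded lists are commonly handled by scrolling to the bottom, pausing, and repeating until the scroll height stops growing. The sketch below uses only `driver.execute_script`; it assumes the list either scrolls the whole window or lives in a scrollable element you pass as `container`. Which element Quip actually scrolls is not confirmed here, so check it in the browser's developer tools. `scroll_until_loaded` is a hypothetical helper name.

```python
import time


def scroll_until_loaded(driver, container=None, pause=1.0, max_rounds=50):
    """Scroll to the bottom repeatedly until the scroll height stops growing.

    Returns True if the height settled, False if max_rounds was reached first.
    """
    if container is None:
        scroll_js = "window.scrollTo(0, document.body.scrollHeight);"
        height_js = "return document.body.scrollHeight;"
        args = ()
    else:
        # Assumed: the list is inside a scrollable element such as a <div>
        scroll_js = "arguments[0].scrollTop = arguments[0].scrollHeight;"
        height_js = "return arguments[0].scrollHeight;"
        args = (container,)

    last_height = driver.execute_script(height_js, *args)
    for _ in range(max_rounds):
        driver.execute_script(scroll_js, *args)
        time.sleep(pause)  # let the page fetch and render the next batch
        new_height = driver.execute_script(height_js, *args)
        if new_height == last_height:
            return True  # nothing new appeared, so the list is fully loaded
        last_height = new_height
    return False
```

Call it after `driver.get(folder)` and before collecting the `href` values, so the thumbnails for every document are present in the page when you look them up.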

The fixed waits are very painful: when the network is slow, an element has not loaded yet when the script looks for it, and the script fails with an error.

The Quip account provided is only for testing; in production there will be thousands of documents.
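With thousands of documents, a long run is likely to be interrupted at some point, so it helps to record each exported link and to retry transient failures. The sketch below is plain Python: `export_one(url)` is a hypothetical function you supply that exports one document (for example, the body of the `get_docs` loop), and `exported.txt` is an assumed log file name. With Selenium you would pass `retry_on=(WebDriverException,)` from `selenium.common.exceptions`, which also covers `TimeoutException` because it is a subclass.

```python
import os
import time


def export_all(doc_urls, export_one, done_path="exported.txt",
               attempts=3, retry_on=(Exception,), backoff=2.0):
    """Export each URL once, skipping URLs already recorded in done_path.

    Returns the URLs that still failed after `attempts` tries.
    """
    done = set()
    if os.path.exists(done_path):
        with open(done_path, encoding="utf-8") as f:
            done = {line.strip() for line in f if line.strip()}

    failed = []
    with open(done_path, "a", encoding="utf-8") as log:
        for url in doc_urls:
            if url in done:
                continue  # exported in an earlier run
            for attempt in range(1, attempts + 1):
                try:
                    export_one(url)
                except retry_on:
                    if attempt == attempts:
                        failed.append(url)
                    else:
                        time.sleep(backoff * attempt)  # wait longer after each failure
                else:
                    log.write(url + "\n")
                    log.flush()  # save progress now so a crash can resume here
                    done.add(url)
                    break
    return failed
```

On a rerun, links already in the log are skipped, so the script continues where it stopped; the returned list holds the links that need a manual look.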

I hope someone can help improve this code. It would be very helpful to me, and I am truly thankful.