I want to do something which appears simple, but I don't have a good feel for R yet, it is a maze of twisty passages, all different.

I have a table with several variables, and I want to group on two variables ... I want a two-level hierarchical grouping, also known as a tree. This can evidently be done using the group_by function of dplyr.

And then I want to compute marginal statistics (in this case, relative frequencies) based on group counts for level 1 and level 2.

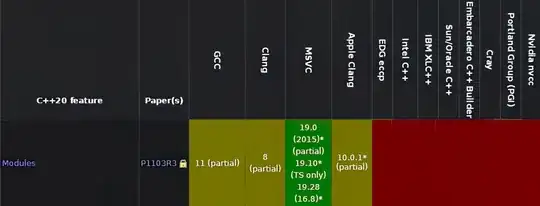

In pictures, given this table of 18 rows:

I want this table of 6 rows:

Is there a simple way to do this in dplyr? (I can do it in SQL, but ...)

Edited for example

For example, based on the nycflights13 package:

library(dplyr)

install.packages("nycflights13")

require(nycflights13)

data(flights) # contains information about flights, one flight per row

ff <- flights %>%

mutate(approx_dist = floor((distance + 999)/1000)*1000) %>%

select(carrier, approx_dist) %>%

group_by(carrier, approx_dist) %>%

summarise(n = n()) %>%

arrange(carrier, approx_dist)

This creates a tbl ff with the number of flights for each pair of (carrier, inter-airport-distance-rounded-to-1000s):

# A tibble: 33 x 3

# Groups: carrier [16]

carrier approx_dist n

<chr> <dbl> <int>

1 9E 1000 15740

2 9E 2000 2720

3 AA 1000 9146

4 AA 2000 17210

5 AA 3000 6373

And now I would like to compute the relative frequencies for the "approx_dist" values in each "carrier" group, for example, I would like to get:

carrier approx_dist n rel_freq

<chr> <dbl> <int>

1 9E 1000 15740 15740/(15740+2720)

2 9E 2000 2720 2720/(15740+2720)