Assume the following:

- Two images were overlaid on top of each other and merged into a single image (call it image Y).

- I have one of the original images (call it image A). It is a semi-transparent mask that was overlaid on the other image. I don't know which mathematical operation was used: it was not a plain addition, but probably a multiplication or an alpha composite.

How do I recover the other original image (image B) from images Y and A? All images are RGBA.

I have tried PIL's `PIL.ImageChops.difference()`, but it does not do the trick, since the operation was not a simple addition.

I also experimented with some code I found, but it does not produce the right result either.

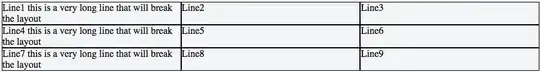

    from PIL import Image, ImageMath

    # pil_im and mask_img are the two RGBA images, loaded elsewhere
    # split RGBA images into 4 channels
    rA, gA, bA, aA = pil_im.split()
    rB, gB, bB, aB = mask_img.split()

    # divide each channel (image1/image2)
    rTmp = ImageMath.eval("int(a/((float(b)+1)/256))", a=rA, b=rB).convert('L')
    gTmp = ImageMath.eval("int(a/((float(b)+1)/256))", a=gA, b=gB).convert('L')
    bTmp = ImageMath.eval("int(a/((float(b)+1)/256))", a=bA, b=bB).convert('L')
    aTmp = ImageMath.eval("int(a/((float(b)+1)/256))", a=aA, b=aB).convert('L')

    # merge channels into RGBA image
    imgOut = Image.merge("RGBA", (rTmp, gTmp, bTmp, aTmp))

- I would like to reverse either a multiplication (with something like a division) or an alpha composite. I assume the problem is solvable: it should be something like linear algebra, solving f(A, B) = Y for B when A and Y are known.

- What is the correct name of my problem?
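
To make the two candidate operations concrete, here is a minimal per-channel sketch of what I think the inversions would look like. It rests on assumptions I cannot verify: channel values normalised to [0, 1], straight (non-premultiplied) alpha, image B fully opaque, and A composited over B. The function names `unmultiply` and `uncomposite` are made up for illustration.

```python
def unmultiply(y, a, eps=1e-6):
    """Invert a multiply blend y = a * b for one channel (values in [0, 1])."""
    if a < eps:
        # The mask zeroed this channel, so b cannot be recovered here.
        return None
    # Clamp, because rounding in y can push the quotient slightly above 1.
    return min(y / a, 1.0)


def uncomposite(y, a, alpha, eps=1e-6):
    """Invert 'A over B' for one colour channel.

    Assumes B is fully opaque, so the forward operation was
    y = alpha * a + (1 - alpha) * b.
    """
    if 1.0 - alpha < eps:
        # The mask is fully opaque here, so b is hidden completely.
        return None
    b = (y - alpha * a) / (1.0 - alpha)
    # Clamp to the valid range to absorb rounding errors.
    return min(max(b, 0.0), 1.0)


# Round trip on a single channel value under each assumption.
b_true = 0.4
y_mul = 0.8 * b_true                       # forward multiply with a = 0.8
y_over = 0.25 * 0.8 + (1 - 0.25) * b_true  # forward 'over' with alpha = 0.25
b_from_mul = unmultiply(y_mul, 0.8)
b_from_over = uncomposite(y_over, 0.8, 0.25)
```

In both cases B cannot be recovered where the mask destroys the information (A = 0 for a multiply, alpha = 1 for a composite), which is why the functions return `None` there.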