I am new to TensorFlow and wanted to understand the Keras LSTM layer, so I wrote this test program to discern the behavior of the stateful option.

    # TensorFlow 1.x version
    import tensorflow as tf
    import numpy as np

    NUM_UNITS = 1
    NUM_TIME_STEPS = 5
    NUM_FEATURES = 1
    BATCH_SIZE = 4
    STATEFUL = True
    STATEFUL_BETWEEN_BATCHES = True

    lstm = tf.keras.layers.LSTM(units=NUM_UNITS, stateful=STATEFUL,
                                return_state=True, return_sequences=True,
                                batch_input_shape=(BATCH_SIZE, NUM_TIME_STEPS, NUM_FEATURES),
                                kernel_initializer='ones', bias_initializer='ones',
                                recurrent_initializer='ones')

    x = tf.keras.Input((NUM_TIME_STEPS, NUM_FEATURES), batch_size=BATCH_SIZE)
    result = lstm(x)

    I = tf.compat.v1.global_variables_initializer()
    sess = tf.compat.v1.Session()
    sess.run(I)

    X_input = np.array([[[3.14*(0.01)] for t in range(NUM_TIME_STEPS)] for b in range(BATCH_SIZE)])
    feed_dict = {x: X_input}

    def matprint(run, mat):
        print('Batch = ', run)
        for b in range(mat.shape[0]):
            print('Batch Sample:', b, ', per-timestep output')
            print(mat[b].squeeze())

    print('BATCH_SIZE = ', BATCH_SIZE, ', T = ', NUM_TIME_STEPS, ', stateful =', STATEFUL)
    if STATEFUL:
        print('STATEFUL_BETWEEN_BATCHES = ', STATEFUL_BETWEEN_BATCHES)

    for r in range(2):
        feed_dict = {x: X_input}
        OUTPUT_NEXTSTATES = sess.run({'result': result}, feed_dict=feed_dict)
        OUTPUT = OUTPUT_NEXTSTATES['result'][0]
        NEXT_STATES = OUTPUT_NEXTSTATES['result'][1:]
        matprint(r, OUTPUT)
        if STATEFUL:
            if STATEFUL_BETWEEN_BATCHES:
                # For TF version 1.x manually re-assigning states from
                # the last batch IS required for some reason ...
                # seems like a bug
                sess.run(lstm.states[0].assign(NEXT_STATES[0]))
                sess.run(lstm.states[1].assign(NEXT_STATES[1]))
            else:
                lstm.reset_states()

Note that the LSTM's weights are set to all ones and the input is constant for consistency.
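
With one unit, one feature, and every weight and bias equal to 1, the layer's recurrence reduces to a scalar one. The following is a sketch under the assumption of the standard LSTM equations with sigmoid gates and tanh activations; the symbols $z_t$, $\sigma$, $i_t$, $f_t$, $o_t$, $\tilde{c}_t$ are notation introduced here and do not appear in the script:

$$
\begin{aligned}
z_t &= x_t + h_{t-1} + 1, \\
i_t = f_t = o_t &= \sigma(z_t), \qquad \tilde{c}_t = \tanh(z_t), \\
c_t &= f_t\,c_{t-1} + i_t\,\tilde{c}_t = \sigma(z_t)\,\bigl(c_{t-1} + \tanh(z_t)\bigr), \\
h_t &= o_t\,\tanh(c_t) = \sigma(z_t)\,\tanh(c_t).
\end{aligned}
$$

For the first timestep, with $x_1 = 0.0314$ and $h_0 = c_0 = 0$:

$$
z_1 = 1.0314, \quad \sigma(z_1) \approx 0.7372, \quad \tanh(z_1) \approx 0.7745, \quad c_1 \approx 0.5709, \quad h_1 \approx 0.7372 \cdot \tanh(0.5709) \approx 0.3804,
$$

which agrees with the first per-timestep value the script prints. Nothing in these equations mixes one sample's $h$ or $c$ with another's.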

As expected, the script's output with stateful=False shows no dependence on the sample or on the batch; each sample's output varies only across its own timesteps:

    BATCH_SIZE = 4 , T = 5 , stateful = False
    Batch = 0
    Batch Sample: 0 , per-timestep output
    [0.38041887 0.663519 0.79821336 0.84627265 0.8617684 ]
    Batch Sample: 1 , per-timestep output
    [0.38041887 0.663519 0.79821336 0.84627265 0.8617684 ]
    Batch Sample: 2 , per-timestep output
    [0.38041887 0.663519 0.79821336 0.84627265 0.8617684 ]
    Batch Sample: 3 , per-timestep output
    [0.38041887 0.663519 0.79821336 0.84627265 0.8617684 ]
    Batch = 1
    Batch Sample: 0 , per-timestep output
    [0.38041887 0.663519 0.79821336 0.84627265 0.8617684 ]
    Batch Sample: 1 , per-timestep output
    [0.38041887 0.663519 0.79821336 0.84627265 0.8617684 ]
    Batch Sample: 2 , per-timestep output
    [0.38041887 0.663519 0.79821336 0.84627265 0.8617684 ]
    Batch Sample: 3 , per-timestep output
    [0.38041887 0.663519 0.79821336 0.84627265 0.8617684 ]

Upon setting stateful=True, I was expecting the samples within each batch to yield different outputs (presumably because the TF graph maintains state between the batch samples). This was not the case, however:

    BATCH_SIZE = 4 , T = 5 , stateful = True
    STATEFUL_BETWEEN_BATCHES = True
    Batch = 0
    Batch Sample: 0 , per-timestep output
    [0.38041887 0.663519 0.79821336 0.84627265 0.8617684 ]
    Batch Sample: 1 , per-timestep output
    [0.38041887 0.663519 0.79821336 0.84627265 0.8617684 ]
    Batch Sample: 2 , per-timestep output
    [0.38041887 0.663519 0.79821336 0.84627265 0.8617684 ]
    Batch Sample: 3 , per-timestep output
    [0.38041887 0.663519 0.79821336 0.84627265 0.8617684 ]
    Batch = 1
    Batch Sample: 0 , per-timestep output
    [0.86686385 0.8686781 0.8693927 0.8697042 0.869853 ]
    Batch Sample: 1 , per-timestep output
    [0.86686385 0.8686781 0.8693927 0.8697042 0.869853 ]
    Batch Sample: 2 , per-timestep output
    [0.86686385 0.8686781 0.8693927 0.8697042 0.869853 ]
    Batch Sample: 3 , per-timestep output
    [0.86686385 0.8686781 0.8693927 0.8697042 0.869853 ]

In particular, note the outputs from the first two samples of the same batch are identical.

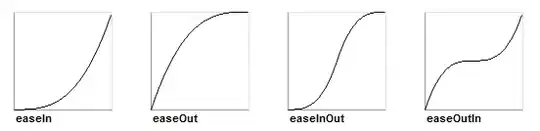

EDIT: I have been informed by OverlordGoldDragon that this behavior is expected, and that my confusion lies in the distinction between a Batch, which is a collection of shape (samples, timesteps, features), and a Sample within a batch (a single "row" of the batch). This is represented by the following figure:

So this raises the question of the dependence (if any) between individual samples in a given batch. From the output of my script, I'm led to believe that each sample is fed to a (logically) separate LSTM block, and that the LSTM states for the different samples are independent. I've drawn this here:

Is my understanding correct?
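
One way to sanity-check this reading without TensorFlow is a pure-Python sketch of the same single-unit LSTM, assuming sigmoid gates, tanh activations, and all weights and biases equal to 1 as in the script. The helper names (`lstm_step`, `run_batch`) and the third sample with a larger input are made up for illustration; they are not part of Keras.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h, c):
    """One step of a 1-unit LSTM whose kernel, recurrent kernel and biases are all 1."""
    z = x + h + 1.0                      # identical pre-activation for all four gates
    gate = sigmoid(z)                    # input, forget and output gates coincide
    c = gate * c + gate * math.tanh(z)   # new cell state
    h = gate * math.tanh(c)              # new hidden state (the per-timestep output)
    return h, c

def run_batch(batch, states):
    """Process every sample with its own (h, c); nothing is shared across rows."""
    outputs, new_states = [], []
    for sample, (h, c) in zip(batch, states):
        row = []
        for x in sample:
            h, c = lstm_step(x, h, c)
            row.append(h)
        outputs.append(row)
        new_states.append((h, c))
    return outputs, new_states

x0 = 3.14 * 0.01
# Samples 0 and 1 mirror the post's constant input; sample 2 is a hypothetical variation.
batch = [[x0] * 5, [x0] * 5, [10 * x0] * 5]
zero_states = [(0.0, 0.0)] * len(batch)

outputs, states = run_batch(batch, zero_states)
for b, row in enumerate(outputs):
    print('Batch Sample:', b, [round(v, 4) for v in row])
```

In this sketch, samples 0 and 1 print the same row because they receive the same input and start from the same zero state, while sample 2 differs only because its input differs. That is consistent with one independent state per batch row, though it is a model of the behavior and not the Keras implementation itself.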

As an aside, it seems that stateful=True is broken in TensorFlow 1.x, because if I remove the explicit assignment of the state from the previous batch:

    sess.run(lstm.states[0].assign(NEXT_STATES[0]))
    sess.run(lstm.states[1].assign(NEXT_STATES[1]))

it stops working, i.e., the second batch's output is identical to the first's.

I rewrote the above script with the TensorFlow 2.0 syntax, and the behavior is what I would expect (without having to manually carry over the LSTM state between batches):

    # TensorFlow 2.0 implementation
    import tensorflow as tf
    import numpy as np

    NUM_UNITS = 1
    NUM_TIME_STEPS = 5
    NUM_FEATURES = 1
    BATCH_SIZE = 4
    STATEFUL = True
    STATEFUL_BETWEEN_BATCHES = True

    lstm = tf.keras.layers.LSTM(units=NUM_UNITS, stateful=STATEFUL,
                                return_state=True, return_sequences=True,
                                batch_input_shape=(BATCH_SIZE, NUM_TIME_STEPS, NUM_FEATURES),
                                kernel_initializer='ones', bias_initializer='ones',
                                recurrent_initializer='ones')

    X_input = np.array([[[3.14*(0.01)]
                         for t in range(NUM_TIME_STEPS)]
                        for b in range(BATCH_SIZE)])

    @tf.function
    def forward(x):
        return lstm(x)

    def matprint(run, mat):
        print('Batch = ', run)
        for b in range(mat.shape[0]):
            print('Batch Sample:', b, ', per-timestep output')
            print(mat[b].squeeze())

    print('BATCH_SIZE = ', BATCH_SIZE, ', T = ', NUM_TIME_STEPS, ', stateful =', STATEFUL)
    if STATEFUL:
        print('STATEFUL_BETWEEN_BATCHES = ', STATEFUL_BETWEEN_BATCHES)

    for r in range(2):
        OUTPUT_NEXTSTATES = forward(X_input)
        OUTPUT = OUTPUT_NEXTSTATES[0].numpy()
        NEXT_STATES = OUTPUT_NEXTSTATES[1:]
        matprint(r, OUTPUT)
        if STATEFUL:
            if STATEFUL_BETWEEN_BATCHES:
                pass
                # Explicitly re-assigning states from the last batch isn't
                # required as the model maintains inter-batch history.
                # This is NOT the same behavior for TF.version < 2.0
                # lstm.states[0].assign(NEXT_STATES[0].numpy())
                # lstm.states[1].assign(NEXT_STATES[1].numpy())
            else:
                lstm.reset_states()

This is the output:

    BATCH_SIZE = 4 , T = 5 , stateful = True
    STATEFUL_BETWEEN_BATCHES = True
    Batch = 0
    Batch Sample: 0 , per-timestep output
    [0.38041887 0.663519 0.79821336 0.84627265 0.8617684 ]
    Batch Sample: 1 , per-timestep output
    [0.38041887 0.663519 0.79821336 0.84627265 0.8617684 ]
    Batch Sample: 2 , per-timestep output
    [0.38041887 0.663519 0.79821336 0.84627265 0.8617684 ]
    Batch Sample: 3 , per-timestep output
    [0.38041887 0.663519 0.79821336 0.84627265 0.8617684 ]
    Batch = 1
    Batch Sample: 0 , per-timestep output
    [0.86686385 0.8686781 0.8693927 0.8697042 0.869853 ]
    Batch Sample: 1 , per-timestep output
    [0.86686385 0.8686781 0.8693927 0.8697042 0.869853 ]
    Batch Sample: 2 , per-timestep output
    [0.86686385 0.8686781 0.8693927 0.8697042 0.869853 ]
    Batch Sample: 3 , per-timestep output
    [0.86686385 0.8686781 0.8693927 0.8697042 0.869853 ]
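
In this output, row b of batch 1 appears to continue from where row b of batch 0 stopped. A way to illustrate that reading is the pure-Python sketch below, which assumes sigmoid gates, tanh activations, and all-ones weights as in the script. The class `TinyStatefulLSTM` is hypothetical and only mimics the idea of one persistent (h, c) pair per batch row; it is not how Keras is implemented.

```python
import math

class TinyStatefulLSTM:
    """Hypothetical 1-unit LSTM (all weights and biases = 1) keeping one state per batch row."""

    def __init__(self, batch_size):
        self.batch_size = batch_size
        self.reset_states()

    def reset_states(self):
        # One independent (h, c) pair per row of the batch.
        self.states = [(0.0, 0.0) for _ in range(self.batch_size)]

    def __call__(self, batch):
        outputs = []
        for b, sample in enumerate(batch):
            h, c = self.states[b]          # resume from row b's previous state
            row = []
            for x in sample:
                z = x + h + 1.0
                gate = 1.0 / (1.0 + math.exp(-z))
                c = gate * c + gate * math.tanh(z)
                h = gate * math.tanh(c)
                row.append(h)
            self.states[b] = (h, c)        # row b of the next batch starts here
            outputs.append(row)
        return outputs

x0 = 3.14 * 0.01
chunk = [[x0] * 5 for _ in range(4)]       # same shape as the post: 4 samples, 5 timesteps

stateful = TinyStatefulLSTM(batch_size=4)
first = stateful(chunk)
second = stateful(chunk)

# Feeding one 10-timestep sequence to a fresh layer gives the same values
# as the two 5-timestep batches processed back to back.
fresh = TinyStatefulLSTM(batch_size=4)
long_run = fresh([[x0] * 10 for _ in range(4)])
print(first[0] + second[0] == long_run[0])

# After a reset, the layer reproduces the first batch again.
stateful.reset_states()
print(stateful(chunk) == first)
```

Under these assumptions, two consecutive stateful batches behave like one longer sequence split into chunks, per row, and resetting the states gives back the first batch's output. That mirrors the difference between the stateful and non-stateful runs shown in the post.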