We can use a relative of split (the by function), but not in the way you think.

First, for a reproducible answer, I'll generate an XLSX:

wb <- openxlsx::createWorkbook()

openxlsx::addWorksheet(wb, "MySheet")

openxlsx::writeData(wb, 1, data.frame(x=c("TABLE", "DATE", "Amount"), y=c("1", "2/15/18", "36")),

startCol=1, startRow=1, colNames=FALSE, rowNames=FALSE)

openxlsx::writeData(wb, 1, mtcars[1:3,1:5],

startCol=1, startRow=4, colNames=TRUE, rowNames=FALSE)

openxlsx::writeData(wb, 1, data.frame(x=c("TABLE", "DATE", "Amount"), y=c("2", "5/20/18", "25")),

startCol=1, startRow=9, colNames=FALSE, rowNames=FALSE)

openxlsx::writeData(wb, 1, mtcars[11:13,1:5],

startCol=1, startRow=12, colNames=TRUE, rowNames=FALSE)

openxlsx::saveWorkbook(wb, "quux.xlsx")

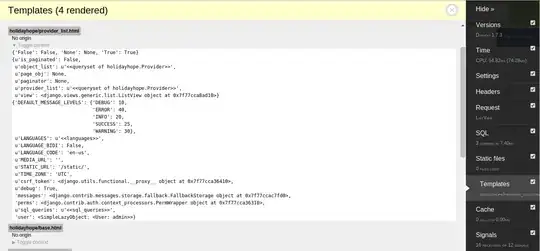

Which generates a single worksheet with the following:

From here, I'll first read in the whole page, knowing that this will be "not usable the way we want":

my.file <- openxlsx::read.xlsx("quux.xlsx", skipEmptyRows = FALSE, colNames = FALSE)

my.file

# X1 X2 X3 X4 X5

# 1 TABLE 1 <NA> <NA> <NA>

# 2 DATE 2/15/18 <NA> <NA> <NA>

# 3 Amount 36 <NA> <NA> <NA>

# 4 mpg cyl disp hp drat

# 5 21 6 160 110 3.9

# 6 21 6 160 110 3.9

# 7 22.8 4 108 93 3.85

# 8 <NA> <NA> <NA> <NA> <NA>

# 9 TABLE 2 <NA> <NA> <NA>

# 10 DATE 5/20/18 <NA> <NA> <NA>

# 11 Amount 25 <NA> <NA> <NA>

# 12 mpg cyl disp hp drat

# 13 17.8 6 167.6 123 3.92

# 14 16.4 8 275.8 180 3.07

# 15 17.3 8 275.8 180 3.07

(I printed out the whole table, because (1) I wanted to demonstrate the table groups, and (2) because I know it's not huge. In your case, it will likely be rather large, so feel free to practice judicious use of head or related views on your larger frame.)

What we're going to do is use the first column (X1) of this wrong-full-frame and find which blocks of rows start with a known header. I'm using the first one, but really any known column can be used to determine when a "real data table" starts post-header.

cumsum(is.na(my.file[[1]]) | my.file[[1]] == "mpg")

# [1] 0 0 0 1 1 1 1 2 2 2 2 3 3 3 3

This tells us that rows 4-7 of this wrong-full-frame are likely grouped together. It also thinks that rows 1-3 are grouped, but that's because every row is in a group, whether a usable-group or not-usable-group. Because of this, when we grab the data for real, we'll need to check if the group we're working on is "usable" or "not-usable" based on whether the known column name is present.

From here, we're going to repeatedly read from the file based on the group type and location.

alltables <- by(

seq_len(nrow(my.file)),

cumsum(is.na(my.file[[1]]) | my.file[[1]] == "mpg"),

function(ind) {

v <- my.file[[1]][ind[1]]

if (!is.na(v) && v == "mpg")

openxlsx::read.xlsx("quux.xlsx", rows=ind)

})

alltables <- Filter(Negate(is.null), alltables)

alltables

# $`1`

# mpg cyl disp hp drat

# 1 21.0 6 160 110 3.90

# 2 21.0 6 160 110 3.90

# 3 22.8 4 108 93 3.85

# $`3`

# mpg cyl disp hp drat

# 1 17.8 6 167.6 123 3.92

# 2 16.4 8 275.8 180 3.07

# 3 17.3 8 275.8 180 3.07

While we take a performance hit for repeatedly reading the xlsx file, this ensures that we get the full gamut of formatting and classification of columns; had we extracted it directly from my.file, everything would likely have been character, and we would need to do all class conversions manually. (I wish there were an easy way to openxlsx::loadWorkbook and repeatedly read from the same handle.)

In your example, you have a summary row. Since it does not have anything in the first column, it is interpreted in my above code as "an empty row" and will not be imported into the frames.

If you really want this row, then you might need to include more logic into the cumsum to locate the additional row; or you can include the first row after each group, assuming that there will be something usable. Note that in the latter case here, you'll be responsible for fixing NA values ... and frankly, storing summary statistics in the same frame as the data is not really a "best practice".

If you get these extra rows and don't want them, you can choose to filter them post-read, or you can selectively remove the last row from ind based on criteria specific to your data.

Follow-up: you didn't ask, but there are three things you can now do with allframes:

If they are all the same format (column names), and ultimately they need to be grouped together, then you can combine them into one frame with one of the following, depending on packages you may be using:

- (base R)

do.call(rbind, alltables)

dplyr::bind_rows(alltables)data.table::rbindlist(alltables)

Note that the latter two will take more effort to align columns if some tables have extra/missing columns and/or columns in a different order; the base-R version is not tolerant of any column-differences.

If they are all the same format but you need to keep them independent, then I recommend you keep them in this list: it is highly likely that when you do something to one frame you will be doing the identical task to all other frames in the list, so it is recommended that you do this "thing" within lapply. (See How do I make a list of data frames?).

You can assign them to individual frames within the environment. (If they are the same format, then I strongly urge #1 or #2 above. If they are different formats, then this one might really make sense.)