I'm trying to build a "multi-headed CNN model" in which each head is a branch that takes in an individual multivariate time series.

What is not clear to me is how to handle the `fit` method, or in other words, how to properly prepare `y_train`. The label has two classes, 0 and 1.

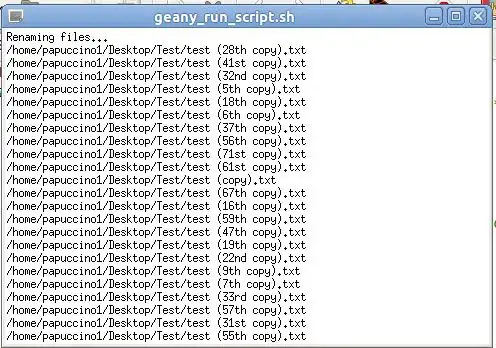

The current architecture is shown in the image above.

The goal is to predict one time step ahead.
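A common way to frame a one-step-ahead target is to cut each long series into fixed-length windows, so that every window becomes one training sample and its label is the class of the step right after it. The sketch below is only an illustration under assumptions not in the original setup: the window length `window` and the helper name `make_windows` are hypothetical, each series is passed as a 2-D `(T, n_features)` array (e.g. `A[0]` rather than `A`), and NumPy >= 1.20 is available for `sliding_window_view`.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def make_windows(series, labels, window):
    """Hypothetical helper: turn one long series into one-step-ahead samples.

    series: array of shape (T, n_features), e.g. A[0] for A of shape (1, T, 5)
    labels: array of shape (T,) or (T, 1), the 0/1 class of each time step
    Returns X of shape (T - window, window, n_features) and
    y of shape (T - window,) where y[i] is the label of the step after window i.
    """
    labels = np.asarray(labels).ravel()
    # Shape (T - window + 1, n_features, window): the window axis is appended last.
    windows = sliding_window_view(series, window_shape=window, axis=0)
    # Reorder to (samples, time steps, features), the layout a Conv1D head expects.
    windows = windows.transpose(0, 2, 1)
    # The last window has no "next step", so it is dropped.
    X = windows[:-1]
    y = labels[window:]
    return X, y
```

The returned windows are views into the original array; materializing them (for example with `np.ascontiguousarray`) multiplies memory use by roughly `window`, which matters at around 900k time steps.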

The input shapes are:

- A training data: `(1, 903155, 5)`
- B training data: `(1, 903116, 5)`
- C training data: `(1, 902996, 5)`
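The three inputs have different lengths, while a multi-input Keras model needs the same number of samples for every input and for the target. A minimal sketch of one way to equalize them, assuming (not stated in the original) that all three series end at the same time step, so the extra rows at the start of the longer series can be dropped; if the series are aligned by timestamp instead, they should be joined on that key. The helper name `trim_to_common_length` is hypothetical.

```python
import numpy as np


def trim_to_common_length(*arrays):
    """Hypothetical helper: trim every array along axis 0 to the shortest length.

    ASSUMPTION: all series end at the same time step, so rows are removed
    from the *start* of the longer arrays. Align on timestamps instead if
    the series do not share an end point.
    """
    n = min(a.shape[0] for a in arrays)
    return tuple(a[a.shape[0] - n:] for a in arrays)
```

With the shapes above, something like `trim_to_common_length(A[0], B[0], C[0], y_train)` would cut all four arrays to 902996 rows, assuming `y_train` is aligned with `A`.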

The label shape is:

- `y_train`: `(903155, 1)`

When I call:

```python
history = model.fit(x=[A, B, C], y=y_in)
```

I get:

```
Input arrays should have the same number of samples as target arrays. Found 1 input samples and 903155 target samples.
```
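This error comes from Keras treating axis 0 of every array as the sample axis: an input of shape `(1, 903155, 5)` is a single sample, while a target of shape `(903155, 1)` is 903155 samples. The hypothetical helper below only illustrates that rule with NumPy; it is not the Keras implementation.

```python
import numpy as np


def check_sample_counts(inputs, target):
    """Hypothetical helper mirroring the rule behind the error:
    every input array and the target must have the same length along
    axis 0 (the sample axis). Returns that shared sample count."""
    counts = {a.shape[0] for a in inputs}
    counts.add(target.shape[0])
    if len(counts) != 1:
        raise ValueError(f"sample counts differ along axis 0: {sorted(counts)}")
    return counts.pop()
```

Inputs shaped `(N, window, 5)` with a target shaped `(N,)` or `(N, 1)` satisfy this check; inputs shaped `(1, T, 5)` with a target shaped `(T, 1)` do not.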

Reshaping `y_in` to `(1, 903155)` results in the following error (`dense_5` is the final layer shown in the image):

```
expected dense_5 to have shape (1,) but got array with shape (903155,)
```

Strangely, `model.predict([A, B, C])` does return results.