I am trying to find the average time complexity for the Fibonacci series using mathematical approach.

The best case is O(1), while the worst case is O(2^n). The Average case can be found by dividing the sum of times of all cases by the number of cases.

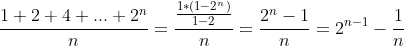

So, I believe that the average case can be found like this(using the sum of geometric series):

Thus, it should be still O(2^n) . Can you please tell me if my calculations and approach are correct?

The sample code for the Fibonacci() is shown below.

def Fibonacci(n):

if n==1:

return 0

elif n==2:

return 1

else:

return Fibonacci(n-1)+Fibonacci(n-2)