I'm not sure how to modify my code to get Keras activations. I've seen conflicting examples of what to pass to K.function() as inputs, and I can't tell whether I'm getting the per-layer outputs or the activations.

Here is my code:

    from keras import backend as K
    import seaborn as sns

    activity = 'Downstairs'
    layer = 1

    # Segments for the selected activity only
    seg_x = create_segments_and_labels(
        df[df['ActivityEncoded'] == mapping[activity]],
        TIME_PERIODS, STEP_DISTANCE, LABEL)[0]

    # Backend function from the model input to the selected layer's output
    get_layer_output = K.function([model_m.layers[0].input],
                                  [model_m.layers[layer].output])
    layer_output = get_layer_output([seg_x])[0]

    try:
        # Plot the output for the first segment
        ax = sns.heatmap(layer_output[0].transpose(), cbar=True,
                         cbar_kws={'label': 'Activation'})
    except Exception:
        # Fall back to plotting the whole output array
        ax = sns.heatmap(layer_output.transpose(), cbar=True,
                         cbar_kws={'label': 'Activation', 'rotate': 180})

    ax.set_xlabel('Kernel', fontsize=30)
    ax.set_yticks(range(0, len(layer_output[0][0]) + 1, 10))
    ax.set_yticklabels(range(0, len(layer_output[0][0]) + 1, 10))
    ax.set_xticks(range(0, len(layer_output[0]) + 1, 5))
    ax.set_xticklabels(range(0, len(layer_output[0]) + 1, 5))
    ax.set_ylabel('Filter', fontsize=30)
    ax.xaxis.labelpad = 10
    ax.set_title('Filter vs. Kernel\n(Layer=' + model_m.layers[layer].name
                 + ')(Activity=' + activity + ')', fontsize=35)

The suggestions here on Stack Overflow do it the same way I do: "Keras, How to get the output of each layer?"

Example 4 on this page adds the backend's learning phase to the K.function() inputs, but my output is still the same: https://www.programcreek.com/python/example/93732/keras.backend.function

Am I getting outputs or activations? The documentation implies I might need layers.activations, but I haven't been able to make that work.
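To make the distinction I'm asking about concrete, here is a minimal pure-Python sketch. It is not Keras code: the helpers `dense_pre_activation` and `relu` and all of the numbers are made up for illustration. As far as I understand, when a Keras layer is created with an `activation` argument, its `output` is the value after that activation has been applied, which would correspond to `post` below rather than `pre`.

```python
def relu(values):
    """Element-wise ReLU: negative entries become 0.0."""
    return [max(0.0, v) for v in values]


def dense_pre_activation(inputs, weights, bias):
    """Weighted sum plus bias for each unit, before any activation.

    weights[j] holds the input weights of unit j (hypothetical layout).
    """
    return [
        sum(w * x for w, x in zip(unit_weights, inputs)) + b
        for unit_weights, b in zip(weights, bias)
    ]


# Hypothetical toy layer: 2 inputs, 2 units
inputs = [1.0, -2.0]
weights = [[0.5, 1.0], [1.0, 0.25]]
bias = [0.0, 0.25]

pre = dense_pre_activation(inputs, weights, bias)  # linear part only
post = relu(pre)                                   # after the activation

print("pre-activation: ", pre)
print("post-activation:", post)
```

If that understanding is right, the heatmap from my code already shows activations whenever the layer at index `layer` has its activation built in.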

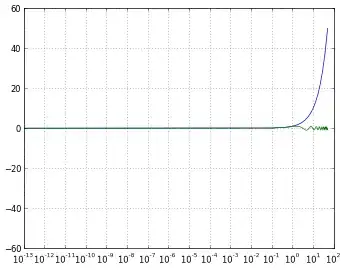

Both my code and the version that passes in the learning phase produce this heatmap: https://i.stack.imgur.com/1d3vB.jpg