I have a dataframe like the one given below:

    import pandas as pd

    df = pd.DataFrame({
        'subject_id': [1,1,1,1,2,2,2,2,3,3,4,4,4,4,4],
        'readings': ['READ_1','READ_2','READ_1','READ_3','READ_1','READ_5','READ_6','READ_8','READ_10','READ_12','READ_11','READ_14','READ_09','READ_08','READ_07'],
        'val': [5,6,7,11,5,7,16,12,13,56,32,13,45,43,46],
    })

What I would like to do is create multiple dataframes out of this one (df1, df2). In practice it doesn't have to be two; it could be 10 or 20 depending on the size of my data. I ask because I intend to do parallel processing: I will divide one huge df into multiple smaller dataframes and process them in parallel.

For example, df1 should contain all the records of two subjects, and df2 should contain all the records of the remaining two subjects.

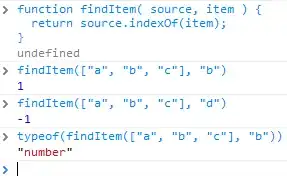

I tried this, but it isn't right:

    grouped = df.groupby('subject_id')
    df1 = grouped.filter(lambda x: x['subject_id'] == 2)

I expect my output to look like this:

df1 - contains all records of 2 subjects. In practice, I want to select 100 subjects and have all their records in one dataframe.

df2 - contains all records of the other 2 subjects. Likewise, in practice this would hold all records of another 100 subjects.

As you can see, the data is clearly segregated by subject, and no subject's data appears in more than one dataframe. For example, subject_id = 1 has data only in df1.
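To make the expected result concrete, here is a rough sketch of the kind of split I mean. It is only an illustration, not necessarily the best approach: the helper name `split_by_subject` and its `n_chunks` parameter are made up for this example, and it assumes that splitting the unique subject ids into roughly equal groups with `np.array_split` is acceptable.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({
    'subject_id': [1,1,1,1,2,2,2,2,3,3,4,4,4,4,4],
    'readings': ['READ_1','READ_2','READ_1','READ_3','READ_1','READ_5','READ_6','READ_8',
                 'READ_10','READ_12','READ_11','READ_14','READ_09','READ_08','READ_07'],
    'val': [5,6,7,11,5,7,16,12,13,56,32,13,45,43,46],
})

def split_by_subject(frame, n_chunks, key='subject_id'):
    """Hypothetical helper: split `frame` into `n_chunks` dataframes,
    keeping all rows of a given subject in the same dataframe."""
    # Unique subject ids, in order of first appearance.
    ids = frame[key].unique()
    # Partition the ids (not the rows) into roughly equal groups.
    id_groups = np.array_split(ids, n_chunks)
    # Select every row whose subject id belongs to each group.
    return [frame[frame[key].isin(group)] for group in id_groups]

df1, df2 = split_by_subject(df, 2)
```

With the sample data, df1 ends up holding the rows for subjects 1 and 2, and df2 the rows for subjects 3 and 4. Because the split is done on subject ids rather than on rows, a subject never straddles two dataframes, although the dataframes can differ in row count when subjects have different numbers of records.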
