I am working on regression problem and i used ad-boost with decision tree for regression and r^2 as evaluation measure. I want to know how much difference between training r^2 and testing r^2 is considered suitable. I have training r^2 is 0.9438 and testing r^2 is 0.877. Is it over-fitting or good?.I just want to know exactly how much difference between training and testing is acceptable or suitable?.

1 Answers

There are several issues with your question.

To start with, r^2 is certainly not recommended as a performance evaluation measure for predictive problems; quoting from my own answer in another SO thread:

the whole R-squared concept comes in fact directly from the world of statistics, where the emphasis is on interpretative models, and it has little use in machine learning contexts, where the emphasis is clearly on predictive models; at least AFAIK, and beyond some very introductory courses, I have never (I mean never...) seen a predictive modeling problem where the R-squared is used for any kind of performance assessment; neither it's an accident that popular machine learning introductions, such as Andrew Ng's Machine Learning at Coursera, do not even bother to mention it. And, as noted in the Github thread above (emphasis added):

In particular when using a test set, it's a bit unclear to me what the R^2 means.

with which I certainly concur.

Second:

I have training r^2 is 0.9438 and testing r^2 is 0.877. Is it over-fitting or good?

A difference between a training and a test score by itself does not signify overfitting. This is just the generalization gap, i.e. the expected gap in the performance between the training and validation sets; quoting from a recent blog post by Google AI:

An important concept for understanding generalization is the generalization gap, i.e., the difference between a model’s performance on training data and its performance on unseen data drawn from the same distribution.

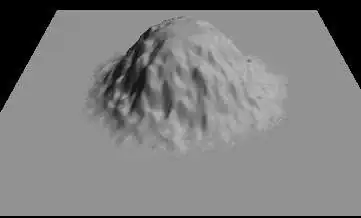

The telltale signature of overfitting is when your validation loss starts increasing, while your training loss continues decreasing, i.e.:

(image adapted from the Wikipedia entry on overfitting - diferent things may lie in the horizontal axis, e.g. here the number of boosted trees)

I just want to know exactly how much difference between training and testing is acceptable or suitable?

There is no general answer to this question; everything depends on the details of your data and the business problem you are trying to solve.

- 1

- 1

- 57,590

- 26

- 140

- 166