I am using matplotlib as part of a Python application to analyze some data. My goal is to loop over a container of objects and, for each of those, produce a plot of the contained data. Here is a list of the versions used:

- Python 3.6.9

- matplotlib 3.1.1

- numpy 1.15.4

PROBLEM

The problem I am experiencing is that the memory consumed by the Python application grows significantly during the loop that generates the graphs, to a point where the computer becomes almost unresponsive.

I have reduced the implementation to a short script that reproduces the behavior (it was inspired by a post I found on the matplotlib GitHub while searching for answers on the web):

```python
import logging

import matplotlib as mpl
import matplotlib.pyplot as plt
from memory_profiler import profile
from memory_profiler import memory_usage
import numpy as np

TIMES = 20
SAMPLES_PER_LINE = 20000
STATIONS = 100

np_ax = np.linspace(1, STATIONS, num=SAMPLES_PER_LINE)
np_ay = np.random.random_sample(size=SAMPLES_PER_LINE)

logging.basicConfig(level="INFO")


@profile
def do_plots_close(i):
    fig = plt.figure(figsize=(16, 10), dpi=60)
    for stn in range(STATIONS):
        plt.plot(np_ax, np_ay + stn)
    s_file_name = 'withClose_{:03d}.png'.format(i)
    plt.savefig(s_file_name)
    logging.info("Printed file %s", s_file_name)
    plt.close(fig)


@profile
def do_plots_clf(i):
    plt.clf()
    for stn in range(STATIONS):
        plt.plot(np_ax, np_ay + stn)
    s_file_name = "withCLF_{:03d}.png".format(i)
    plt.savefig(s_file_name)
    logging.info("Printed file %s", s_file_name)


def with_close():
    for i in range(TIMES):
        do_plots_close(i)


def with_clf():
    fig = plt.figure(figsize=(16, 10), dpi=60)
    for i in range(TIMES):
        do_plots_clf(i)
    plt.close(fig)


if __name__ == "__main__":
    logging.info("Matplotlib backend used: %s", mpl.get_backend())

    mem_with_close = memory_usage((with_close, [], {}))
    mem_with_clf = memory_usage((with_clf, [], {}))

    plt.plot(mem_with_close, label='New figure opened and closed at each loop')
    plt.plot(mem_with_clf, label='Single figure cleared at every loop')
    plt.legend()
    plt.title("Backend: {:s} - memory usage".format(mpl.get_backend()))
    plt.ylabel('MB')
    plt.grid()
    plt.xlabel('time [s * 0.1]')  # `memory_usage` logs every 100ms
    plt.show()
```

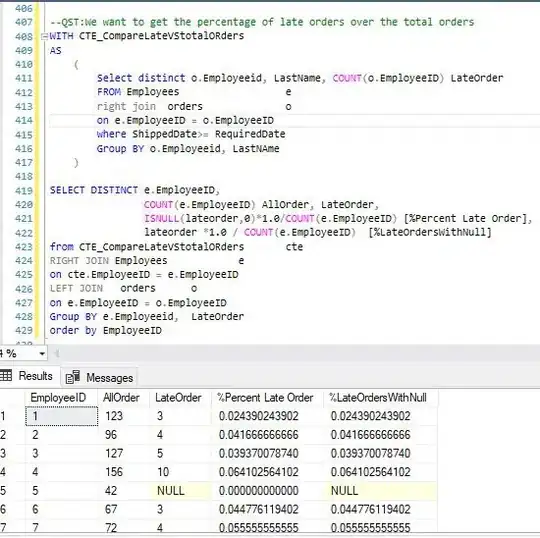

There are 2 functions that plot TIMES graphs each with SAMPLES lines with SAMPLES_PER_LINE elements (somewhat close to the size of the data I am dealing with). do_plots_close() creates a new figure at the beginning of each loop and closes it at the end; whereas do_plots_clf() operates on the same figure after that a cleanup is performed.

memory_profiler is used to get the memory consumed by the 2 functions, which is then plotted into a figure that is attached:

Looking at the figure, it is clear that in neither function is the memory consumed by a single figure completely freed after the figure is closed (in do_plots_close()) or cleared (in do_plots_clf()). I have also tried removing the figsize argument when the figure is created, but I did not see any difference in the outcome.
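One way to check whether the memory is merely waiting for Python's cyclic garbage collector (a Figure and its Axes reference each other, so reference counting alone may not free them immediately) is to repeat do_plots_close() with an explicit gc.collect() right after plt.close(fig). The sketch below assumes the non-interactive Agg backend, since the figures are only saved to disk; do_plots_close_gc, its stations parameter and the withCloseGC_ file prefix are hypothetical additions, and whether the memory curve actually flattens is something memory_profiler would still have to confirm.

```python
import gc

import matplotlib
matplotlib.use("Agg")  # assumption: no interactive window is needed
import matplotlib.pyplot as plt
import numpy as np

SAMPLES_PER_LINE = 20000
STATIONS = 100

np_ax = np.linspace(1, STATIONS, num=SAMPLES_PER_LINE)
np_ay = np.random.random_sample(size=SAMPLES_PER_LINE)


def do_plots_close_gc(i, stations=STATIONS):
    """Same as do_plots_close(), plus an explicit garbage-collection pass."""
    fig = plt.figure(figsize=(16, 10), dpi=60)
    for stn in range(stations):
        plt.plot(np_ax, np_ay + stn)
    s_file_name = "withCloseGC_{:03d}.png".format(i)
    plt.savefig(s_file_name)
    plt.close(fig)  # removes the figure from pyplot's figure manager
    # The figure and its artists form reference cycles, so force a full
    # collection now instead of waiting for the collector's own thresholds.
    unreachable = gc.collect()
    return s_file_name, unreachable
```

To compare, with_close() could call do_plots_close_gc(i) instead of do_plots_close(i), and the resulting memory_usage() curve could be plotted next to the other two.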

QUESTION

I am looking for a better way to handle this problem and to reduce the amount of memory used by the function that saves the graphs.

- Am I misusing the matplotlib API?

- Is there a way to manage the same amount of data without suffering this memory increase?

- Why does the memory seem to be released only after several plots have been made?
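A direction that touches the first two questions is to bypass pyplot entirely: a matplotlib.figure.Figure created directly is never registered with pyplot's global figure manager, so nothing outside the function keeps it alive. The sketch below assumes the graphs only need to be written to PNG files; it attaches an Agg canvas explicitly and also swaps the 100 separate plot() calls for a single matplotlib.collections.LineCollection. The names do_plots_no_pyplot, build_segments and the noPyplot_ file prefix are hypothetical, and I have not measured whether either change lowers the memory peak for this data size.

```python
import numpy as np
from matplotlib.backends.backend_agg import FigureCanvasAgg
from matplotlib.collections import LineCollection
from matplotlib.figure import Figure

SAMPLES_PER_LINE = 20000
STATIONS = 100

np_ax = np.linspace(1, STATIONS, num=SAMPLES_PER_LINE)
np_ay = np.random.random_sample(size=SAMPLES_PER_LINE)


def build_segments(stations):
    """Return an array of shape (stations, SAMPLES_PER_LINE, 2) of (x, y) points."""
    offsets = np.arange(stations)[:, np.newaxis]  # one vertical offset per station
    ys = np_ay[np.newaxis, :] + offsets           # same curve shifted by stn
    xs = np.broadcast_to(np_ax, ys.shape)         # shared x values for every station
    return np.stack([xs, ys], axis=-1)


def do_plots_no_pyplot(i, stations=STATIONS):
    """Save one PNG without pyplot, drawing all stations as one LineCollection."""
    fig = Figure(figsize=(16, 10), dpi=60)
    FigureCanvasAgg(fig)  # attach an Agg canvas so savefig() can render a PNG
    ax = fig.add_subplot(1, 1, 1)

    # One artist for all stations instead of one Line2D per plot() call;
    # "C0".."C9" approximate the default color cycle used by plt.plot().
    colors = ["C{}".format(k % 10) for k in range(stations)]
    ax.add_collection(LineCollection(build_segments(stations), colors=colors))
    ax.autoscale_view()  # make sure the view limits cover the added data

    s_file_name = "noPyplot_{:03d}.png".format(i)
    fig.savefig(s_file_name)
    return s_file_name
```

In the reproduction script, with_close() would call do_plots_no_pyplot(i) in its loop; no close() or clf() call is needed because the figure becomes unreachable when the function returns.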

Any help and suggestions are greatly appreciated.

Thank you.