I'm trying to set up a Redis cluster and I followed this guide here: https://rancher.com/blog/2019/deploying-redis-cluster/

Basically, I'm creating a StatefulSet with 6 replicas so that I can have 3 master nodes and 3 slave nodes. After all the nodes are up, I create the cluster, and it all works fine. But if I look into the file "nodes.conf" (where the configuration of all the nodes should be saved) on each Redis node, I can see it's empty. This is a problem because whenever a Redis node gets restarted, it searches that file for its own configuration so that it can update its IP address and MEET the other nodes. It finds nothing, so it basically starts a new cluster on its own, with a new ID.
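To tell apart the possible states of that file (missing, empty, or populated), a check along these lines can be run inside a pod. This is only a sketch: it assumes the file lives at /data/nodes.conf, as set by cluster-config-file in my redis.conf, and the function name check_nodes_conf is made up for illustration.

```shell
#!/bin/sh
# Report the state of a Redis cluster config file.
# check_nodes_conf is a hypothetical helper name, not part of Redis.
check_nodes_conf() {
  f="${1:-/data/nodes.conf}"
  if [ ! -e "$f" ]; then
    echo "missing"        # file was never created
  elif [ ! -s "$f" ]; then
    echo "empty"          # file exists but has 0 bytes
  elif grep -q 'myself' "$f"; then
    echo "populated"      # file contains this node's own entry
  else
    echo "no-myself"      # file has content but no entry for this node
  fi
}

# Default path taken from cluster-config-file in redis.conf.
check_nodes_conf /data/nodes.conf
```

In my case I would expect "populated" on every pod once the cluster has been created, but the 0-byte file corresponds to the "empty" case.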

My storage is an NFS-connected shared folder. This is the YAML responsible for the storage access:

    kind: Deployment
    apiVersion: extensions/v1beta1
    metadata:
      name: nfs-provisioner-raid5
    spec:
      replicas: 1
      strategy:
        type: Recreate
      template:
        metadata:
          labels:
            app: nfs-provisioner-raid5
        spec:
          serviceAccountName: nfs-provisioner-raid5
          containers:
            - name: nfs-provisioner-raid5
              image: quay.io/external_storage/nfs-client-provisioner:latest
              volumeMounts:
                - name: nfs-raid5-root
                  mountPath: /persistentvolumes
              env:
                - name: PROVISIONER_NAME
                  value: 'nfs.raid5'
                - name: NFS_SERVER
                  value: 10.29.10.100
                - name: NFS_PATH
                  value: /raid5
          volumes:
            - name: nfs-raid5-root
              nfs:
                server: 10.29.10.100
                path: /raid5
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: nfs-provisioner-raid5
    ---
    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: nfs.raid5
    provisioner: nfs.raid5
    parameters:
      archiveOnDelete: "false"

This is the YAML of the redis cluster StatefulSet:

    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: redis-cluster
      labels:
        app: redis-cluster
    spec:
      serviceName: redis-cluster
      replicas: 6
      selector:
        matchLabels:
          app: redis-cluster
      template:
        metadata:
          labels:
            app: redis-cluster
        spec:
          containers:
            - name: redis
              image: redis:5-alpine
              ports:
                - containerPort: 6379
                  name: client
                - containerPort: 16379
                  name: gossip
              command: ["/conf/fix-ip.sh", "redis-server", "/conf/redis.conf"]
              readinessProbe:
                exec:
                  command:
                    - sh
                    - -c
                    - "redis-cli -h $(hostname) ping"
                initialDelaySeconds: 15
                timeoutSeconds: 5
              livenessProbe:
                exec:
                  command:
                    - sh
                    - -c
                    - "redis-cli -h $(hostname) ping"
                initialDelaySeconds: 20
                periodSeconds: 3
              env:
                - name: POD_IP
                  valueFrom:
                    fieldRef:
                      fieldPath: status.podIP
              volumeMounts:
                - name: conf
                  mountPath: /conf
                  readOnly: false
                - name: data
                  mountPath: /data
                  readOnly: false
          volumes:
            - name: conf
              configMap:
                name: redis-cluster
                defaultMode: 0755
      volumeClaimTemplates:
        - metadata:
            name: data
            labels:
              name: redis-cluster
          spec:
            accessModes: [ "ReadWriteOnce" ]
            storageClassName: nfs.raid5
            resources:
              requests:
                storage: 1Gi

This is the configMap:

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: redis-cluster
      labels:
        app: redis-cluster
    data:
      fix-ip.sh: |
        #!/bin/sh
        CLUSTER_CONFIG="/data/nodes.conf"
        echo "creating nodes"
        if [ -f ${CLUSTER_CONFIG} ]; then
          if [ -z "${POD_IP}" ]; then
            echo "Unable to determine Pod IP address!"
            exit 1
          fi
          echo "Updating my IP to ${POD_IP} in ${CLUSTER_CONFIG}"
          sed -i.bak -e "/myself/ s/[0-9]\{1,3\}\.[0-9]\{1,3\}\.[0-9]\{1,3\}\.[0-9]\{1,3\}/${POD_IP}/" ${CLUSTER_CONFIG}
          echo "done"
        fi
        exec "$@"
      redis.conf: |+
        cluster-enabled yes
        cluster-require-full-coverage no
        cluster-node-timeout 15000
        cluster-config-file /data/nodes.conf
        cluster-migration-barrier 1
        appendonly yes
        protected-mode no
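For reference, here is a small standalone sketch of what the sed line in fix-ip.sh is meant to do to a populated nodes.conf. The node IDs, the IP addresses and the function name rewrite_myself_ip are made up for illustration; only the sed expression is taken from the script.

```shell
#!/bin/sh
# Rewrite the first IPv4 address on the "myself" line of a nodes.conf file.
# $1 = path to the file, $2 = new IP (hypothetical helper, same sed as fix-ip.sh).
rewrite_myself_ip() {
  sed -i.bak -e "/myself/ s/[0-9]\{1,3\}\.[0-9]\{1,3\}\.[0-9]\{1,3\}\.[0-9]\{1,3\}/$2/" "$1"
}

# Made-up sample content: one line for this node, one for a replica.
sample=$(mktemp)
cat > "$sample" <<'EOF'
aaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa 10.40.0.27:6379@16379 myself,master - 0 0 1 connected 0-5460
bbbbbbbbbbbbbbbbbbbbbbbbbbbbbbbbbbbbbbbb 10.44.0.14:6379@16379 slave aaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa 0 0 1 connected
EOF

# Pretend the pod came back with a new address.
rewrite_myself_ip "$sample" 10.40.0.99
cat "$sample"
rm -f "$sample" "$sample.bak"
```

Only the line containing "myself" gets the new address. With a 0-byte nodes.conf there is no such line, so the substitution has nothing to change.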

I created the cluster using this command:

    kubectl exec -it redis-cluster-0 -- redis-cli --cluster create --cluster-replicas 1 $(kubectl get pods -l app=redis-cluster -o jsonpath='{range.items[*]}{.status.podIP}:6379 ')

What am I doing wrong?

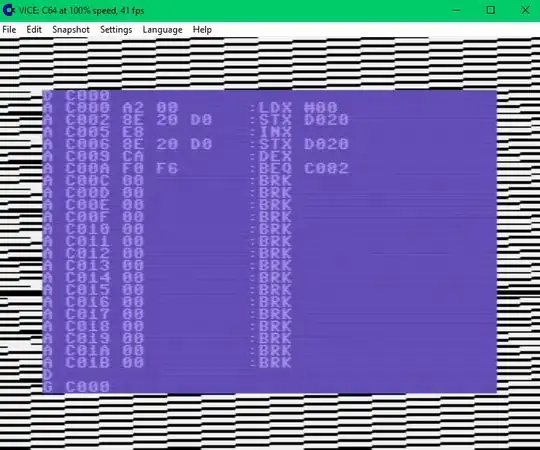

this is what I see into the /data folder:

the nodes.conf file shows 0 bytes.

Lastly, this is the log from the redis-cluster-0 pod:

    creating nodes
    1:C 07 Nov 2019 13:01:31.166 # oO0OoO0OoO0Oo Redis is starting oO0OoO0OoO0Oo
    1:C 07 Nov 2019 13:01:31.166 # Redis version=5.0.4, bits=64, commit=00000000, modified=0, pid=1, just started
    1:C 07 Nov 2019 13:01:31.166 # Configuration loaded
    1:M 07 Nov 2019 13:01:31.179 * No cluster configuration found, I'm e55801f9b5d52f4e599fe9dba5a0a1e8dde2cdcb
    1:M 07 Nov 2019 13:01:31.182 * Running mode=cluster, port=6379.
    1:M 07 Nov 2019 13:01:31.182 # WARNING: The TCP backlog setting of 511 cannot be enforced because /proc/sys/net/core/somaxconn is set to the lower value of 128.
    1:M 07 Nov 2019 13:01:31.182 # Server initialized
    1:M 07 Nov 2019 13:01:31.182 # WARNING you have Transparent Huge Pages (THP) support enabled in your kernel. This will create latency and memory usage issues with Redis. To fix this issue run the command 'echo never > /sys/kernel/mm/transparent_hugepage/enabled' as root, and add it to your /etc/rc.local in order to retain the setting after a reboot. Redis must be restarted after THP is disabled.
    1:M 07 Nov 2019 13:01:31.185 * Ready to accept connections
    1:M 07 Nov 2019 13:08:04.264 # configEpoch set to 1 via CLUSTER SET-CONFIG-EPOCH
    1:M 07 Nov 2019 13:08:04.306 # IP address for this node updated to 10.40.0.27
    1:M 07 Nov 2019 13:08:09.216 # Cluster state changed: ok
    1:M 07 Nov 2019 13:08:10.144 * Replica 10.44.0.14:6379 asks for synchronization
    1:M 07 Nov 2019 13:08:10.144 * Partial resynchronization not accepted: Replication ID mismatch (Replica asked for '27972faeb07fe922f1ab581cac0fe467c85c3efd', my replication IDs are '31944091ef93e3f7c004908e3ff3114fd733ea6a' and '0000000000000000000000000000000000000000')
    1:M 07 Nov 2019 13:08:10.144 * Starting BGSAVE for SYNC with target: disk
    1:M 07 Nov 2019 13:08:10.144 * Background saving started by pid 1041
    1041:C 07 Nov 2019 13:08:10.161 * DB saved on disk
    1041:C 07 Nov 2019 13:08:10.161 * RDB: 0 MB of memory used by copy-on-write
    1:M 07 Nov 2019 13:08:10.233 * Background saving terminated with success
    1:M 07 Nov 2019 13:08:10.243 * Synchronization with replica 10.44.0.14:6379 succeeded
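To compare node IDs across restarts, the "No cluster configuration found" line can be pulled out of the pod log. A minimal sketch, assuming the log format shown in this post; the function name fresh_node_ids is made up for illustration.

```shell
#!/bin/sh
# Print the node ID from every "No cluster configuration found" line on stdin.
# fresh_node_ids is a hypothetical helper name.
fresh_node_ids() {
  sed -n "s/.*No cluster configuration found, I'm \([0-9a-f]\{40\}\).*/\1/p"
}

# Example using the startup line from the redis-cluster-0 log in this post.
echo "1:M 07 Nov 2019 13:01:31.179 * No cluster configuration found, I'm e55801f9b5d52f4e599fe9dba5a0a1e8dde2cdcb" | fresh_node_ids
```

Piping `kubectl logs redis-cluster-0` into the function before and after a restart should print two different IDs if the node really comes back as a brand new one.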

Thank you for the help.