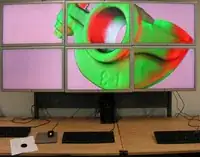

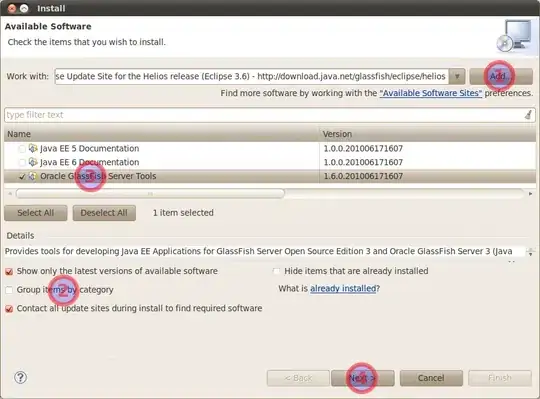

If I understand correctly, you want to remove the background and extract the object. Instead of using Canny, here's an alternative approach. Since you didn't provide the original image, I used a screenshot of your image as input. In general, there are several ways to obtain a binary image for boundary extraction: regular thresholding, Otsu's thresholding, adaptive thresholding, and Canny edge detection. In this case, Otsu's is probably the best choice since there is background noise.

First, we convert the image to grayscale, then apply Otsu's threshold to obtain a binary image.

There are unwanted sections joined to the object, so to remove them we perform a morph open (`cv2.MORPH_OPEN`) to separate the joints.

Now that the joints are separated, we find contours and sort them by contour area. We take the largest contour, which represents the desired object, and draw it onto a mask.

We're almost there, but there are imperfections, so we morph close to fill the holes.

Next, we bitwise-and the mask with the original image.

Finally, to get the desired result, we set every pixel that is black on the mask to white.

From here you could use NumPy slicing to extract the ROI, but I'm not completely sure what you were trying to do, so I'll leave that up to you.

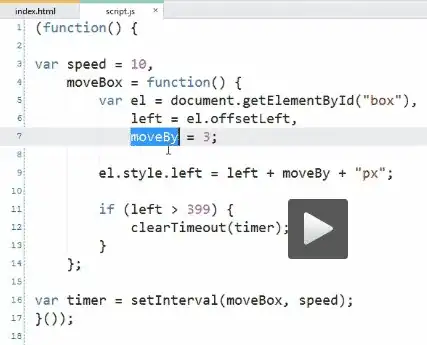

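    import cv2
    import numpy as np

    # Load the image, keep a copy, and create an empty 3-channel mask
    image = cv2.imread("1.png")
    original = image.copy()
    mask = np.zeros(image.shape, dtype=np.uint8)

    # Grayscale, then Otsu's threshold (inverted so the object is white)
    gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
    thresh = cv2.threshold(gray, 0, 255, cv2.THRESH_BINARY_INV + cv2.THRESH_OTSU)[1]

    # Morph open to separate the joints
    kernel = cv2.getStructuringElement(cv2.MORPH_RECT, (5,5))
    opening = cv2.morphologyEx(thresh, cv2.MORPH_OPEN, kernel, iterations=3)

    # Find contours (return signature differs between OpenCV versions)
    cnts = cv2.findContours(opening, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)
    cnts = cnts[0] if len(cnts) == 2 else cnts[1]

    # Sort by area and draw only the largest contour, filled, onto the mask
    cnts = sorted(cnts, key=cv2.contourArea, reverse=True)
    for c in cnts:
        cv2.drawContours(mask, [c], -1, (255,255,255), -1)
        break

    # Morph close to fill holes, then convert the mask to a single channel
    close = cv2.morphologyEx(mask, cv2.MORPH_CLOSE, kernel, iterations=4)
    close = cv2.cvtColor(close, cv2.COLOR_BGR2GRAY)

    # Keep only the masked object, then paint everything outside the mask white
    result = cv2.bitwise_and(original, original, mask=close)
    result[close==0] = (255,255,255)

    cv2.imshow('result', result)
    cv2.waitKey()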
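
If you do want the ROI, one possible way to do the NumPy slicing is to take the bounding box of the nonzero mask pixels and slice the image with it. This is only a sketch: `crop_to_mask` is a hypothetical helper name, and the arrays below are made-up stand-ins for `result` and `close`.

```python
import numpy as np

def crop_to_mask(image, mask):
    """Return the part of `image` inside the bounding box of nonzero `mask` pixels."""
    ys, xs = np.nonzero(mask)
    if ys.size == 0:
        raise ValueError("mask is empty, nothing to crop")
    y0, y1 = ys.min(), ys.max() + 1
    x0, x1 = xs.min(), xs.max() + 1
    return image[y0:y1, x0:x1]

# Made-up stand-ins for `result` (3-channel image) and `close` (1-channel mask).
image = np.full((100, 120, 3), 255, dtype=np.uint8)
mask = np.zeros((100, 120), dtype=np.uint8)
mask[20:60, 30:90] = 255

roi = crop_to_mask(image, mask)
print(roi.shape)  # (40, 60, 3)
```

With the variables from the script above, that would be `roi = crop_to_mask(result, close)`. As far as I know, `cv2.boundingRect(close)` should give you the same box as `(x, y, w, h)` if you'd rather stay in OpenCV.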