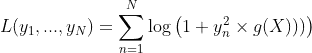

I have a dataset containing a matrix of features X and a matrix of labels y of size N where each element y_i belongs to [0,1]. I have the following loss function

where g(.) is a function that depends on the input matrix X.

I know that a Keras custom loss function has to have the form `customLoss(y_true, y_pred)`. However, I'm having difficulty incorporating the term g(X) into the loss function, since it depends on the input matrix.
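Because Keras only hands the loss `y_true` and `y_pred`, one common workaround is to carry the per-example value of g(X_i) inside `y_true`: concatenate it as extra columns of the targets, then slice it back out inside the loss. The sketch below shows the packing and unpacking in NumPy so it can be checked in isolation. It assumes g(X_i) has already been computed per example with the same shape as the labels, borrows the loss form `(log y - log y_pred)^2 + g * y_pred` from the `lambdaLoss` in the edit further down, and uses hypothetical helper names (`pack_targets`, `packed_loss`). The same slicing should carry over to tensors inside a real Keras loss with `np.log` swapped for `K.log`.

```python
import numpy as np

def pack_targets(y, g_vals):
    """Append per-example g(X_i) values as extra columns of the targets.

    y:      (N, m) labels in [0, 1]
    g_vals: (N, m) per-example values of g(X_i), assumed precomputed
    """
    return np.concatenate([y, g_vals], axis=1)

def packed_loss(y_true_packed, y_pred, eps=1e-7):
    """Per-example loss (log y - log y_pred)^2 + g * y_pred, averaged over columns."""
    m = y_pred.shape[1]
    y_true = y_true_packed[:, :m]  # the original labels
    g = y_true_packed[:, m:]       # g(X_i) carried in through y_true
    per_elem = (np.log(y_true + eps) - np.log(y_pred + eps)) ** 2 + g * y_pred
    return per_elem.mean(axis=1)

y = np.array([[0.5, 1.0], [0.2, 0.8]])
g_vals = np.array([[1.0, 2.0], [0.0, 0.5]])
y_pred = np.array([[0.5, 1.0], [0.2, 0.8]])  # perfect predictions

packed = pack_targets(y, g_vals)
print(packed.shape)  # (2, 4)
# with perfect predictions the log term vanishes, leaving mean(g * y_pred) per row
print(packed_loss(packed, y_pred))
```

In Keras this means passing the packed array as the `y` argument of `fit`; the trade-off is that `y_true` and `y_pred` no longer have the same width, so any built-in metrics that compare them directly would need the same slicing.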

For each data point in my dataset, the input has the form X_i = (H, P), where H and P are matrices, and g is defined per data point as g(X_i) = H × P. Since g depends on each example, can I pass a = (H, P) to the loss function, or do I need to pass all the matrices at once by concatenating them?
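If "H × P" means the matrix product H_i P_i, g does not have to be evaluated one example at a time: stack all the H_i into an array of shape (N, r, k) and all the P_i into (N, k, c), and `np.matmul` multiplies them pair by pair. The sizes `r`, `k`, `c` and the helper name `g_batch` are assumptions for illustration. Flattening each product gives one row per example, which can then be fed to the model as an extra input array or concatenated onto the targets.

```python
import numpy as np

def g_batch(H, P):
    """Compute g(X_i) = H_i @ P_i for every example at once.

    H: (N, r, k) stack of per-example H matrices
    P: (N, k, c) stack of per-example P matrices
    Returns an (N, r * c) array: one flattened g(X_i) per row.
    """
    prod = np.matmul(H, P)           # batched matrix product -> (N, r, c)
    return prod.reshape(len(H), -1)  # flatten each example's result

rng = np.random.default_rng(0)
N, r, k, c = 4, 2, 3, 2
H = rng.normal(size=(N, r, k))
P = rng.normal(size=(N, k, c))

g_vals = g_batch(H, P)
print(g_vals.shape)  # (4, 4)

# each row matches the single-example product
assert np.allclose(g_vals[1], (H[1] @ P[1]).ravel())
```

Precomputing g this way keeps the per-example pairing intact: row i of `g_vals` always belongs to row i of X and y, so Keras can shuffle and batch all the arrays together.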

Edit (based on Daniel's answer):

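    import numpy as np
    from tensorflow import keras
    from tensorflow.keras import backend as K
    from tensorflow.keras.layers import Lambda
    from tensorflow.keras.optimizers import Adam

    original_model_inputs = keras.layers.Input(shape=(X_train.shape[1],))
    y_true_inputs = keras.layers.Input(shape=(y_train.shape[1],))
    # a_train / a_valid: precomputed per-example values of g(X_i) (hypothetical names)
    a_inputs = keras.layers.Input(shape=a_train.shape[1:])

    hidden1 = keras.layers.Dense(256, activation="relu")(original_model_inputs)
    hidden2 = keras.layers.Dense(128, activation="relu")(hidden1)
    # sigmoid keeps yPred in (0, 1) so its log is defined; the unit count
    # is not called K here because K is the backend module
    output = keras.layers.Dense(y_train.shape[1], activation="sigmoid")(hidden2)

    def lambdaLoss(x):
        yTrue, yPred, alpha = x
        # K.epsilon() avoids log(0) for labels equal to 0
        return (K.log(yTrue + K.epsilon()) - K.log(yPred + K.epsilon()))**2 + alpha*yPred

    # Lambda expects a single list of tensors
    loss = Lambda(lambdaLoss)([y_true_inputs, output, a_inputs])

    # the model's output is the per-example loss itself
    model = keras.Model(inputs=[original_model_inputs, y_true_inputs, a_inputs], outputs=loss)

    def dummyLoss(true, pred):
        return pred  # pred is already the loss computed by the Lambda layer

    model.compile(loss=dummyLoss, optimizer=Adam())

    # dummy zero targets instead of None, so that dummyLoss is actually applied
    train_model = model.fit([X_train, y_train, a_train], np.zeros_like(y_train),
                            batch_size=32,
                            epochs=50,
                            validation_data=([X_valid, y_valid, a_valid], np.zeros_like(y_valid)),
                            callbacks=callbacks)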