I have a binary classification problem where each data point has three time series, as follows:

```
data_point, time_series1, time_series2, time_series3, label
d1, [0.1, ....., 0.5], [0.8, ....., 0.6], [0.8, ....., 0.8], 1
```

and so on
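A Keras LSTM expects input of shape (samples, timesteps, features). Below is a minimal sketch of turning rows like the ones above into that layout. It assumes every series of a data point has the same length, and the toy rows and the `to_lstm_input` helper are hypothetical. Stacking the three series gives three features per timestep, so `input_shape=(25,4)` in the model code would presumably be `(25, 3)` unless there is a fourth per-timestep feature not shown here.

```python
import numpy as np

# Hypothetical toy rows mirroring the layout above: (id, series1, series2, series3, label).
# Real data would use the full series (e.g. 25 timesteps each), all of equal length.
rows = [
    ("d1", [0.1, 0.2, 0.5], [0.8, 0.7, 0.6], [0.8, 0.9, 0.8], 1),
    ("d2", [0.3, 0.1, 0.0], [0.2, 0.4, 0.1], [0.5, 0.5, 0.4], 0),
]

def to_lstm_input(rows):
    """Stack the three series of each row into shape (samples, timesteps, features)."""
    # np.stack(axis=-1) places the series side by side: one feature column per series.
    X = np.array(
        [np.stack([s1, s2, s3], axis=-1) for _, s1, s2, s3, _ in rows],
        dtype="float32",
    )
    y = np.array([label for *_, label in rows], dtype="float32")
    return X, y

X, y = to_lstm_input(rows)
print(X.shape)  # (2, 3, 3): 2 samples, 3 timesteps, 3 features
```

With this layout, feature index 0 is `time_series1`, index 1 is `time_series2` and index 2 is `time_series3`, which is what makes per-series importance questions answerable later.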

I am using the following code to perform my binary classification.

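```python
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import LSTM, Dense

model = Sequential()
# LSTM over sequences of 25 timesteps with 4 features per timestep
model.add(LSTM(100, input_shape=(25, 4)))
model.add(Dense(50))
# Single sigmoid unit outputs the probability of label 1
model.add(Dense(1, activation='sigmoid'))
model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])
```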

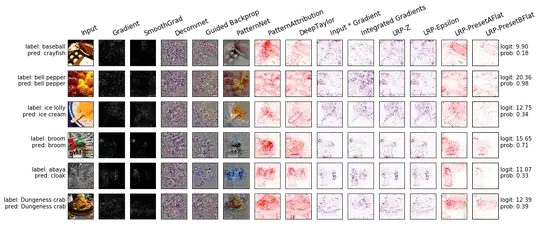

Currently I am treating my classifier as a black box, so I would like to dig deeper and see what happens inside.

More specifically, I would like to know which important features the LSTM uses to classify my data points. In particular, I want to answer the following questions:

- Which time series (i.e., time_series1, time_series2, time_series3) was most influential in the classification?
- What features are extracted from the most influential time series?
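One model-agnostic way to approach both questions is sketched below; it is a swapped-in technique, not something built into the LSTM. Permutation importance shuffles one time series across data points and measures the drop in accuracy. Occlusion masks one time window of a series and measures how much the predicted probability changes; since an LSTM learns its features implicitly, this answers the narrower question of which time windows a prediction depends on. The helper names `channel_permutation_importance` and `window_occlusion`, the window size, the fill value of 0 and the `toy_predict` function are all hypothetical; with the Keras model above, `model.predict` could presumably be passed as `predict_fn`.

```python
import numpy as np

def channel_permutation_importance(predict_fn, X, y, n_repeats=10, seed=0):
    """Mean drop in accuracy when one channel (time series) is shuffled across samples.

    predict_fn maps (samples, timesteps, channels) to probabilities of shape
    (samples,) or (samples, 1), e.g. a Keras model's predict method.
    """
    rng = np.random.default_rng(seed)

    def accuracy(Xa):
        p = np.asarray(predict_fn(Xa)).reshape(-1)
        return np.mean((p >= 0.5) == (y >= 0.5))

    baseline = accuracy(X)
    drops = np.zeros(X.shape[-1])
    for c in range(X.shape[-1]):
        for _ in range(n_repeats):
            Xp = X.copy()
            # Swap whole series of channel c between samples, breaking its link to the label
            Xp[:, :, c] = Xp[rng.permutation(len(X)), :, c]
            drops[c] += baseline - accuracy(Xp)
    return drops / n_repeats

def window_occlusion(predict_fn, X, channel, window=5, fill=0.0):
    """Mean absolute change in predicted probability when a time window of one channel is masked."""
    base = np.asarray(predict_fn(X)).reshape(-1)
    scores = []
    for start in range(0, X.shape[1], window):
        Xo = X.copy()
        Xo[:, start:start + window, channel] = fill
        p = np.asarray(predict_fn(Xo)).reshape(-1)
        scores.append(np.mean(np.abs(p - base)))
    return np.array(scores)

# Toy check: the "model" only looks at channel 0, timesteps 5 to 9.
rng = np.random.default_rng(1)
X = rng.normal(size=(200, 25, 3))
y = (X[:, 5:10, 0].mean(axis=1) > 0).astype(float)

def toy_predict(A):
    return 1.0 / (1.0 + np.exp(-10.0 * A[:, 5:10, 0].mean(axis=1)))

importances = channel_permutation_importance(toy_predict, X, y)
occlusion = window_occlusion(toy_predict, X, channel=0)
print(importances)  # large for channel 0, zero for channels 1 and 2
print(occlusion)    # non-zero only for the window covering timesteps 5 to 9
```

Permutation importance is computed on held-out data in practice, and correlated series can share or mask each other's importance, so the numbers are best read as a relative ranking rather than an exact attribution.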

I am happy to provide more details if needed.