TL; DR: The answer by Caius Jard is correct - you can join on anything, as long as it evaluates to true or false (ignoring unknown).

Unfortunately, the way you join between two tables can have drastically different performance depending on your methodology. If you join on an expression, you will usually get very poor performance. Using computed columns, materializing the intermediate result in a table, or splitting up your join conditions can all help with poor performance.

Joins are not the only place where expressions can ding you; grouping, aggregates, filters, or anything that relies on a good cardinality estimate will suffer when expressions are involved.

When I compare two methods of joining (they are functionally equivalent despite the new magic column; more on that later)

SELECT *

FROM #Build AppBuild

LEFT OUTER JOIN #Job Job

ON ( AppBuild.Name = Job.DATA_PROJECT_NAME

AND Job.DATA_PROJECT_NAME NOT LIKE 'BTG -%' )

OR ( Job.DATA_PROJECT_NAME LIKE 'BTG -%'

AND Job.JOB_DESCRIPTION = AppBuild.Name );

SELECT *

FROM #Build AppBuild

LEFT OUTER JOIN #Job Job

ON AppBuild.Name = Job.JoinOnMe;

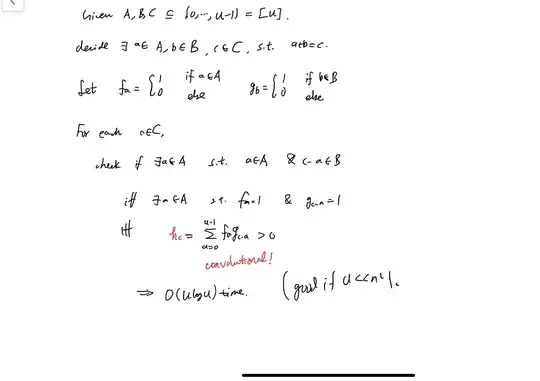

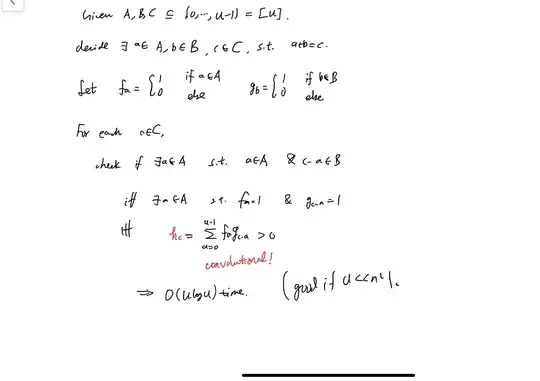

The resulting query plans have huge differences:

You'll notice that the estimated cost of the first join is much higher - but that doesn't even tell the whole story. If I actually run these two queries with ~6M rows in each table, I end up with the second one finishing in ~7 seconds, and the first one being nowhere near done after 2 minutes. This is because the join predicate was pushed down onto the #Job table's table scan:

SQL Server has no idea what percentage of records will have a DATA_PROJECT_NAME (NOT) LIKE 'BTG -%', so it picks an estimate of 1 row. This then leads it to pick a nested loop join, and a sort, and a spool, all of which really end up making things perform quite poorly for us when we get far more than 1 row out of that table scan.

The fix? Computed columns. I created my tables like so:

CREATE TABLE #Build

(

Name varchar(50) COLLATE DATABASE_DEFAULT NOT NULL

);

CREATE TABLE #Job

(

JOB_DESCRIPTION varchar(50) COLLATE DATABASE_DEFAULT NOT NULL,

DATA_PROJECT_NAME varchar(50) COLLATE DATABASE_DEFAULT NOT NULL,

JoinOnMe AS CASE WHEN DATA_PROJECT_NAME LIKE N'BTG -%' THEN DATA_PROJECT_NAME

ELSE JOB_DESCRIPTION END

);

It turns out that SQL Server will maintain statistics on JoinOnMe, even though there is an expression inside of it and this value has not been materialized anywhere. If you wanted to, you could even index the computed column.

Because we have statistics on JoinOnMe, a join on it will give a good cardinality estimate (when I tested it was exactly correct), and thus a good plan.

If you don't have the freedom to alter the table, then you should at least split the join into two joins. It may seem counter intuitive, but if you're ORing together a lot of conditions for an outer join, SQL Server will usually get a better estimate (and thus better plans) if each OR condition is separate, and you then COALESCE the result set.

When I include a query like this:

SELECT AppBuild.Name,

COALESCE( Job.JOB_DESCRIPTION, Job2.JOB_DESCRIPTION ) JOB_DESCRIPTION,

COALESCE( Job.DATA_PROJECT_NAME, Job2.DATA_PROJECT_NAME ) DATA_PROJECT_NAME

FROM #Build AppBuild

LEFT OUTER JOIN #Job Job

ON ( AppBuild.Name = Job.DATA_PROJECT_NAME

AND Job.DATA_PROJECT_NAME NOT LIKE 'BTG -%' )

LEFT OUTER JOIN #Job Job2

ON ( Job2.DATA_PROJECT_NAME LIKE 'BTG -%'

AND Job2.JOB_DESCRIPTION = AppBuild.Name );

It is also 0% of total cost, relative to the first query. When compared against joining on the computed column, the difference is about 58%/42%

Here is how I created the tables and populated them with test data

DROP TABLE IF EXISTS #Build;

DROP TABLE IF EXISTS #Job;

CREATE TABLE #Build

(

Name varchar(50) COLLATE DATABASE_DEFAULT NOT NULL

);

CREATE TABLE #Job

(

JOB_DESCRIPTION varchar(50) COLLATE DATABASE_DEFAULT NOT NULL,

DATA_PROJECT_NAME varchar(50) COLLATE DATABASE_DEFAULT NOT NULL,

JoinOnMe AS CASE WHEN DATA_PROJECT_NAME LIKE N'BTG -%' THEN DATA_PROJECT_NAME

ELSE JOB_DESCRIPTION END

);

INSERT INTO #Build

( Name )

SELECT ROW_NUMBER() OVER ( ORDER BY ( SELECT NULL ))

FROM master.dbo.spt_values

CROSS APPLY master.dbo.spt_values SV2;

INSERT INTO #Job

( JOB_DESCRIPTION, DATA_PROJECT_NAME )

SELECT ROW_NUMBER() OVER ( ORDER BY ( SELECT NULL )),

CASE WHEN ROUND( RAND(), 0 ) = 1 THEN CAST(ROW_NUMBER() OVER ( ORDER BY ( SELECT NULL )) AS nvarchar(20))

ELSE 'BTG -1' END

FROM master.dbo.spt_values SV

CROSS APPLY master.dbo.spt_values SV2;