I have a text file with 6 columns and 200 million rows, and none of the columns is unique. I'd like to import it into a table in SQL Server and define an identity column as the primary key.

Therefore I created the following table first:

CREATE TABLE dbo.Inventory
(
    ProductID NUMERIC(18,3) NOT NULL,
    RegionID NUMERIC(18,3) NULL,
    ShopCode INT NULL,
    QTY FLOAT NULL,
    OLAPDate VARCHAR(6) NULL,
    R FLOAT NULL,
    ID BIGINT NOT NULL PRIMARY KEY IDENTITY(1,1)
)

Then I used the following command to import the text file into the table:

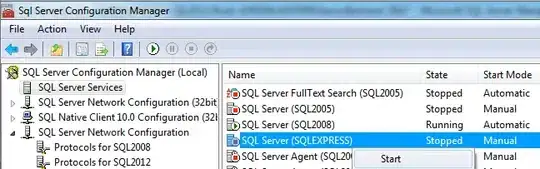

bcp ETLDB.dbo.Inventory in D:\SCM\R.txt -T -b 10000 -t "," -c -e D:\SCM\Errors.txt

and I got these errors:

I am not sure whether the errors are caused by the identity ID column, which is in my table design but not in my original text file. When I remove the identity column from the table, bcp works fine. But I want bcp to generate the identity values while importing the file into the table.
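One workaround that might apply here, shown only as an untested sketch: load through a view that exposes just the six columns present in the file, so that SQL Server fills in ID on its own. The view name dbo.InventoryImport is hypothetical, and the sketch assumes the fields in the file appear in the same order as the columns listed in the view.

```sql
-- Hypothetical view (the name is an assumption); it hides the ID column
-- so the six fields in the text file line up with six target columns.
CREATE VIEW dbo.InventoryImport
AS
SELECT ProductID, RegionID, ShopCode, QTY, OLAPDate, R
FROM dbo.Inventory;
GO

-- Then point bcp at the view instead of the table (run from a command prompt):
-- bcp ETLDB.dbo.InventoryImport in D:\SCM\R.txt -T -b 10000 -t "," -c -e D:\SCM\Errors.txt
```

Because the view omits ID, the identity values would be generated by the table during the insert rather than read from the file.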

The sample text file:

Any help would be appreciated.