I am working on a deep learning model in which I am trying to combine the outputs of two different models.

The overall structure is as follows:

The first model takes one matrix as input, for example of shape [10 x 30]:

#input 1

input_text = layers.Input(shape=(1,), dtype="string")

embedding = ElmoEmbeddingLayer()(input_text)

model_a = Model(inputs=[input_text], outputs=embedding)

# output shape: [10, 50]

The second model takes two input matrices:

X_in = layers.Input(tensor=K.variable(np.random.uniform(0, 9, [10, 32])))

M_in = layers.Input(tensor=K.variable(np.random.uniform(-1, 1, [10, 10])))

md_1 = New_model()([X_in, M_in])  # New_model is defined elsewhere

model_s = Model(inputs=[X_in, M_in], outputs=md_1)

# output shape: [10, 50]

I want to make these two matrices trainable. In TensorFlow I was able to do this with:

matrix_a = tf.get_variable(name='matrix_a',

shape=[10,10],

dtype=tf.float32,

initializer=tf.constant_initializer(np.array(matrix_a)), trainable=True)
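For comparison, a Keras layer can own a trainable matrix created with `add_weight` and a constant initializer, which is roughly the counterpart of the `tf.get_variable(..., trainable=True)` call above. This is a minimal sketch assuming TensorFlow 2.x with `tf.keras`; the `TrainableMatrix` class is a hypothetical helper, not part of Keras. Its `call` simply returns the matrix, so the input tensor only serves to attach the layer to the model graph.

```python
import numpy as np
import tensorflow as tf


class TrainableMatrix(tf.keras.layers.Layer):
    """Hypothetical helper: exposes one trainable matrix initialised from a NumPy array."""

    def __init__(self, init_value, **kwargs):
        super().__init__(**kwargs)
        self.init_value = np.asarray(init_value, dtype="float32")

    def build(self, input_shape):
        # Keras-side analogue of tf.get_variable(..., initializer=tf.constant_initializer(...), trainable=True)
        self.matrix = self.add_weight(
            name="matrix",
            shape=self.init_value.shape,
            initializer=tf.keras.initializers.Constant(self.init_value),
            trainable=True,
        )

    def call(self, inputs):
        # The input only connects the layer to the graph; the output is the matrix itself.
        return tf.identity(self.matrix)


matrix_a = np.random.uniform(-1, 1, size=(10, 10)).astype("float32")
layer = TrainableMatrix(matrix_a, name="matrix_a")
out = layer(tf.zeros((1, 1)))  # any tensor works as the trigger input
print(out.shape, len(layer.trainable_weights))
```

Because the matrix is a layer weight rather than an `Input(tensor=...)`, it is listed in `trainable_weights` and the optimizer updates it during `fit`.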

I have no clue how to make matrix_a and matrix_b trainable in Keras, or how to merge the outputs of both networks and then feed in the inputs.

I went through this question, but couldn't find an answer because its problem statement is different from mine.

What I have tried so far:

#input 1

input_text = layers.Input(shape=(1,), dtype="string")

embedding = ElmoEmbeddingLayer()(input_text)

model_a = Model(inputs=[input_text], outputs=embedding)

# output shape: [10, 50]

X_in = layers.Input(tensor=K.variable(np.random.uniform(0, 9, [10, 10])))

M_in = layers.Input(tensor=K.variable(np.random.uniform(-1, 1, [10, 100])))

md_1 = New_model()([X_in, M_in])  # New_model is defined elsewhere

model_s = Model(inputs=[X_in, M_in], outputs=md_1)

# output shape: [10, 50]

# transpose the second model's output

transpose = Lambda(lambda x: K.transpose(x))

agglayer = transpose(md_1)

# matrix-multiply the first model's output by the transposed second output

dott = Lambda(lambda x: K.dot(x[0], x[1]))

kmean_layer = dott([embedding, agglayer])

# input

final_model = Model(inputs=[input_text, X_in, M_in], outputs=kmean_layer, name='Final_output')

final_model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

final_model.summary()
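The transpose + dot pair above is an ordinary matrix product, not a concatenation. A NumPy sketch with random stand-in values (not real model outputs) shows the shapes involved: [10, 50] × [50, 10] gives [10, 10], where entry (i, j) is the dot product of row i of the first output with row j of the second.

```python
import numpy as np

rng = np.random.default_rng(0)
embedding = rng.standard_normal((10, 50))  # stand-in for model a's output, shape [10, 50]
md_1 = rng.standard_normal((10, 50))       # stand-in for model b's output, shape [10, 50]

# Lambda(K.transpose) followed by Lambda(K.dot) computes embedding @ md_1.T
kmean = embedding @ md_1.T
print(kmean.shape)  # (10, 10)

# Each entry pairs one row of embedding with one row of md_1
assert np.isclose(kmean[2, 7], np.dot(embedding[2], md_1[7]))
```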

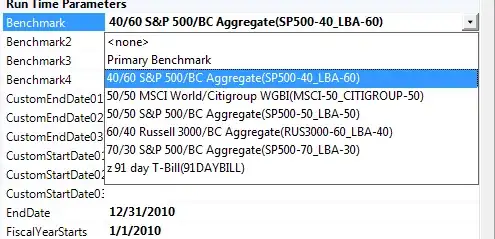

Overview of the model:

Update:

Model b:

X = np.random.uniform(0, 9, [10, 32])

M = np.random.uniform(-1, 1, [10, 10])

X_in = layers.Input(tensor=K.variable(X))

M_in = layers.Input(tensor=K.variable(M))

layer_one = Model_b()([M_in, X_in])

dropout2 = Dropout(dropout_rate)(layer_one)

layer_two = Model_b()([dropout2, X_in])  # feed the dropout output, otherwise dropout2 is unused

model_b_ = Model([X_in, M_in], layer_two, name='model_b')
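A `Dropout` layer only changes the model when its output is passed on to the next layer. This small sketch, assuming TensorFlow 2.x `tf.keras`, shows what it does once it is in the path: it is the identity at inference, while in training each entry is either zeroed or scaled by 1 / (1 - rate).

```python
import numpy as np
import tensorflow as tf

dropout = tf.keras.layers.Dropout(0.5)
x = tf.ones((1, 1000))

at_inference = dropout(x, training=False).numpy()
in_training = dropout(x, training=True).numpy()

# Inference: input passes through unchanged.
print(np.all(at_inference == 1.0))
# Training: every entry is either dropped (0) or scaled by 1 / (1 - 0.5) = 2.
print(np.all(np.isclose(in_training, 0.0) | np.isclose(in_training, 2.0)))
```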

Model a:

length = 150

dic_size = 100

embed_size = 12

input_text = Input(shape=(length,))

embedding = Embedding(dic_size, embed_size)(input_text)

embedding = LSTM(5)(embedding)

embedding = Dense(10)(embedding)

model_a = Model(input_text, embedding, name='model_a')
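A quick shape check for model a, assuming TensorFlow 2.x and random token ids as input: it returns one 10-dimensional vector per sequence, i.e. shape (batch, 10). This constrains the merge with model b, since `tf.matmul(a, b, transpose_b=True)` requires the last dimensions of `a` and `b` to match.

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras import layers, Model

length, dic_size, embed_size = 150, 100, 12

input_text = layers.Input(shape=(length,))
embedding = layers.Embedding(dic_size, embed_size)(input_text)
embedding = layers.LSTM(5)(embedding)
embedding = layers.Dense(10)(embedding)
model_a = Model(input_text, embedding, name="model_a")

# Random token ids in [0, dic_size) for a batch of 4 sequences
tokens = np.random.randint(0, dic_size, size=(4, length))
out = model_a.predict(tokens, verbose=0)
print(out.shape)  # (4, 10)
```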

I am merging like this:

mult = Lambda(lambda x: tf.matmul(x[0], x[1], transpose_b=True))([embedding, model_b_.output])

final_model = Model(inputs=[model_b_.input[0],model_b_.input[1],model_a.input], outputs=mult)
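One way to sanity-check the merge is to replace model b with something whose shape is known. This sketch assumes TensorFlow 2.x; the `TrainableMatrix` layer and `n_rows` are hypothetical stand-ins for `Model_b`, whose definition is not shown. Model a produces (batch, 10) and the stand-in produces a trainable [n_rows, 10] matrix, so `tf.matmul(..., transpose_b=True)` yields (batch, n_rows), and a single `fit` step shows that the matrix is actually updated.

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras import layers, Model

length, dic_size, embed_size = 150, 100, 12
n_rows = 10  # hypothetical number of rows produced by model b


class TrainableMatrix(layers.Layer):
    """Hypothetical stand-in for model b: one trainable [n_rows, 10] matrix."""

    def __init__(self, init_value, **kwargs):
        super().__init__(**kwargs)
        self.init_value = np.asarray(init_value, dtype="float32")

    def build(self, input_shape):
        self.matrix = self.add_weight(
            name="matrix",
            shape=self.init_value.shape,
            initializer=tf.keras.initializers.Constant(self.init_value),
            trainable=True,
        )

    def call(self, inputs):
        return tf.identity(self.matrix)  # input only attaches the layer to the graph


# Model a, as in the question: one 10-dimensional vector per text
input_text = layers.Input(shape=(length,))
embedding = layers.Embedding(dic_size, embed_size)(input_text)
embedding = layers.LSTM(5)(embedding)
embedding = layers.Dense(10)(embedding)

# Model b stand-in: [n_rows, 10], no batch dimension
init = np.random.uniform(-1, 1, size=(n_rows, 10))
rows = TrainableMatrix(init, name="model_b_stand_in")(input_text)

# (batch, 10) x (n_rows, 10)^T -> (batch, n_rows)
scores = layers.Lambda(lambda t: tf.matmul(t[0], t[1], transpose_b=True))([embedding, rows])

final_model = Model(inputs=input_text, outputs=scores, name="final_output")
final_model.compile(optimizer="adam", loss="mse")

tokens = np.random.randint(0, dic_size, size=(3, length))
preds = final_model.predict(tokens, verbose=0)
print(preds.shape)  # (3, 10)

# One training step on dummy targets: the stand-in matrix should change.
stand_in = final_model.get_layer("model_b_stand_in")
before = stand_in.get_weights()[0].copy()
final_model.fit(tokens, np.zeros((3, n_rows)), epochs=1, verbose=0)
after = stand_in.get_weights()[0]
print(not np.allclose(before, after))  # True: the matrix was trained
```

If both operands instead carried a batch dimension, as two (batch, 10) outputs would, the same 2-D `tf.matmul` would return a (batch, batch) matrix that pairs different samples with each other.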

Is this the right way to matrix-multiply the outputs of two Keras models?

I am not sure whether I am merging the outputs correctly, or whether the model itself is correct.

I would greatly appreciate any advice on how to make those matrices trainable, and how to merge the models' outputs correctly and then feed in the inputs.

Thanks in advance!