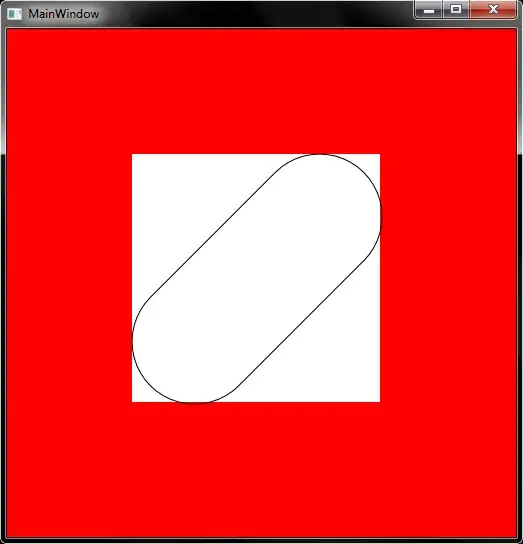

I was trying to draw pixel-perfect bitmaps using quads in OpenGL, and to my surprise a very important row of pixels was missing (in the image, green is OK and red is bad).

Each quad is 30x30 px, with the red 2x2 px block in the top-left corner.

- Can someone explain what is happening?

- How does OpenGL decide where to put pixels?

- Which correction should I choose?

The texture uses GL_NEAREST.

    glTexEnvi(GL_TEXTURE_ENV, GL_TEXTURE_ENV_MODE, GL_REPLACE);

I don't use glViewport or glOrtho. Instead, I pass the screen dimensions to the shaders as uniforms:

    uniform float cw;
    uniform float ch;
    ...
    gl_Position = vec4(-1.0f + 2.0f*(in_pos.x + in_offset.x)/cw,
                        1.0f - 2.0f*(in_pos.y + in_offset.y)/ch,
                        0.0f, 1.0f);
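To sanity-check the mapping, the same arithmetic can be reproduced on the CPU. This is only a minimal Python sketch: `pixel_to_ndc` and `ndc_to_window` are hypothetical helper names, `x` and `y` stand for the already summed `in_pos + in_offset`, and the viewport is assumed to cover the whole `cw` x `ch` window with its origin at the bottom-left (the usual OpenGL convention).

```python
def pixel_to_ndc(x, y, cw, ch):
    """Same formula as the vertex shader: top-left pixel coords -> NDC."""
    return (-1.0 + 2.0 * x / cw, 1.0 - 2.0 * y / ch)


def ndc_to_window(xn, yn, cw, ch):
    """Standard viewport transform, assuming a viewport of (0, 0, cw, ch).

    Window coordinates have their origin at the bottom-left corner.
    """
    return ((xn + 1.0) * cw / 2.0, (yn + 1.0) * ch / 2.0)


if __name__ == "__main__":
    cw, ch = 800.0, 600.0  # assumed window size, for illustration only
    for px in [(0.0, 0.0), (30.0, 30.0), (800.0, 600.0)]:
        ndc = pixel_to_ndc(*px, cw, ch)
        win = ndc_to_window(*ndc, cw, ch)
        print(px, "->", ndc, "->", win)
```

Under these assumptions, an integer input such as (0, 0) lands on the corner of a pixel in window space (here the top-left corner of the window), not on a pixel center; y is flipped because the shader measures it from the top.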

But that doesn't seem to be the source of the problem, because when I add (0.5, 0.5) in the shader (equivalent to the third case), the problem goes away...
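One way to reason about the half-pixel offset is a simplified 1D model of rasterization. OpenGL samples fragments at pixel centers, which sit at half-integer window coordinates (0.5, 1.5, ...). The Python sketch below is an illustration under assumptions, not a diagnosis of this particular case: `covered_columns` is a hypothetical helper, and the half-open span `[x0, x1)` stands in for the real tie-breaking rules, which apply when an edge passes exactly through a pixel center.

```python
import math


def covered_columns(x0, x1):
    """Pixel columns i whose center i + 0.5 lies in the half-open span [x0, x1).

    Simplified model: real rasterizers apply their own fill rule when an
    edge passes exactly through a pixel center.
    """
    first = math.ceil(x0 - 0.5)  # smallest i with i + 0.5 >= x0
    last = math.ceil(x1 - 0.5)   # smallest i with i + 0.5 >= x1 (exclusive)
    return list(range(first, last))


if __name__ == "__main__":
    eps = 1e-6  # stand-in for a small floating-point error
    # Edges on pixel boundaries: stable under small errors.
    print(covered_columns(0.0, 30.0)[:2], covered_columns(eps, 30.0 + eps)[:2])
    # Edges through pixel centers: a tiny error shifts the whole quad.
    print(covered_columns(0.5, 30.5)[:2], covered_columns(0.5 + eps, 30.5 + eps)[:2])
```

In this model a 30 px quad with edges on integer coordinates always covers columns 0 to 29. With edges at 0.5 and 30.5, the result depends on how the exact ties are resolved, so a tiny rounding error moves the quad over by one column.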

Ok, now I realize that the third option doesn't work either: