I have installed Keras with GPU support in R, backed by TensorFlow with GPU support. I installed it with these steps:

If I run the Bosting housing example code from the book Deep learning with R, I receive this screen:

Can I conclude that the code runs on the GPU?

Or is this line from the picture above giving an error:

GPU libraries are statically linked, skip dlopen check.

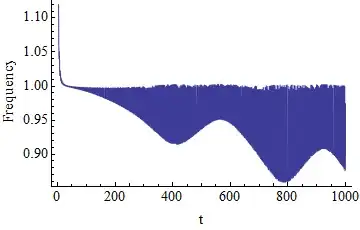

While the code runs, GPU utilization stays at only about 3%, while CPU utilization is around 20-25%. The code does NOT run any faster than it did before I installed GPU support.

Thank you!