I am trying to find an answer to the following problem:

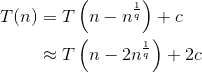

T(n) = T(n - n^(1/q)), q > 2

T(c) = O(1), for a constant c

What I am interested in are recursive problems, which do not branch and do not have any auxiliary calculations. Something similar to this problem. The difference is that the amount by which my problem is going to be reduced also gets smaller in each recursive call. I've tried to solve this via an iterative approach leading to this:

T(n) = T(n - n^(1/q) =

= T[n - n^(1/q) - [n - n^(1/q)]^(1/q)] =

= ....

for which I cannot really find a reasonable expansion. I therefor tried the substitution method for T(n) \in O(n) and T(n) \in O(log(n)), which both hold, if I haven't made any mistake.

Given that I can now only assume that T(n) = T(n - n^(1/q)) \in O(log(n)), for q > 2, which seems reasonable, since it is very similar to binary search.

Sadly I haven't really covered such recursions, neither do I have a good idea of applications following such recursions.

Given all of that my questions are:

Similar problems are the following: T(n) = x + T(n-log(n)) and T(n) = T(n^(1/2)) + T(n-n^(1/2)) + n

Edit: Removed wrong expansion.