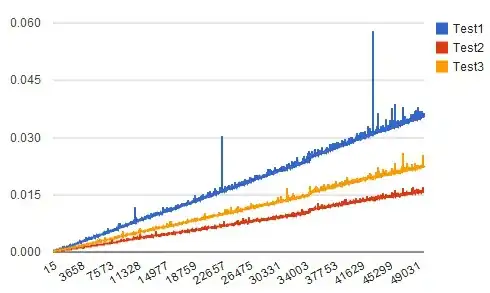

Two different ways of getting feature importance from XGBoost give me two different most important features. Which one should I believe?

When should each method be used? I am confused.

Setup

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

%matplotlib inline

import seaborn as sns

import xgboost as xgb

df = sns.load_dataset('mpg')

df = df.drop(['name','origin'],axis=1)

X = df.iloc[:,1:]

y = df.iloc[:,0]

NumPy arrays

# fit the model

model_xgb_numpy = xgb.XGBRegressor(n_jobs=-1,objective='reg:squarederror')

model_xgb_numpy.fit(X.to_numpy(), y.to_numpy())

plt.bar(range(len(model_xgb_numpy.feature_importances_)), model_xgb_numpy.feature_importances_)

Pandas dataframe

# fit the model

model_xgb_pandas = xgb.XGBRegressor(n_jobs=-1,objective='reg:squarederror')

model_xgb_pandas.fit(X, y)

axsub = xgb.plot_importance(model_xgb_pandas)

Problem

The NumPy approach (`feature_importances_`) shows the 0th feature, `cylinders`, as the most important. The pandas approach (`xgb.plot_importance`) shows `model_year` as the most important. Which one is the correct most important feature?