I am training a neural network (in C++, without any additional libraries) to learn a random wiggly function:

$$f(x) = 0.2 + 0.4x^2 + 0.3x\sin(15x) + 0.05\cos(50x)$$

Plotted in Python as:

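    import math
    import matplotlib.pyplot as plt

    x, y = [], []
    lim = 500
    for i in range(lim):
        x.append(i)
        p = 2 * math.pi * i / lim  # sweep p over [0, 2*pi)
        y.append(0.2 + 0.4 * (p * p) + 0.3 * p * math.sin(15 * p) + 0.05 * math.cos(50 * p))
    plt.plot(x, y)
    plt.show()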

which gives the following curve:

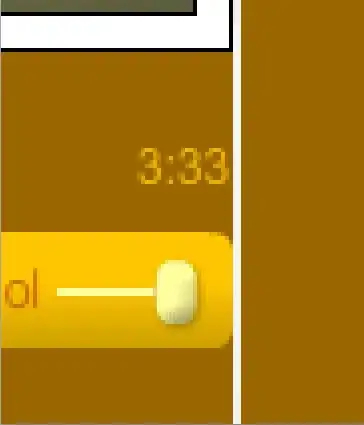

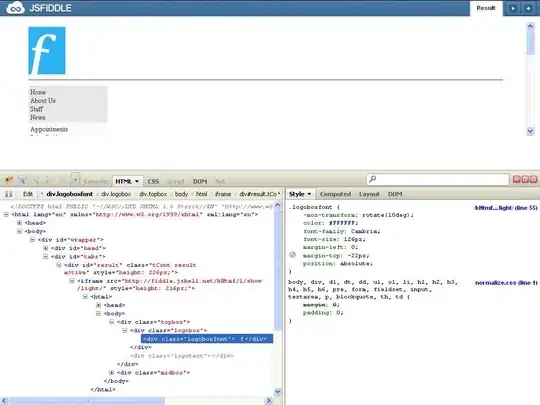

The same neural network approximated the sine function quite well with a single hidden layer (5 neurons) and tanh activation. However, I cannot work out what is going wrong with the wiggly function, even though the mean squared error does seem to decrease (the error has been scaled up by 100 for visibility):

And this is the expected (GREEN) vs predicted (RED) graph.

I suspect the normalization is at fault. This is how I did it.

The training data is generated as follows:

    int numTrainingSets = 100;
    double MAXX = -9999999999999999;

    for (int i = 0; i < numTrainingSets; i++)
    {
        double p = (2*PI*(double)i/numTrainingSets);
        training_inputs[i][0] = p; //INSERTING DATA INTO i'th EXAMPLE, 0th INPUT (Single input)
        training_outputs[i][0] = 0.2+0.4*pow(p, 2)+0.3*p*sin(15*p)+0.05*cos(50*p); //Single output

        ///FINDING NORMALIZING FACTOR (IN INPUT AND OUTPUT DATA)
        for(int m=0; m<numInputs; ++m)
            if(MAXX < training_inputs[i][m])
                MAXX = training_inputs[i][m]; //FINDING MAXIMUM VALUE IN INPUT DATA
        for(int m=0; m<numOutputs; ++m)
            if(MAXX < training_outputs[i][m])
                MAXX = training_outputs[i][m]; //FINDING MAXIMUM VALUE IN OUTPUT DATA

        ///NORMALIZE BOTH INPUT & OUTPUT DATA USING THIS MAXIMUM VALUE
        ////DO THIS FOR INPUT TRAINING DATA
        for(int m=0; m<numInputs; ++m)
            training_inputs[i][m] /= MAXX;
        ////DO THIS FOR OUTPUT TRAINING DATA
        for(int m=0; m<numOutputs; ++m)
            training_outputs[i][m] /= MAXX;
    }

This is what the model trains on. The validation/test data is generated as follows:

    int numTestSets = 500;

    for (int i = 0; i < numTestSets; i++)
    {
        //NORMALIZING TEST DATA USING THE SAME "MAXX" VALUE
        double p = (2*PI*i/numTestSets)/MAXX;
        x.push_back(p); //FORMS THE X-AXIS FOR PLOTTING

        ///Actual Result
        double res = 0.2+0.4*pow(p, 2)+0.3*p*sin(15*p)+0.05*cos(50*p);
        y1.push_back(res); //FORMS THE GREEN CURVE FOR PLOTTING

        ///Predicted Value
        double temp[1];
        temp[0] = p;
        y2.push_back(MAXX*predict(temp)); //FORMS THE RED CURVE FOR PLOTTING, scaled up to de-normalize
    }

Is this normalization correct? If so, what else could be going wrong? If not, what should be done instead?
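
For comparison, here is a minimal sketch of one common alternative arrangement. It is not a confirmed fix for the problem above. It assumes the scale factors are computed once over the complete data set, in a separate pass, and that inputs and outputs each get their own factor. The names `target`, `Scale`, `maxAbs` and `makeTrainingData` are hypothetical and do not appear in the original code, and flat `std::vector<double>` containers stand in for the `training_inputs` and `training_outputs` arrays.

```cpp
#include <algorithm>
#include <cmath>
#include <vector>

// Target function from the question, evaluated on the raw (unnormalized) input.
double target(double p) {
    return 0.2 + 0.4 * p * p + 0.3 * p * std::sin(15 * p) + 0.05 * std::cos(50 * p);
}

// Hypothetical container for the two scale factors.
struct Scale {
    double in;   // largest |input| seen in the training set
    double out;  // largest |output| seen in the training set
};

// Largest absolute value in v (0 for an empty vector).
double maxAbs(const std::vector<double>& v) {
    double m = 0.0;
    for (double e : v) m = std::max(m, std::fabs(e));
    return m;
}

// Fills inputs/outputs with n normalized samples and returns the scales used.
// Assumes n > 1, so that both scale factors are non-zero.
Scale makeTrainingData(int n, std::vector<double>& inputs, std::vector<double>& outputs) {
    const double PI = std::acos(-1.0);
    inputs.assign(n, 0.0);
    outputs.assign(n, 0.0);

    // Pass 1: generate the raw data.
    for (int i = 0; i < n; ++i) {
        inputs[i] = 2 * PI * i / n;
        outputs[i] = target(inputs[i]);
    }

    // Pass 2: fix the scale factors once, over the whole data set.
    Scale s{maxAbs(inputs), maxAbs(outputs)};

    // Pass 3: normalize every sample with the same, final factors.
    for (int i = 0; i < n; ++i) {
        inputs[i] /= s.in;
        outputs[i] /= s.out;
    }
    return s;
}
```

Under these assumptions, the test loop would compute the expected value as `target(p)` on the raw `p`, pass `p / s.in` to `predict`, and multiply the prediction by `s.out` before plotting it against the expected curve.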