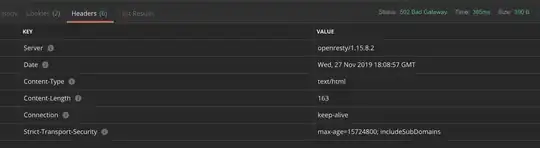

I have a GET request URL for a service on my Kubernetes cluster that is ~9k characters long, and the request seems to get stuck in the Kubernetes ingress. When I call the URL from within the Docker container, or from another container in the cluster, it works fine. However, when I go through the domain name I get the following response header:

3 Answers

I think the parameter you need to modify is the client body buffer size (`client-body-buffer-size`):

Sets the buffer size for reading the client request body per location. If the request body is larger than the buffer, the whole body or only part of it is written to a temporary file. By default, the buffer size is equal to two memory pages: 8K on x86, other 32-bit platforms, and x86-64, and usually 16K on other 64-bit platforms. This annotation is applied to each location provided in the Ingress rule.

```yaml
# Alternative example values (set only one of them):
nginx.ingress.kubernetes.io/client-body-buffer-size: "1000" # 1000 bytes
nginx.ingress.kubernetes.io/client-body-buffer-size: 1k # 1 kilobyte
nginx.ingress.kubernetes.io/client-body-buffer-size: 1K # 1 kilobyte
nginx.ingress.kubernetes.io/client-body-buffer-size: 1m # 1 megabyte
```

So you need to add this annotation under `metadata.annotations` of your Ingress resource.
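The annotation values above use nginx's size syntax: a bare number is bytes, `k`/`K` means kilobytes and `m`/`M` means megabytes, with 1024-based multipliers. As a quick sanity check of what a value works out to, here is a minimal Bash sketch; the function name `nginx_size_to_bytes` is hypothetical and it only handles the forms shown in the examples.

```shell
#!/usr/bin/env bash
# Hypothetical helper: convert an nginx size value ("1000", "1k", "1K", "1m")
# to bytes, using nginx's 1024-based k/m suffixes.
nginx_size_to_bytes() {
  local v="$1"
  case "$v" in
    *[kK]) echo $(( 10#${v%?} * 1024 )) ;;         # kilobytes
    *[mM]) echo $(( 10#${v%?} * 1024 * 1024 )) ;;  # megabytes
    *)     echo $(( 10#$v )) ;;                    # plain bytes
  esac
}

for value in 1000 1k 1K 1m; do
  printf '%s -> %s bytes\n' "$value" "$(nginx_size_to_bytes "$value")"
done
```

To set the annotation on an existing Ingress without editing its YAML, `kubectl annotate ingress <name> nginx.ingress.kubernetes.io/client-body-buffer-size=1m --overwrite` should also work, where `<name>` is your Ingress's name.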


We ran into a similar issue and this did not help. Even increasing the buffer sizes through the nginx ConfigMap did not work. – alexzimmer96 Apr 22 '20 at 11:48

In my case, I had to set `http2_max_header_size` and `http2_max_field_size` in my Ingress `server-snippet` annotation. For example:

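```yaml
apiVersion: networking.k8s.io/v1  # the original used extensions/v1beta1, removed in Kubernetes 1.22
kind: Ingress
metadata:
  annotations:
    # Raw nginx directives added to the generated server block
    nginx.ingress.kubernetes.io/server-snippet: |
      http2_max_header_size 16k;
      http2_max_field_size 16k;
  # name, namespace and spec omitted; keep your existing values
```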

I was getting ERR_CONNECTION_CLOSED and ERR_FAILED in Google Chrome and "empty response" using curl, but the backend would work if accessed directly from the cluster network.

Setting `client-header-buffer-size` or `large-client-header-buffers` in the ingress controller ConfigMap didn't seem to work for me either, but I noticed that curl succeeded when forced to use HTTP/1.1 (`curl --http1.1`), which pointed to the HTTP/2 limits.
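To reproduce the HTTP/1.1 vs HTTP/2 comparison described above, you need a URL of roughly the question's length. A minimal Bash sketch, assuming a hypothetical query parameter `q` and a hypothetical host `app.example.com`: `long_path` (also hypothetical) builds a path of exactly N characters, and the commented `curl` calls request it through the ingress over each protocol.

```shell
#!/usr/bin/env bash
# Print a request path of exactly N characters: "/?q=" followed by 'a's.
# The parameter name "q" is hypothetical; use one your service accepts.
long_path() {
  local n="$1"
  local prefix="/?q="
  local pad=$(( n - ${#prefix} ))
  if (( pad < 0 )); then pad=0; fi
  printf '%s' "$prefix"
  # printf pads an empty string to $pad spaces; tr turns them into 'a's
  printf '%*s' "$pad" '' | tr ' ' 'a'
}

# Usage against the ingress (hypothetical host), printing only the status code.
# curl reports 000 when the server closes the connection without a reply.
#   url="https://app.example.com$(long_path 9000)"
#   curl -sS -o /dev/null -w '%{http_code}\n' --http1.1 "$url"
#   curl -sS -o /dev/null -w '%{http_code}\n' --http2   "$url"
```

If the HTTP/1.1 request succeeds while the HTTP/2 one fails, the HTTP/2 header limits are the likely culprit.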


- Find the ConfigMap name in the NGINX ingress controller pod description:

```shell
kubectl -n test-namespace describe pods/test-nginx-ingress-controller-584dd58494-d8fqr | grep configmap
# --configmap=test-namespace/test-nginx-ingress-controller
```

Note: In my case, the namespace is `test-namespace` and the ConfigMap name is `test-nginx-ingress-controller`.

- Create a ConfigMap YAML file:

```shell
cat << EOF > test-nginx-ingress-controller-configmap.yaml
kind: ConfigMap
apiVersion: v1
metadata:
  name: test-nginx-ingress-controller
  namespace: test-namespace
data:
  large-client-header-buffers: "4 16k"
EOF
```

Note: Replace the namespace and ConfigMap name with the values you found in step 1.

- Apply the ConfigMap YAML:

```shell
kubectl apply -f test-nginx-ingress-controller-configmap.yaml
```

After a few minutes, the change is picked up by the NGINX controller pod, e.g.:

```shell
kubectl -n test-namespace exec test-nginx-ingress-controller-584dd58494-d8fqr -- cat /etc/nginx/nginx.conf | grep large
# large_client_header_buffers 4 16k;
```
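Per the nginx documentation, a request line cannot exceed the size of one `large_client_header_buffers` buffer, or nginx returns 414 (Request-URI Too Large). With the nginx default of `4 8k`, a ~9k URL therefore does not fit however many buffers there are, while `4 16k` leaves room. A rough Bash check of a path against a setting, as a sketch: `request_line_fits` is a hypothetical helper, it assumes a `GET ... HTTP/1.1` request line ending in CRLF, and it does not model HTTP/2, where different limits apply.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed (exit status 0) if "GET <path> HTTP/1.1\r\n"
# fits in ONE buffer of a large_client_header_buffers setting.
# Accepts either the ConfigMap value ("4 16k") or the rendered nginx.conf
# line ("large_client_header_buffers 4 16k;").
request_line_fits() {
  local path="$1" setting="$2"
  setting="${setting%;}"        # drop a trailing ';' from nginx.conf output
  local size="${setting##* }"   # keep the last field: the per-buffer size
  local limit
  case "$size" in
    *[kK]) limit=$(( 10#${size%?} * 1024 )) ;;
    *[mM]) limit=$(( 10#${size%?} * 1024 * 1024 )) ;;
    *)     limit=$(( 10#$size )) ;;
  esac
  local line="GET ${path} HTTP/1.1"
  (( ${#line} + 2 <= limit ))   # +2 for the CRLF ending the request line
}

path="/api?$(printf '%*s' 9000 '' | tr ' ' 'x')"   # ~9k-character path
for setting in "4 8k" "large_client_header_buffers 4 16k;"; do
  if request_line_fits "$path" "$setting"; then
    echo "fits:      $setting"
  else
    echo "too large: $setting"
  fi
done
```

For this ~9k path, the loop reports `too large` for `4 8k` and `fits` for the `16k` setting.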

Thanks to NeverEndingQueue for sharing this in "How to use ConfigMap configuration with Helm NginX Ingress controller - Kubernetes".
