I'm working on some complex sales analytics, which is very convoluted ... and boring ...

So for this question I'll use a fun, sugary metaphor: vending machines.

But my actual tables are structured the same way.

(You can assume there's plenty of indexing, constraints, etc.)

- BASIC TABLE #1 - INVENTORY

Let's say we have a table containing vending machine inventory data.

This table simply shows exactly how many of each type of candy are currently available in each vending machine.

I know, normally, there would be an ITEM_TYPE table containing rows for 'Snickers', 'Milky Way' etc. but that isn't how our tables are constructed, for multiple reasons.

In reality, it's not a product count, but instead it's pre-aggregated sales data: "Pipeline Total", "Forecast Total", etc.

So a simple table with separate columns for different "types" of totals is what I have to work with.

For this example, I also added some text columns, to demonstrate that I have to account for a variety of datatypes.

(This complicates things.)

Except for ID, all columns are nullable - this is a real issue.

As far as we're concerned, if the column is NULL, then NULL is the official value we need to use for analytics and reporting.

CREATE table "VENDING_MACHINES" (

"ID" NUMBER NOT NULL ENABLE,

"SNICKERS_COUNT" NUMBER,

"MILKY_WAY_COUNT" NUMBER,

"TWIX_COUNT" NUMBER,

"SKITTLES_COUNT" NUMBER,

"STARBURST_COUNT" NUMBER,

"SWEDISH_FISH_COUNT" NUMBER,

"FACILITIES_ADDRESS" VARCHAR2(100),

"FACILITIES_CONTACT" VARCHAR2(100),

CONSTRAINT "VENDING_MACHINES_PK" PRIMARY KEY ("ID") USING INDEX ENABLE

)

/

Example data:

INSERT INTO VENDING_MACHINES (ID, SNICKERS_COUNT, MILKY_WAY_COUNT, TWIX_COUNT,

SKITTLES_COUNT, STARBURST_COUNT, SWEDISH_FISH_COUNT,

FACILITIES_ADDRESS, FACILITIES_CONTACT)

SELECT 225, 11, 15, 14, 0, NULL, 13, '123 Abc Street', 'Steve' FROM DUAL UNION ALL

SELECT 349, NULL, 7, 3, 11, 8, 7, NULL, '' FROM DUAL UNION ALL

SELECT 481, 8, 4, 0, NULL, 14, 3, '1920 Tenaytee Way', NULL FROM DUAL UNION ALL

SELECT 576, 4, 2, 8, 4, 9, NULL, '', 'Angela' FROM DUAL
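One caveat about the sample rows above: as far as I know, Oracle stores a zero-length VARCHAR2 string as NULL, so the '' and NULL values in FACILITIES_ADDRESS and FACILITIES_CONTACT become indistinguishable once inserted. A quick check against the sample data, assuming only the four rows above are loaded:

```sql
-- Oracle treats '' in a VARCHAR2 column as NULL, so this matches both
-- machine 349 (inserted with '') and machine 481 (inserted with NULL).
SELECT ID
FROM   VENDING_MACHINES
WHERE  FACILITIES_CONTACT IS NULL
ORDER  BY ID;
```

So for reporting purposes, "empty" and NULL text values can only be treated as the same thing.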

- BASIC TABLE #2 - CHANGE LOG

Vending machines will periodically connect to the database and update their inventory records.

Maybe they update every time somebody buys something, or maybe they update every 30 minutes, or maybe they only update when someone refills the candy - honestly it doesn't matter.

What does matter is that whenever a record is inserted or updated in the VENDING_MACHINES table, a trigger fires and logs every individual change in a separate log table, VENDING_MACHINE_CHANGE_LOG.

This trigger has already been written, and it works great.

(If a column is "updated" with the same value that was already present, the change should be ignored by the trigger.)

A separate row is logged for each column that was modified in the VENDING_MACHINES table (except for ID).

Therefore, if a brand new row is inserted in the VENDING_MACHINES table (i.e. a new vending machine), eight rows will be logged in the VENDING_MACHINE_CHANGE_LOG table - one for each non-ID column in VENDING_MACHINES.

(In my real-world scenario, there are 90+ columns being tracked.

But usually only one or two columns are being updated at any given time, so it doesn't get out of hand.)

This "change log" is intended to be a permanent history of the VENDING_MACHINES table, so we won't create a foreign key constraint - if a row is deleted from VENDING_MACHINES we want to retain orphaned historical records in the change log.

Also, as far as I can tell, Oracle foreign keys don't support ON UPDATE CASCADE, so the trigger has to check for updates to the ID column and manually propagate the update throughout related tables (e.g. the change log).

CREATE table "VENDING_MACHINE_CHANGE_LOG" (

"ID" NUMBER NOT NULL ENABLE,

"CHANGE_TIMESTAMP" TIMESTAMP(6) NOT NULL ENABLE,

"VENDING_MACHINE_ID" NUMBER NOT NULL ENABLE,

"MODIFIED_COLUMN_NAME" VARCHAR2(30) NOT NULL ENABLE,

"MODIFIED_COLUMN_TYPE" VARCHAR2(30) GENERATED ALWAYS AS

(CASE "MODIFIED_COLUMN_NAME" WHEN 'FACILITIES_ADDRESS' THEN 'TEXT'

WHEN 'FACILITIES_CONTACT' THEN 'TEXT'

ELSE 'NUMBER' END) VIRTUAL NOT NULL ENABLE,

"NEW_NUMBER_VALUE" NUMBER,

"NEW_TEXT_VALUE" VARCHAR2(4000),

CONSTRAINT "VENDING_MACHINE_CHANGE_LOG_CK" CHECK

("MODIFIED_COLUMN_NAME" IN('SNICKERS_COUNT', 'MILKY_WAY_COUNT', 'TWIX_COUNT',

'SKITTLES_COUNT', 'STARBURST_COUNT', 'SWEDISH_FISH_COUNT',

'FACILITIES_ADDRESS', 'FACILITIES_CONTACT')) ENABLE,

CONSTRAINT "VENDING_MACHINE_CHANGE_LOG_PK" PRIMARY KEY ("ID") USING INDEX ENABLE,

CONSTRAINT "VENDING_MACHINE_CHANGE_LOG_UK" UNIQUE ("CHANGE_TIMESTAMP",

"VENDING_MACHINE_ID",

"MODIFIED_COLUMN_NAME") USING INDEX ENABLE

/* No foreign key, since we want this change log to be orphaned and preserved.

Also, as far as I can tell, Oracle foreign keys don't support ON UPDATE CASCADE. */

)

/
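For context, the logging behavior described above could be sketched roughly as follows. This is only an illustration of the rules (log on insert, skip no-op updates, propagate ID changes), not the actual trigger; the names VENDING_MACHINES_LOG_TRG and VENDING_MACHINE_CHANGE_LOG_SEQ are made up for this example, and only one number column and one text column are shown.

```sql
-- Hypothetical sequence for the change log ID (name and start value invented;
-- the start value just stays clear of the sample IDs).
CREATE SEQUENCE VENDING_MACHINE_CHANGE_LOG_SEQ START WITH 1000
/

-- Hypothetical sketch of the logging trigger described in the text.
CREATE OR REPLACE TRIGGER VENDING_MACHINES_LOG_TRG
AFTER INSERT OR UPDATE ON VENDING_MACHINES
FOR EACH ROW
DECLARE
  v_now TIMESTAMP(6) := SYSTIMESTAMP;  -- one timestamp for all rows logged by this change
BEGIN
  -- No ON UPDATE CASCADE, so carry an ID change over to existing log rows.
  IF UPDATING AND :OLD.ID <> :NEW.ID THEN
    UPDATE VENDING_MACHINE_CHANGE_LOG
       SET VENDING_MACHINE_ID = :NEW.ID
     WHERE VENDING_MACHINE_ID = :OLD.ID;
  END IF;

  -- Number column: always log on insert; on update log only a real change.
  -- The IS NULL checks make the comparison NULL-safe, so NULL -> NULL is ignored.
  IF INSERTING
     OR :OLD.SNICKERS_COUNT <> :NEW.SNICKERS_COUNT
     OR (:OLD.SNICKERS_COUNT IS NULL AND :NEW.SNICKERS_COUNT IS NOT NULL)
     OR (:OLD.SNICKERS_COUNT IS NOT NULL AND :NEW.SNICKERS_COUNT IS NULL)
  THEN
    INSERT INTO VENDING_MACHINE_CHANGE_LOG
      (ID, CHANGE_TIMESTAMP, VENDING_MACHINE_ID, MODIFIED_COLUMN_NAME, NEW_NUMBER_VALUE)
    VALUES
      (VENDING_MACHINE_CHANGE_LOG_SEQ.NEXTVAL, v_now, :NEW.ID,
       'SNICKERS_COUNT', :NEW.SNICKERS_COUNT);
  END IF;

  -- Text column: same rule, but the value goes into NEW_TEXT_VALUE.
  IF INSERTING
     OR :OLD.FACILITIES_CONTACT <> :NEW.FACILITIES_CONTACT
     OR (:OLD.FACILITIES_CONTACT IS NULL AND :NEW.FACILITIES_CONTACT IS NOT NULL)
     OR (:OLD.FACILITIES_CONTACT IS NOT NULL AND :NEW.FACILITIES_CONTACT IS NULL)
  THEN
    INSERT INTO VENDING_MACHINE_CHANGE_LOG
      (ID, CHANGE_TIMESTAMP, VENDING_MACHINE_ID, MODIFIED_COLUMN_NAME, NEW_TEXT_VALUE)
    VALUES
      (VENDING_MACHINE_CHANGE_LOG_SEQ.NEXTVAL, v_now, :NEW.ID,
       'FACILITIES_CONTACT', :NEW.FACILITIES_CONTACT);
  END IF;

  -- The remaining tracked columns would repeat one of the two blocks above.
END;
/
```

The point that matters for the question is that a logged NULL is a real value: a row with NEW_NUMBER_VALUE and NEW_TEXT_VALUE both NULL means "this column was set to NULL", not "nothing happened".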

Change log example data:

INSERT INTO VENDING_MACHINE_CHANGE_LOG (ID, CHANGE_TIMESTAMP, VENDING_MACHINE_ID,

MODIFIED_COLUMN_NAME, NEW_NUMBER_VALUE, NEW_TEXT_VALUE)

SELECT 167, '11/06/19 05:18', 481, 'MILKY_WAY_COUNT', 5, NULL FROM DUAL UNION ALL

SELECT 168, '11/06/19 05:21', 225, 'SWEDISH_FISH_COUNT', 1, NULL FROM DUAL UNION ALL

SELECT 169, '11/06/19 05:40', 481, 'FACILITIES_ADDRESS', NULL, NULL FROM DUAL UNION ALL

SELECT 170, '11/06/19 05:49', 481, 'STARBURST_COUNT', 4, NULL FROM DUAL UNION ALL

SELECT 171, '11/06/19 06:09', 576, 'FACILITIES_CONTACT', NULL, '' FROM DUAL UNION ALL

SELECT 172, '11/06/19 06:25', 481, 'SWEDISH_FISH_COUNT', 7, NULL FROM DUAL UNION ALL

SELECT 173, '11/06/19 06:40', 481, 'FACILITIES_CONTACT', NULL, 'Audrey' FROM DUAL UNION ALL

SELECT 174, '11/06/19 06:46', 576, 'SNICKERS_COUNT', 13, NULL FROM DUAL UNION ALL

SELECT 175, '11/06/19 06:55', 576, 'FACILITIES_ADDRESS', NULL, '388 Holiday Street' FROM DUAL UNION ALL

SELECT 176, '11/06/19 06:59', 576, 'SWEDISH_FISH_COUNT', NULL, NULL FROM DUAL UNION ALL

SELECT 177, '11/06/19 07:00', 349, 'MILKY_WAY_COUNT', 3, NULL FROM DUAL UNION ALL

SELECT 178, '11/06/19 07:03', 481, 'TWIX_COUNT', 8, NULL FROM DUAL UNION ALL

SELECT 179, '11/06/19 07:11', 349, 'TWIX_COUNT', 15, NULL FROM DUAL UNION ALL

SELECT 180, '11/06/19 07:31', 225, 'FACILITIES_CONTACT', NULL, 'William' FROM DUAL UNION ALL

SELECT 181, '11/06/19 07:49', 576, 'FACILITIES_CONTACT', NULL, 'Brian' FROM DUAL UNION ALL

SELECT 182, '11/06/19 08:28', 481, 'SNICKERS_COUNT', 0, NULL FROM DUAL UNION ALL

SELECT 183, '11/06/19 08:38', 481, 'SKITTLES_COUNT', 7, '' FROM DUAL UNION ALL

SELECT 184, '11/06/19 09:04', 349, 'MILKY_WAY_COUNT', 10, NULL FROM DUAL UNION ALL

SELECT 185, '11/06/19 09:21', 481, 'SNICKERS_COUNT', NULL, NULL FROM DUAL UNION ALL

SELECT 186, '11/06/19 09:33', 225, 'SKITTLES_COUNT', 11, NULL FROM DUAL UNION ALL

SELECT 187, '11/06/19 09:45', 225, 'FACILITIES_CONTACT', NULL, NULL FROM DUAL UNION ALL

SELECT 188, '11/06/19 10:16', 481, 'FACILITIES_CONTACT', 4, 'Lucy' FROM DUAL UNION ALL

SELECT 189, '11/06/19 10:25', 481, 'SNICKERS_COUNT', 10, NULL FROM DUAL UNION ALL

SELECT 190, '11/06/19 10:57', 576, 'SWEDISH_FISH_COUNT', 12, NULL FROM DUAL UNION ALL

SELECT 191, '11/06/19 10:59', 225, 'MILKY_WAY_COUNT', NULL, NULL FROM DUAL UNION ALL

SELECT 192, '11/06/19 11:11', 481, 'STARBURST_COUNT', 6, 'Stanley' FROM DUAL UNION ALL

SELECT 193, '11/06/19 11:34', 225, 'SKITTLES_COUNT', 8, NULL FROM DUAL UNION ALL

SELECT 194, '11/06/19 11:39', 349, 'FACILITIES_CONTACT', NULL, 'Mark' FROM DUAL UNION ALL

SELECT 195, '11/06/19 11:42', 576, 'SKITTLES_COUNT', 8, NULL FROM DUAL UNION ALL

SELECT 196, '11/06/19 11:56', 225, 'TWIX_COUNT', 2, NULL FROM DUAL
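Note that the CHANGE_TIMESTAMP values above are plain string literals, so the insert relies on implicit conversion using the session's NLS_TIMESTAMP_FORMAT. A minimal way to make the sample load predictably, assuming the literals are month/day/two-digit-year with a 24-hour clock (that format is my assumption about the sample data):

```sql
-- Assumption: '11/06/19 05:18' means MM/DD/RR HH24:MI.
-- Swap MM and DD if the data is meant to be day-first.
ALTER SESSION SET NLS_TIMESTAMP_FORMAT = 'MM/DD/RR HH24:MI';

-- Equivalent explicit conversion for a single literal:
SELECT TO_TIMESTAMP('11/06/19 05:18', 'MM/DD/RR HH24:MI') AS CHANGE_TIMESTAMP
FROM   DUAL;
```

Running the ALTER SESSION before the insert statement should be enough; wrapping each literal in TO_TIMESTAMP is the more explicit alternative.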

- DESIRED RESULT - QUERY (VIEW) TO RECONSTRUCT HISTORICAL TABLE ROWS FROM CHANGE LOG

I need to build a view that reconstructs the complete historical VENDING_MACHINES table, using only data from the VENDING_MACHINE_CHANGE_LOG table.

That is, since the change log rows are allowed to be orphaned, rows that have previously been deleted from VENDING_MACHINES should reappear.

The resulting view should allow me to retrieve any VENDING_MACHINES row, exactly as it existed at any specific point in history.

The example data for VENDING_MACHINE_CHANGE_LOG is very short, and not quite enough to produce a complete result ...

But it should be enough to demonstrate the desired outcome.

Ultimately I think analytical functions will be required.

But I'm new to SQL analytical functions, and I'm new to Oracle and Apex as well.

So I'm not sure how to approach this - What's the best way to reconstruct the original table rows?

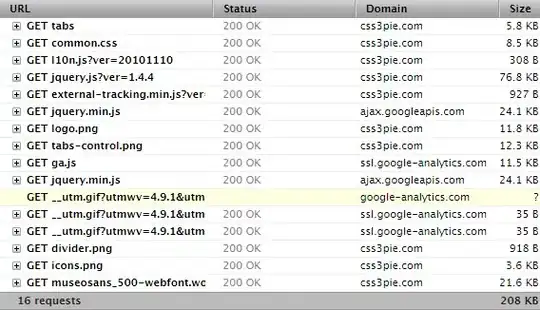

Here's what the desired result should look like (sorted by CHANGE_TIMESTAMP):

And here is the same desired result, additionally sorted by VENDING_MACHINE_ID:

I have built a simple query to pull the most recent column values for every VENDING_MACHINE_ID, but I don't think this method is suitable for this enormous task.

I think I need to use analytical functions instead, to get better performance and flexibility. (Or maybe I'm wrong?)

select vmcl.ID,

vmcl.CHANGE_TIMESTAMP,

vmcl.VENDING_MACHINE_ID,

vmcl.MODIFIED_COLUMN_NAME,

vmcl.MODIFIED_COLUMN_TYPE,

vmcl.NEW_NUMBER_VALUE,

vmcl.NEW_TEXT_VALUE

from ( select sqvmcl.VENDING_MACHINE_ID,

sqvmcl.MODIFIED_COLUMN_NAME,

max(sqvmcl.CHANGE_TIMESTAMP) as LAST_CHANGE_TIMESTAMP

from VENDING_MACHINE_CHANGE_LOG sqvmcl

where sqvmcl.CHANGE_TIMESTAMP <= SYSTIMESTAMP /* or a specified timestamp */

group by sqvmcl.VENDING_MACHINE_ID, sqvmcl.MODIFIED_COLUMN_NAME ) sq

left join VENDING_MACHINE_CHANGE_LOG vmcl on vmcl.VENDING_MACHINE_ID = sq.VENDING_MACHINE_ID

and vmcl.MODIFIED_COLUMN_NAME = sq.MODIFIED_COLUMN_NAME

and vmcl.CHANGE_TIMESTAMP = sq.LAST_CHANGE_TIMESTAMP

Notice the left join specifically hits the unique index for the VENDING_MACHINE_CHANGE_LOG table - this is by design.
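For comparison, here is a rough sketch of the kind of analytic-function approach I have in mind. It carries each column's most recent logged value forward per machine with LAST_VALUE ... IGNORE NULLS. Because a logged NULL is a legitimate value, the sketch wraps it in a sentinel string first, so that only "column not touched by this log row" is skipped. The view name VENDING_MACHINES_HISTORY and the sentinel '#NULL#' are assumptions made for this example, and only two of the eight columns are shown.

```sql
-- Hypothetical view name; '#NULL#' is an assumed sentinel that must never
-- occur as a real logged value.
CREATE OR REPLACE VIEW VENDING_MACHINES_HISTORY AS
SELECT ID                 AS CHANGE_ID,
       CHANGE_TIMESTAMP,
       VENDING_MACHINE_ID,
       -- Number column: wrap the logged value as text so a logged NULL becomes
       -- the sentinel, carry the latest wrapper forward, then unwrap it back
       -- to a NUMBER (or to a genuine NULL).
       TO_NUMBER(NULLIF(
         LAST_VALUE(CASE WHEN MODIFIED_COLUMN_NAME = 'SNICKERS_COUNT'
                         THEN NVL(TO_CHAR(NEW_NUMBER_VALUE), '#NULL#') END)
           IGNORE NULLS
           OVER (PARTITION BY VENDING_MACHINE_ID
                 ORDER BY CHANGE_TIMESTAMP, ID
                 ROWS BETWEEN UNBOUNDED PRECEDING AND CURRENT ROW),
         '#NULL#'))       AS SNICKERS_COUNT,
       -- Text column: same idea without the number conversion.
       NULLIF(
         LAST_VALUE(CASE WHEN MODIFIED_COLUMN_NAME = 'FACILITIES_CONTACT'
                         THEN NVL(NEW_TEXT_VALUE, '#NULL#') END)
           IGNORE NULLS
           OVER (PARTITION BY VENDING_MACHINE_ID
                 ORDER BY CHANGE_TIMESTAMP, ID
                 ROWS BETWEEN UNBOUNDED PRECEDING AND CURRENT ROW),
         '#NULL#')        AS FACILITIES_CONTACT
       -- The remaining tracked columns would follow the same two patterns.
FROM   VENDING_MACHINE_CHANGE_LOG
/

-- Point-in-time lookup: the latest reconstructed row per machine as of a
-- given moment (the timestamp literal and its format are example values).
SELECT *
FROM  (SELECT h.*,
              ROW_NUMBER() OVER (PARTITION BY VENDING_MACHINE_ID
                                 ORDER BY CHANGE_TIMESTAMP DESC, CHANGE_ID DESC) AS RN
       FROM   VENDING_MACHINES_HISTORY h
       WHERE  CHANGE_TIMESTAMP <= TO_TIMESTAMP('11/06/19 09:00', 'MM/DD/RR HH24:MI'))
WHERE  RN = 1;
```

Each output row corresponds to one change log row and shows the machine as it stood right after that change; columns that have no log entry yet for a machine stay NULL. I haven't measured how this performs with 90+ columns, so treat it as a starting point rather than a proven solution.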