I know that stochastic gradient descent always gives different results. What are the best practices to reduce this variance today? I tried to predict a simple function with two different approaches and every time I train them I see very different results.

Input data:

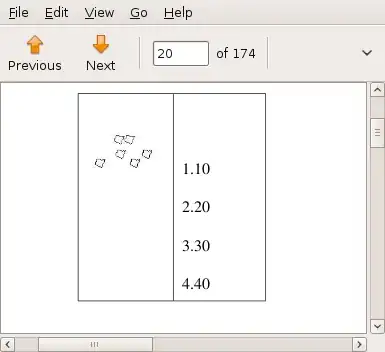

def plot(model_out):

fig, ax = plt.subplots()

ax.grid(True, which='both')

ax.axhline(y=0, color='k', linewidth=1)

ax.axvline(x=0, color='k', linewidth=1)

ax.plot(x_line, y_line, c='g', linewidth=1)

ax.scatter(inputs, targets, c='b', s=8)

ax.scatter(inputs, model_out, c='r', s=8)

a = 5.0; b = 3.0; x_left, x_right = -16., 16.

NUM_EXAMPLES = 200

noise = tf.random.normal((NUM_EXAMPLES,1))

inputs = tf.random.uniform((NUM_EXAMPLES,1), x_left, x_right)

targets = a * tf.sin(inputs) + b + noise

x_line = tf.linspace(x_left, x_right, 500)

y_line = a * tf.sin(x_line) + b

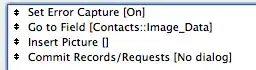

Keras training:

model = tf.keras.Sequential()

model.add(tf.keras.layers.Dense(50, activation='relu', input_shape=(1,)))

model.add(tf.keras.layers.Dense(50, activation='relu'))

model.add(tf.keras.layers.Dense(1))

model.compile(loss='mse', optimizer=tf.keras.optimizers.Adam(0.01))

model.fit(inputs, targets, batch_size=200, epochs=2000, verbose=0)

print(model.evaluate(inputs, targets, verbose=0))

plot(model.predict(inputs))

Manual training:

model = tf.keras.Sequential()

model.add(tf.keras.layers.Dense(50, activation='relu', input_shape=(1,)))

model.add(tf.keras.layers.Dense(50, activation='relu'))

model.add(tf.keras.layers.Dense(1))

optimizer = tf.keras.optimizers.Adam(0.01)

@tf.function

def train_step(inpt, targ):

with tf.GradientTape() as g:

model_out = model(inpt)

model_loss = tf.reduce_mean(tf.square(tf.math.subtract(targ, model_out)))

gradients = g.gradient(model_loss, model.trainable_variables)

optimizer.apply_gradients(zip(gradients, model.trainable_variables))

return model_loss

train_ds = tf.data.Dataset.from_tensor_slices((inputs, targets))

train_ds = train_ds.repeat(2000).batch(200)

def train(train_ds):

for inpt, targ in train_ds:

model_loss = train_step(inpt, targ)

tf.print(model_loss)

train(train_ds)

plot(tf.squeeze(model(inputs)))