I've converted a DataFrame to an RDD:

val rows: RDD[Row] = df.orderBy($"Date").rdd

And now I'm trying to convert it back:

val df2 = spark.createDataFrame(rows)

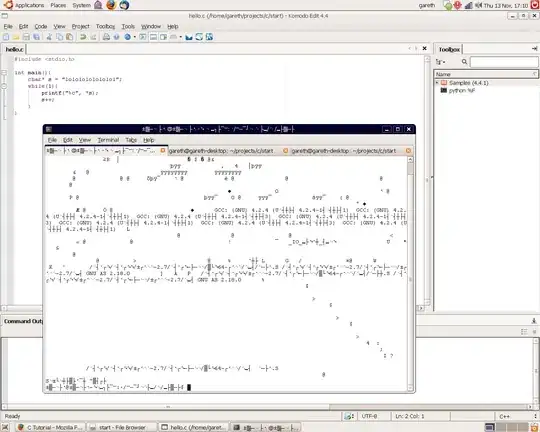

But I'm getting an error:

Edit: I also tried calling `toDF` on the RDD:

rows.toDF()

This also produces an error:

Cannot resolve symbol toDF

Even though I included this line earlier:

import spark.implicits._

Full code:

import org.apache.spark._
import org.apache.spark.sql._
import org.apache.spark.sql.expressions._
import org.apache.spark.sql.functions._
import org.apache.spark.sql.types._
import scala.util._
import org.apache.spark.mllib.rdd.RDDFunctions._
import org.apache.spark.rdd._

object Playground {
  def main(args: Array[String]): Unit = {
    val spark = SparkSession
      .builder
      .appName("Playground")
      .config("spark.master", "local")
      .getOrCreate()
    import spark.implicits._
    val sc = spark.sparkContext

    val df = spark.read.csv("D:/playground/mre.csv")
    df.show()

    val rows: RDD[Row] = df.orderBy($"Date").rdd
    val df2 = spark.createDataFrame(rows)
    rows.toDF()
  }
}
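For comparison, here is a minimal sketch of one way the round trip might be written, assuming the schema of the original DataFrame can be reused. A `Row` carries no static type information, so this version passes the schema explicitly through the two-argument `createDataFrame(rowRDD, schema)` overload. The object name `RoundTripSketch`, the helper `roundTrip`, and the in-memory sample data are hypothetical stand-ins, not part of the code above.

```scala
import org.apache.spark.rdd.RDD
import org.apache.spark.sql.{DataFrame, Row, SparkSession}

object RoundTripSketch {

  // Hypothetical helper: sort by a column, drop down to an RDD[Row],
  // then rebuild a DataFrame by reusing the original schema.
  def roundTrip(spark: SparkSession, df: DataFrame, sortCol: String): DataFrame = {
    val rows: RDD[Row] = df.orderBy(sortCol).rdd
    // The schema is supplied explicitly because it cannot be inferred from Row.
    spark.createDataFrame(rows, df.schema)
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession
      .builder
      .appName("RoundTripSketch")
      .master("local[*]")
      .getOrCreate()
    import spark.implicits._

    // Assumed sample data standing in for the CSV file from the question.
    val df = Seq(("2020-01-02", 2), ("2020-01-01", 1)).toDF("Date", "Value")

    val df2 = roundTrip(spark, df, "Date")
    df2.printSchema()
    df2.show()

    spark.stop()
  }
}
```

The same reasoning would explain why `rows.toDF()` does not resolve: as far as I understand, `spark.implicits._` only adds `toDF` to RDDs whose element type has an implicit `Encoder` (case classes, tuples, primitives), and plain `Row` is not one of them.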