Consider following table

id || C1 || C2 || C3 || Timestamp

--------------------------------

1 || a || b || 1 || t1

2 || a || b || 1 || t2

3 || a || b || 2 || t3

4 || a || b || 2 || t4

5 || a || b || 2 || t5

6 || b || d || 3 || t6

7 || b || d || 3 || t7

C3 contains a distinct value for a given combination of C1 and C2. A given combination of C1, C2 and C3 can have multiple rows with differing timestamps(like rows 4,5).

However, duplication has lead to creation of more than one C3 for a given C1 and C2 pair. So here rows 3, 4 and 5 violated the rule as a new C3(2) was introduced. C3 is always unique given C1 and C2.

I want to keep the latest( w.r.t timestamp) combination of C1, C2, C3 and delete all the older ones. So only rows 3,4,5 should exist and 1,2 should be removed.

By latest I mean that older C3 values for given C1,C2 should be removed, so in this case rows 1,2 are removed but 3,4,5 remain.

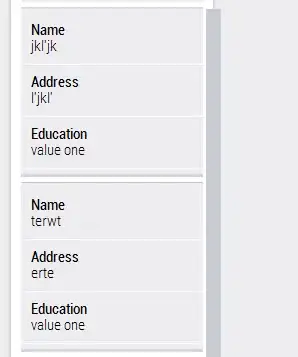

For example, given unique nid and original columns, all the rows with mock_id YR should be removed.

I have tried various queries and joins, but unable to figure out something that would solve this exact problem.